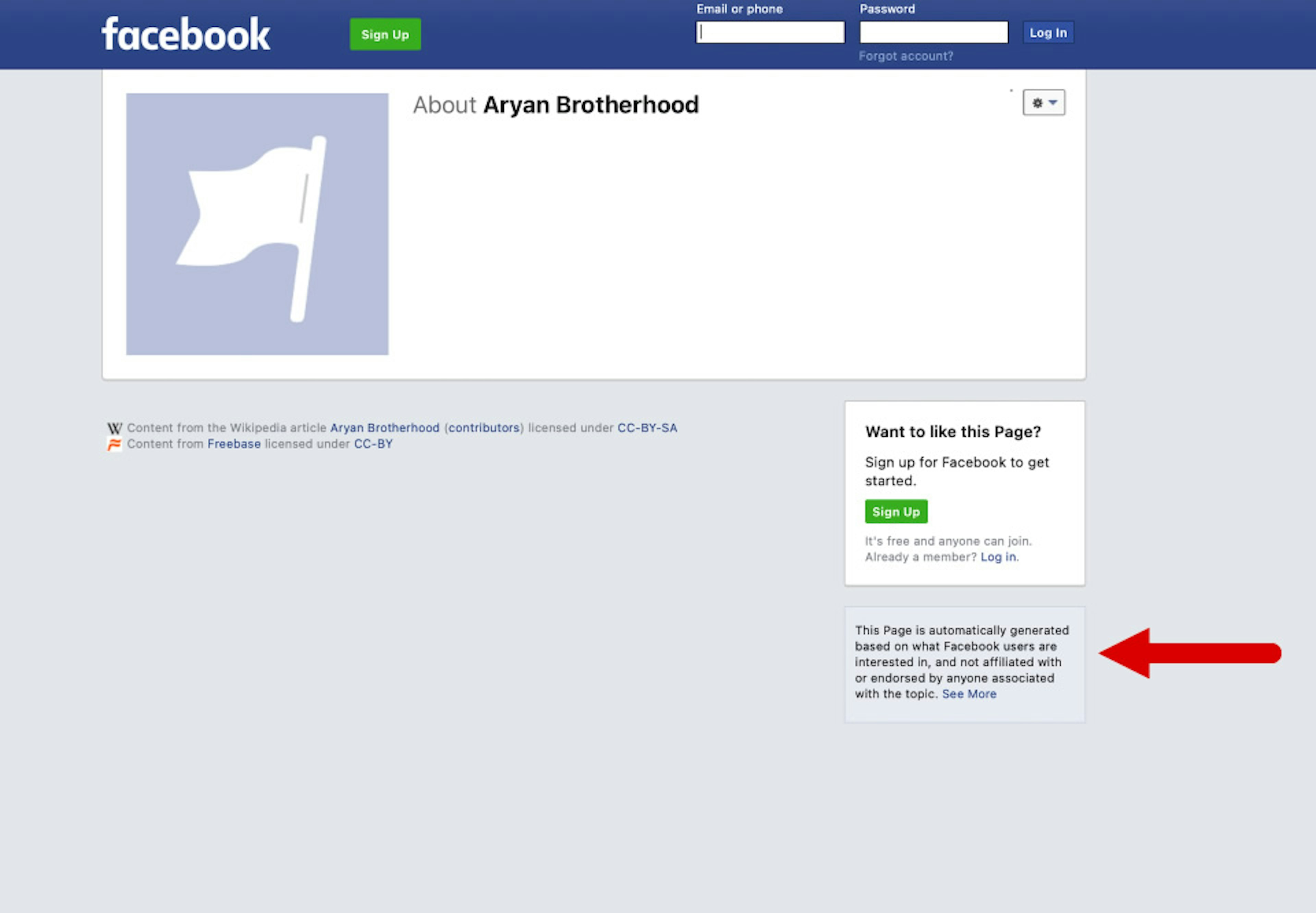

When Facebook Chief Product Officer Chris Cox was confronted with an image of an Aryan Brotherhood Facebook page at a congressional hearing this week, he bristled at the suggestion that his company had put up the page for the white supremacist prison gang.

“Senator, respectfully, we wouldn’t have put this page up ourselves,” Cox told Sen. Gary Peters (D-Mich.) during testimony Wednesday.

The truth is: Facebook not only put up the page, but actually created it.

For more than a decade, Facebook has been automatically generating pages for white supremacist groups, domestic militias, and foreign terrorist organizations—including groups that Facebook has explicitly banned from its platform.

Facebook has never explained why it does this, but the practice gives broader visibility to extremist organizations, many of which are involved in real-world violence.

The Tech Transparency Project (TTP) has been raising alarm about these auto-generated pages for several years. Here’s a primer on this Facebook feature—and why it’s dangerous.

What are auto-generated Facebook Pages?

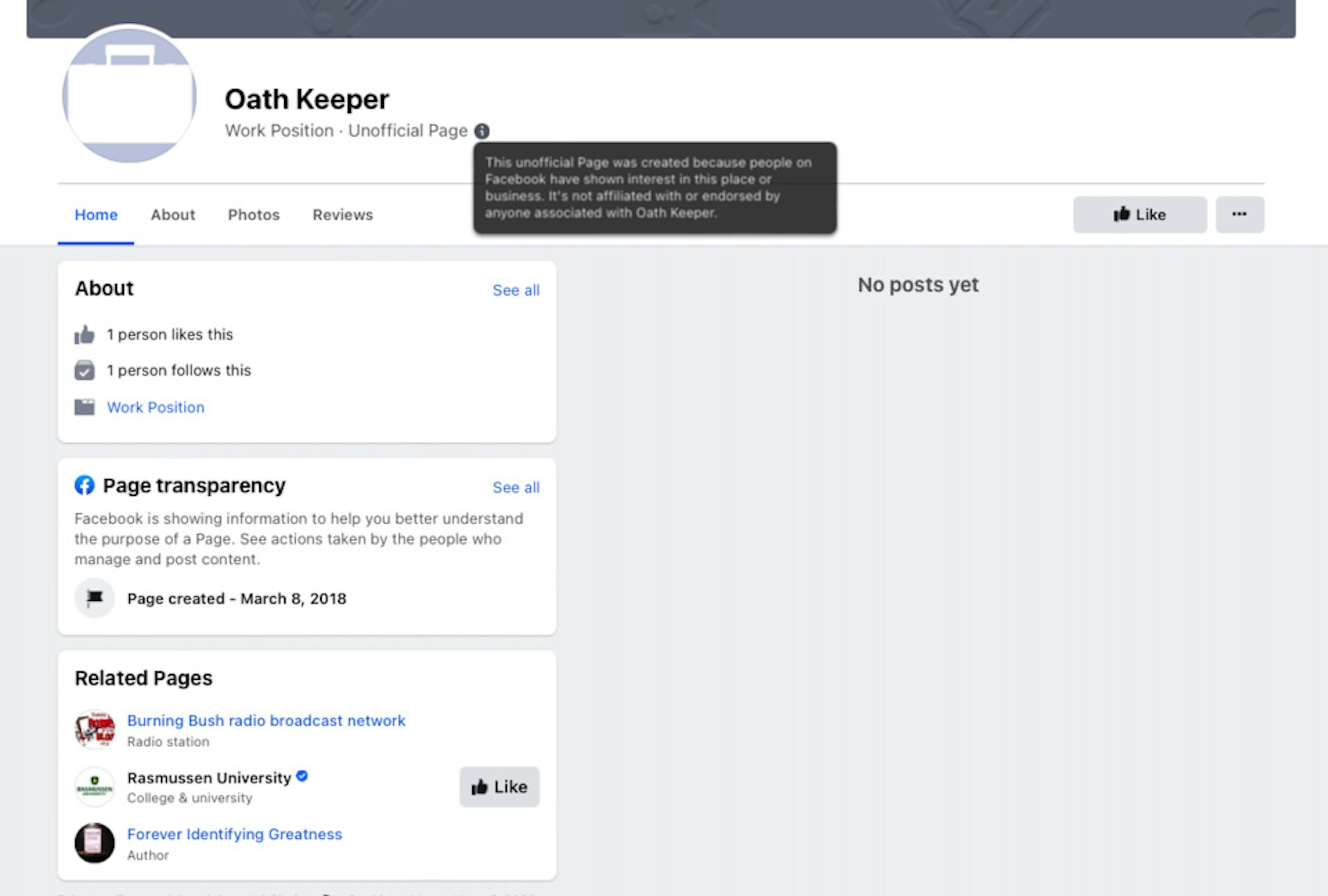

Facebook auto-generates pages on its platform when a user lists a job, interest, or location in their profile that does not have an existing page.

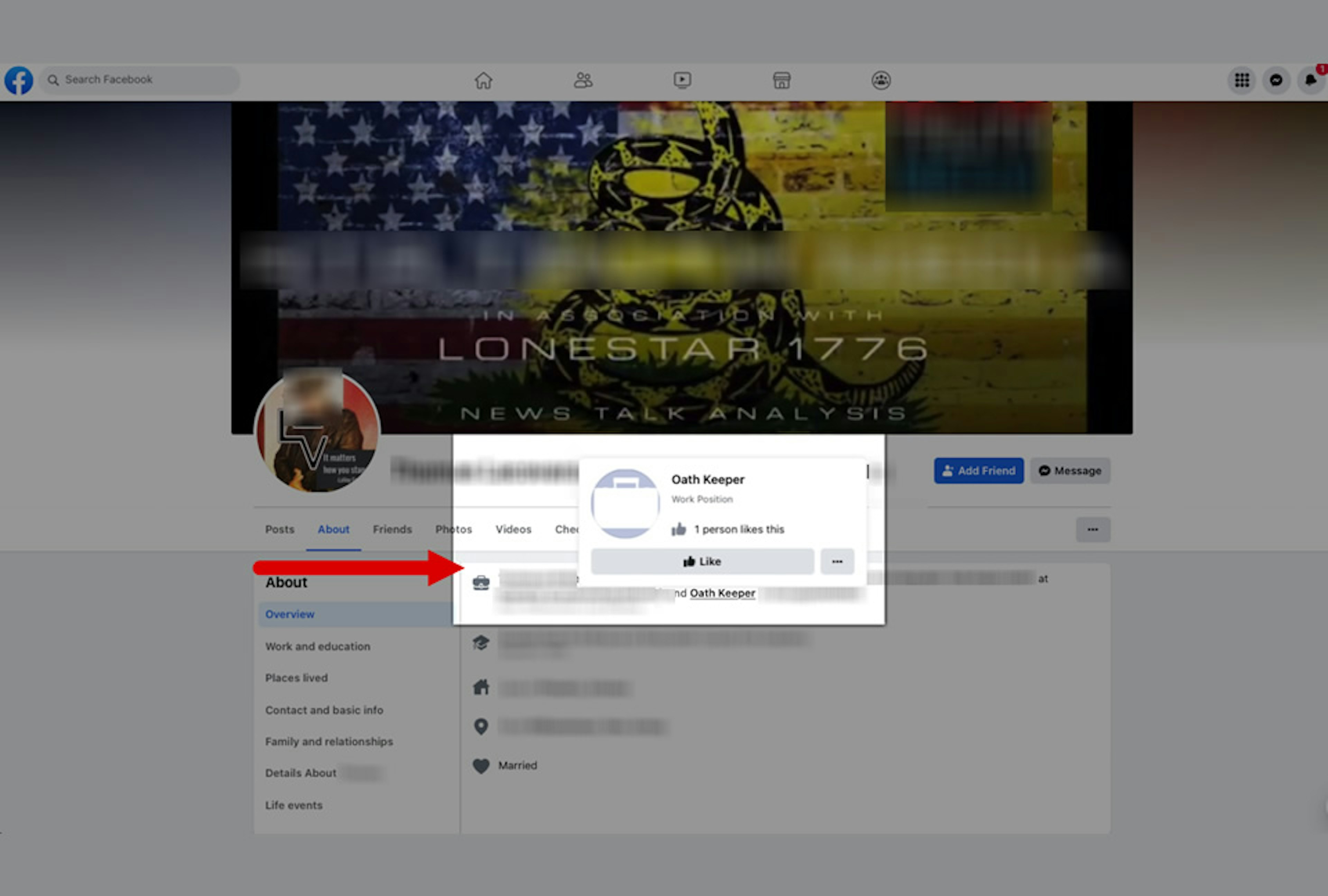

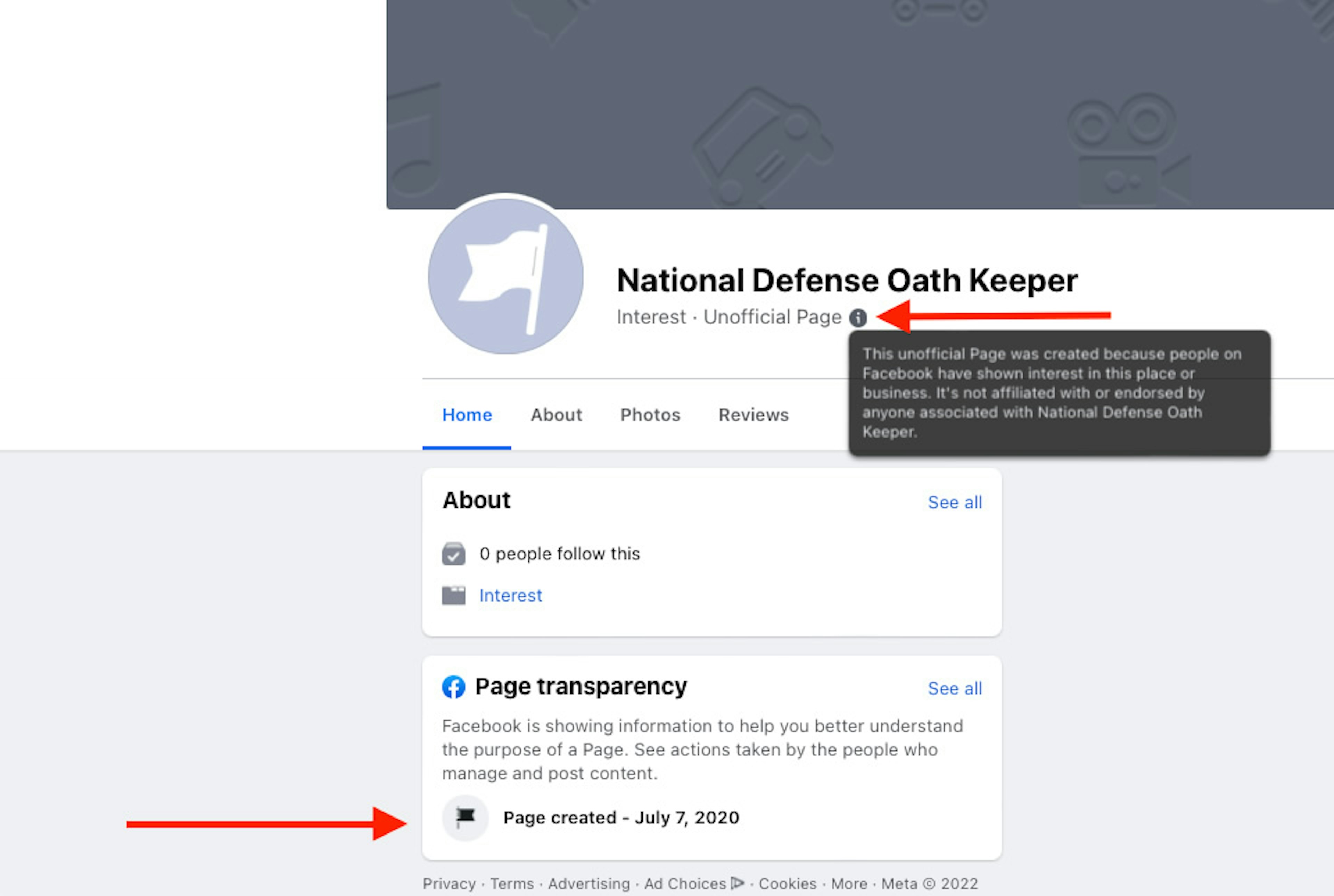

This can be relatively innocuous if the page pertains to a clothing store or donut shop. But Facebook also generates these pages for extremist and terrorist groups. For example, when a Facebook user lists their work position as “Oath Keeper”—a member of the far-right anti-government group—Facebook auto-generates a business page for that group.

A whistleblower first raised the issue of Facebook auto-generating pages for extremist groups in a 2019 petition filed with the Securities and Exchange Commission. The petition identified dozens of auto-generated pages for terrorist and white supremacist organizations.

Facebook hasn’t explained its reasoning for auto-generating pages. In a since-deleted Help page about the feature, the company described only how such pages come to exist:

A Page may exist for your business even if someone from your business didn’t create it. For example, when someone checks into a place that doesn’t have a Page, an unmanaged Page is created to represent the location. A Page may also be generated from a Wikipedia article.

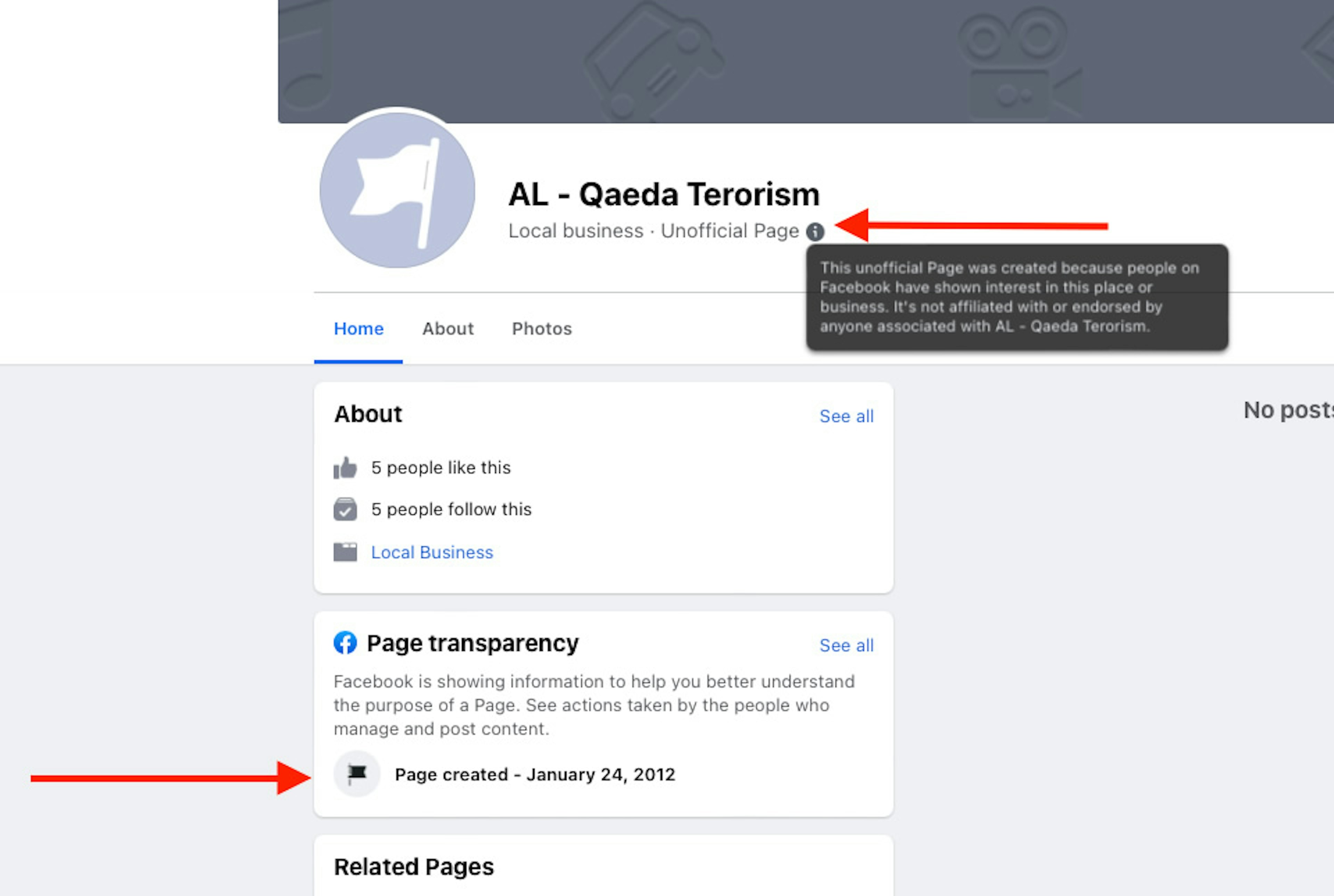

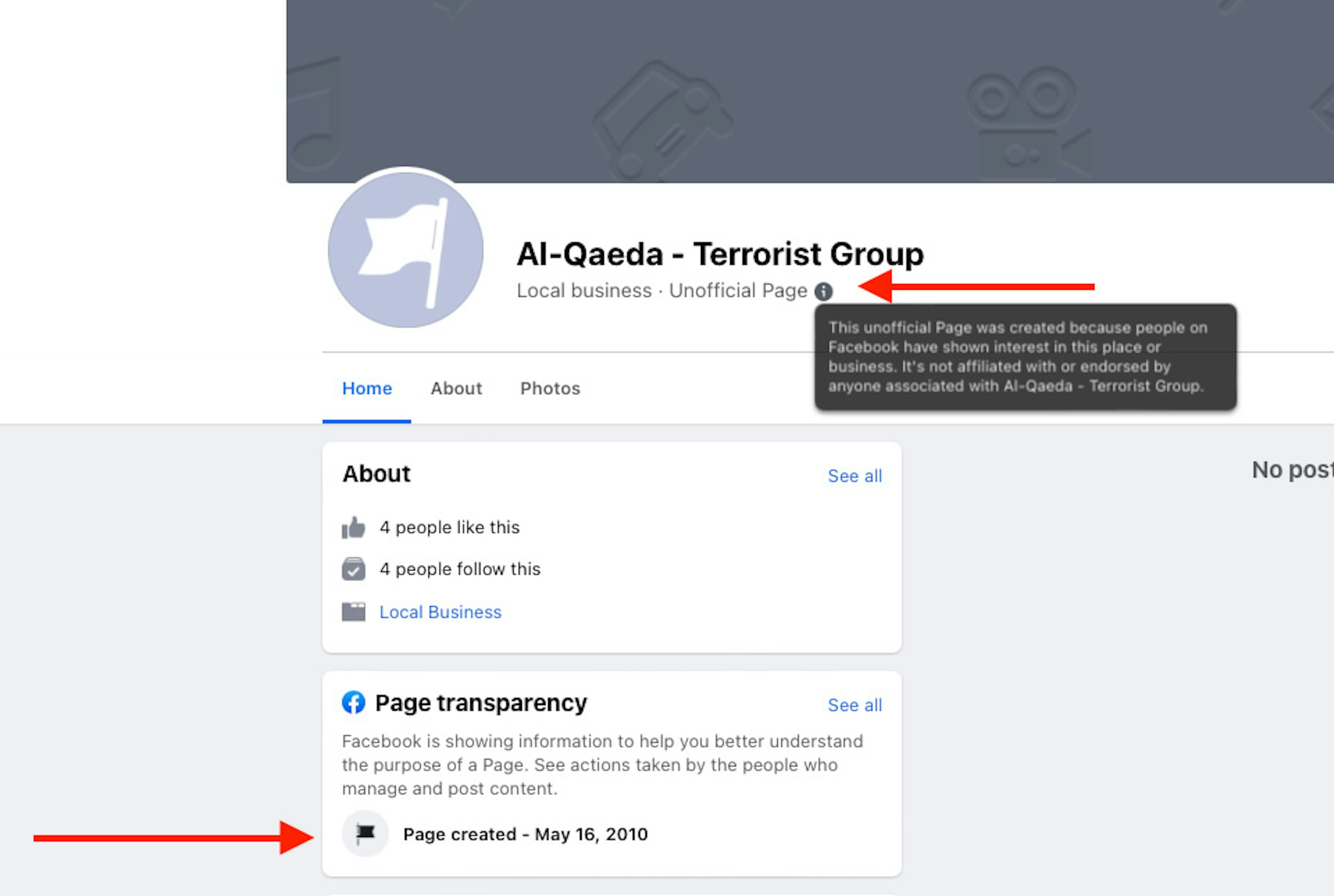

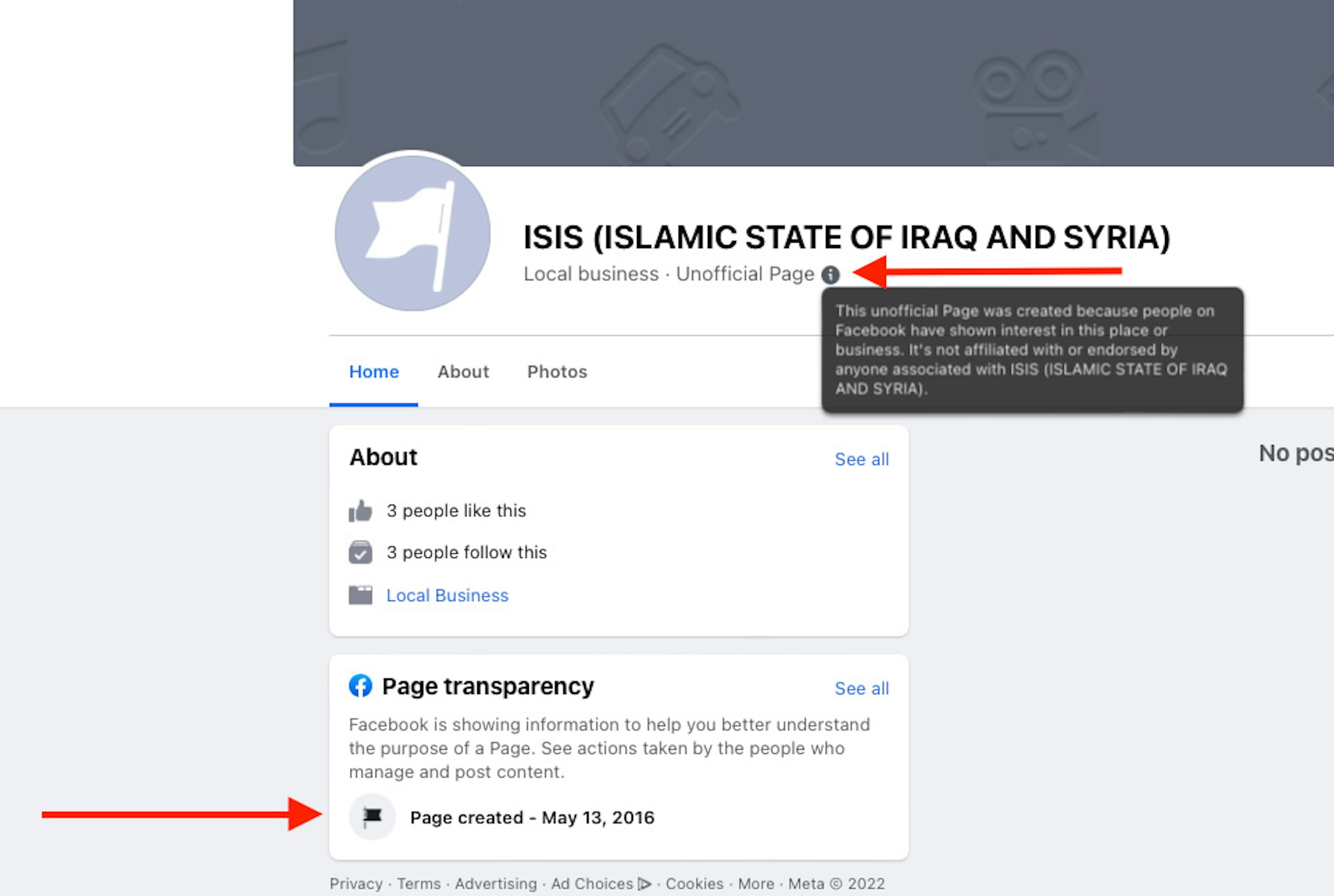

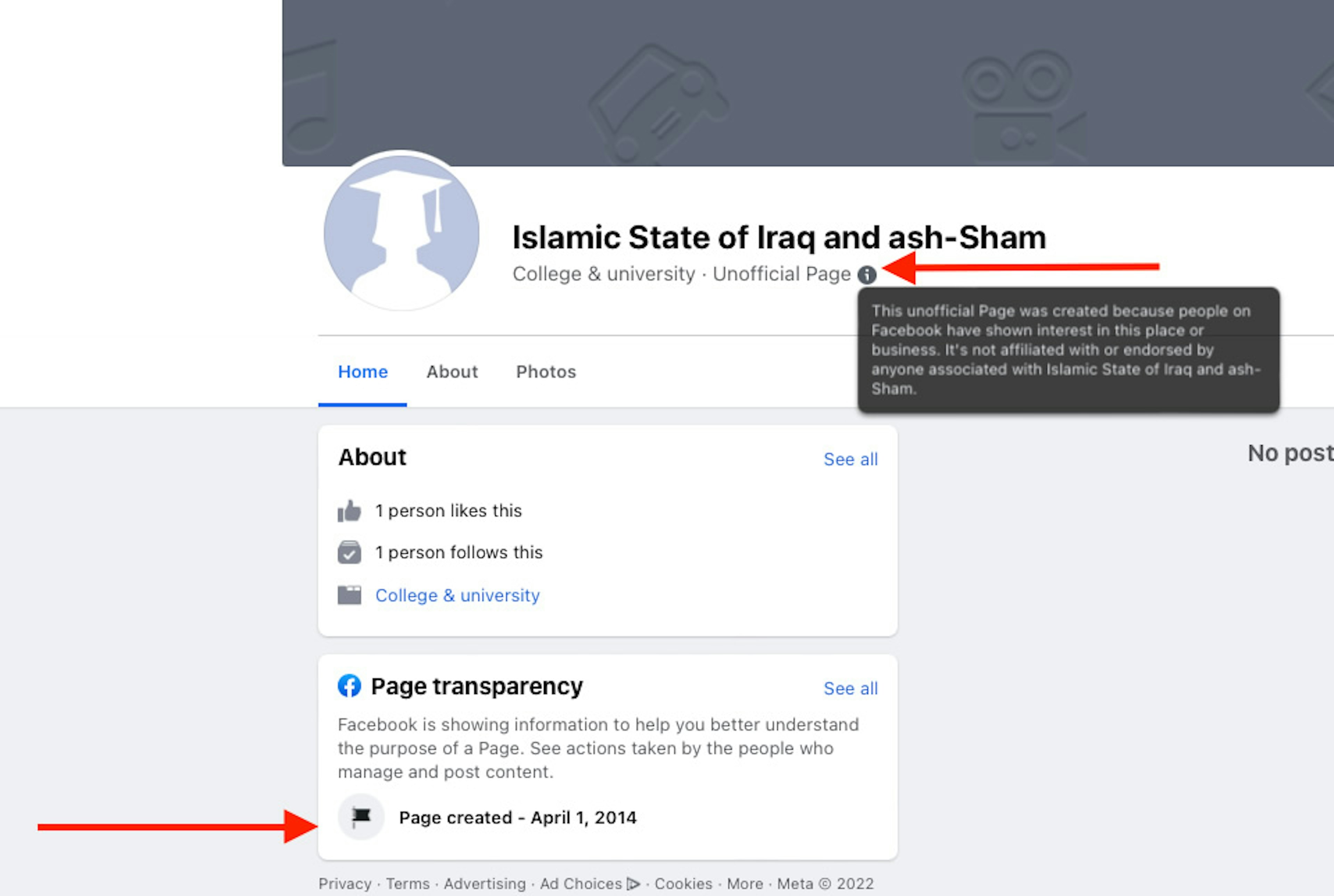

How can you tell a page is auto-generated?

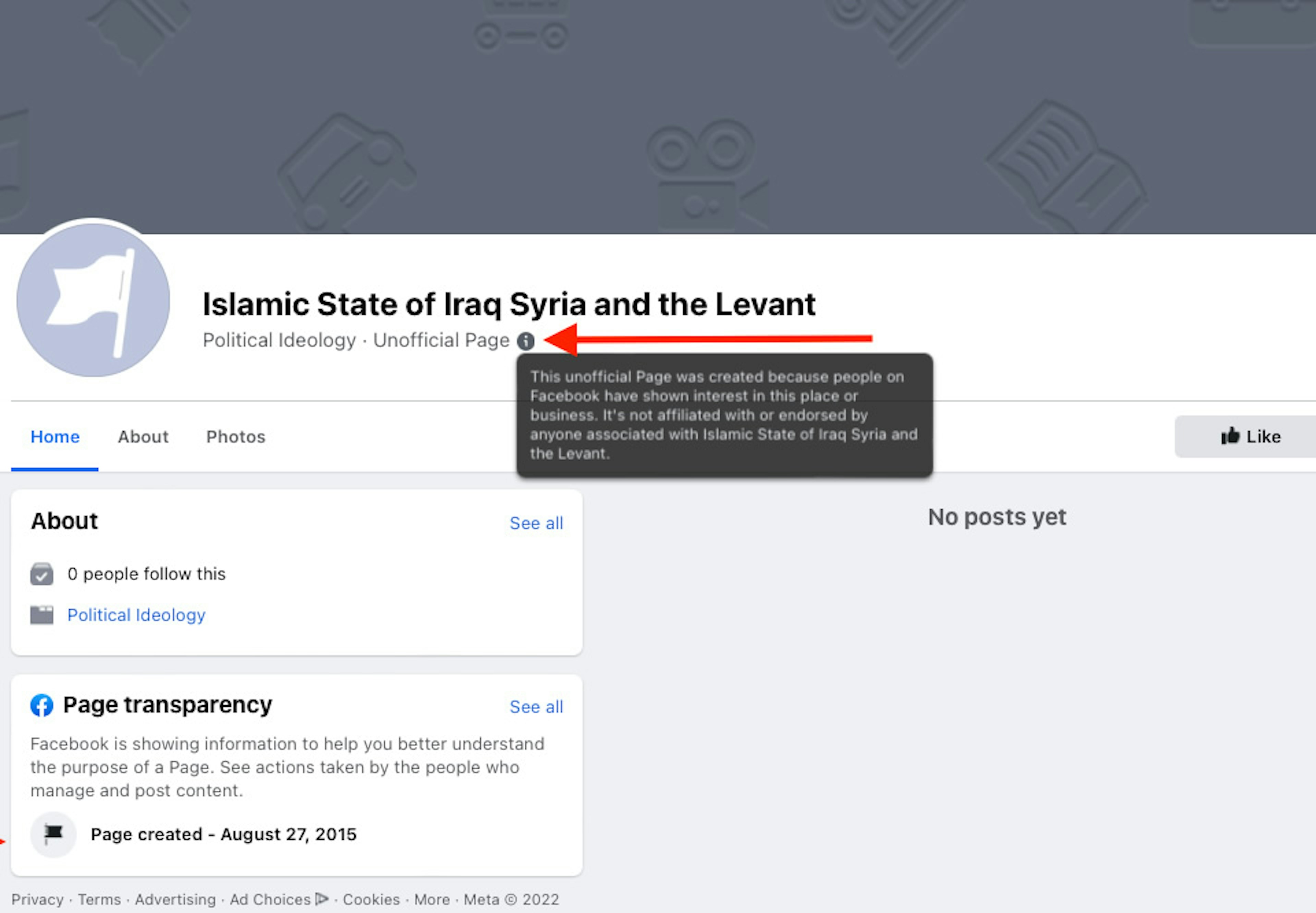

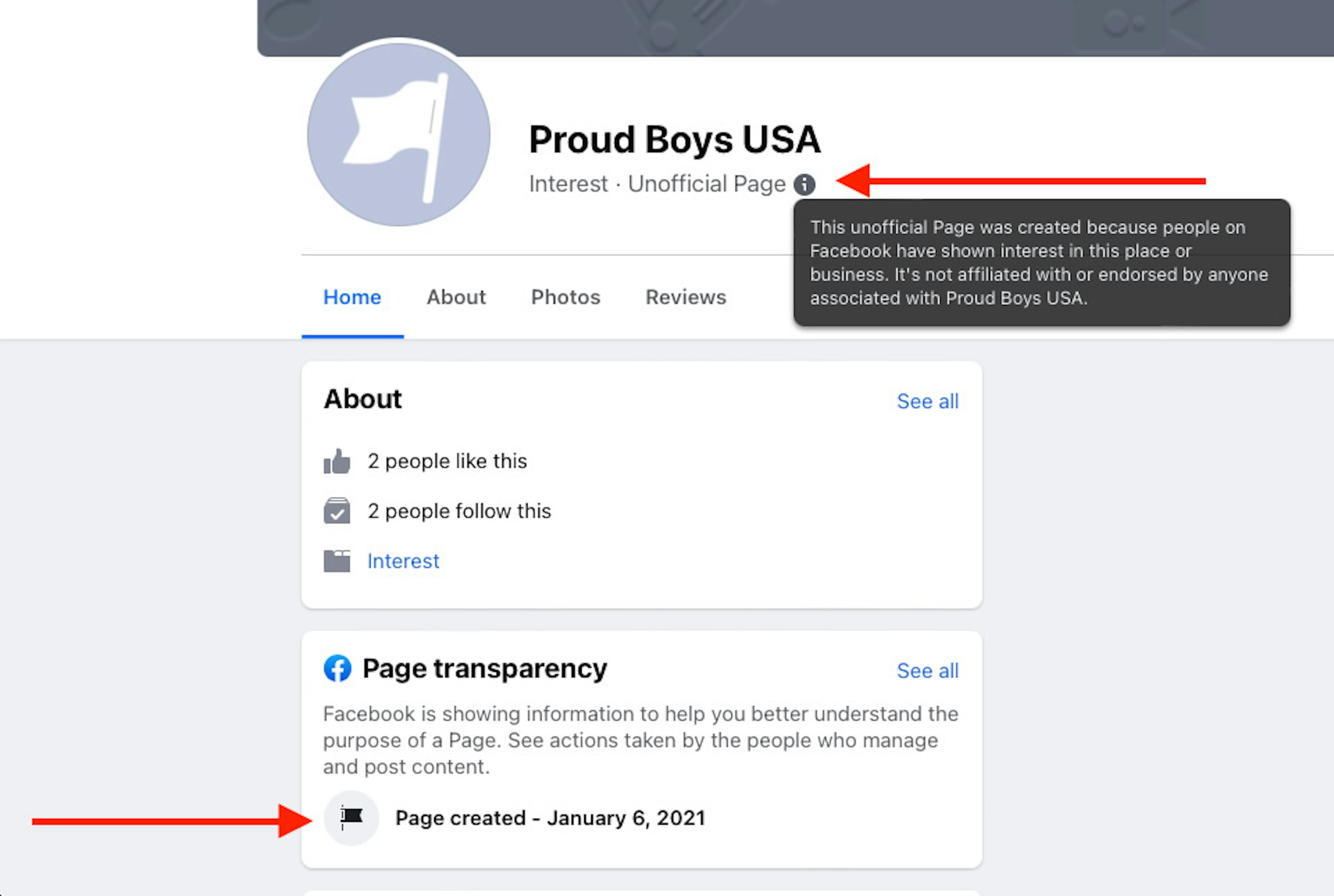

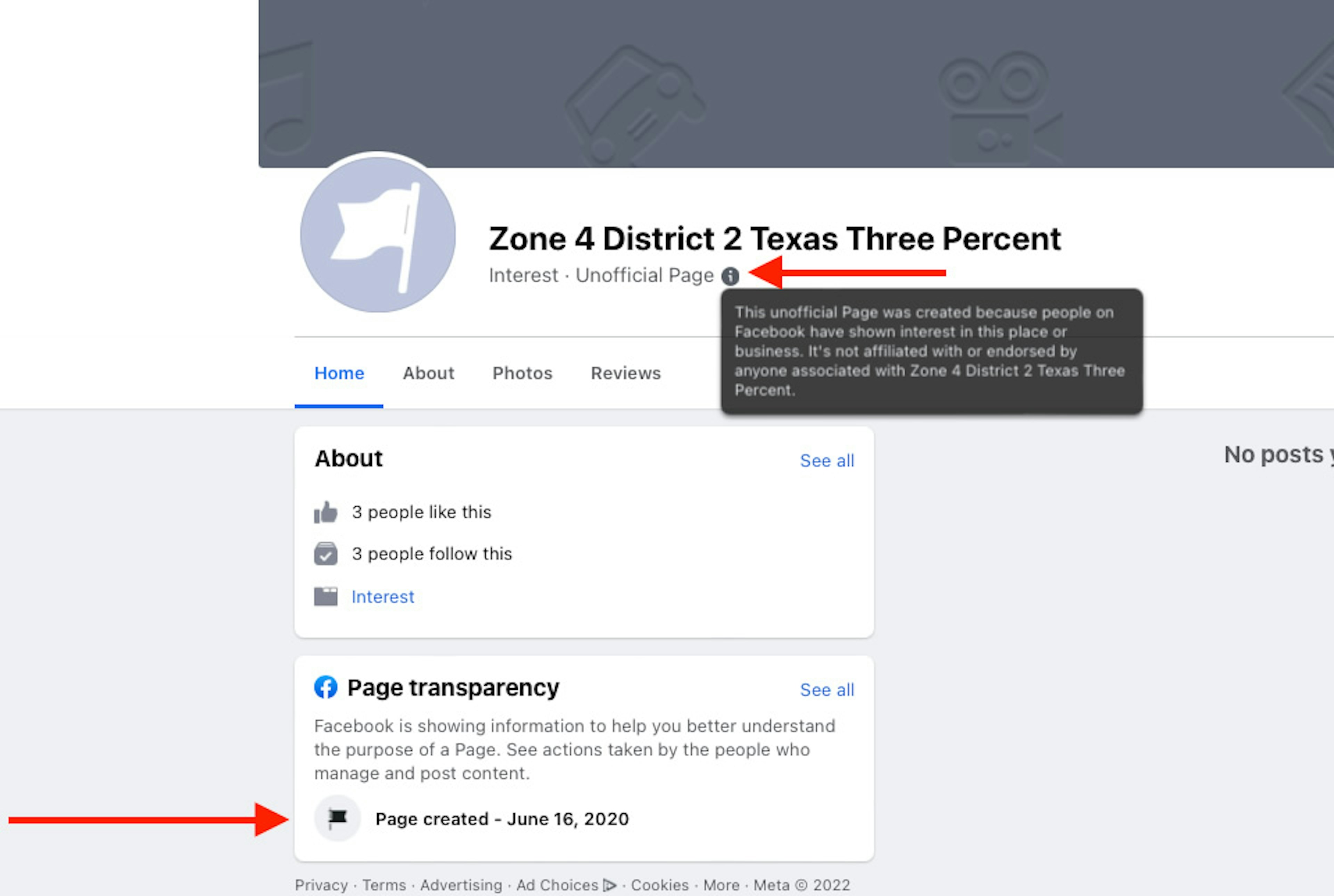

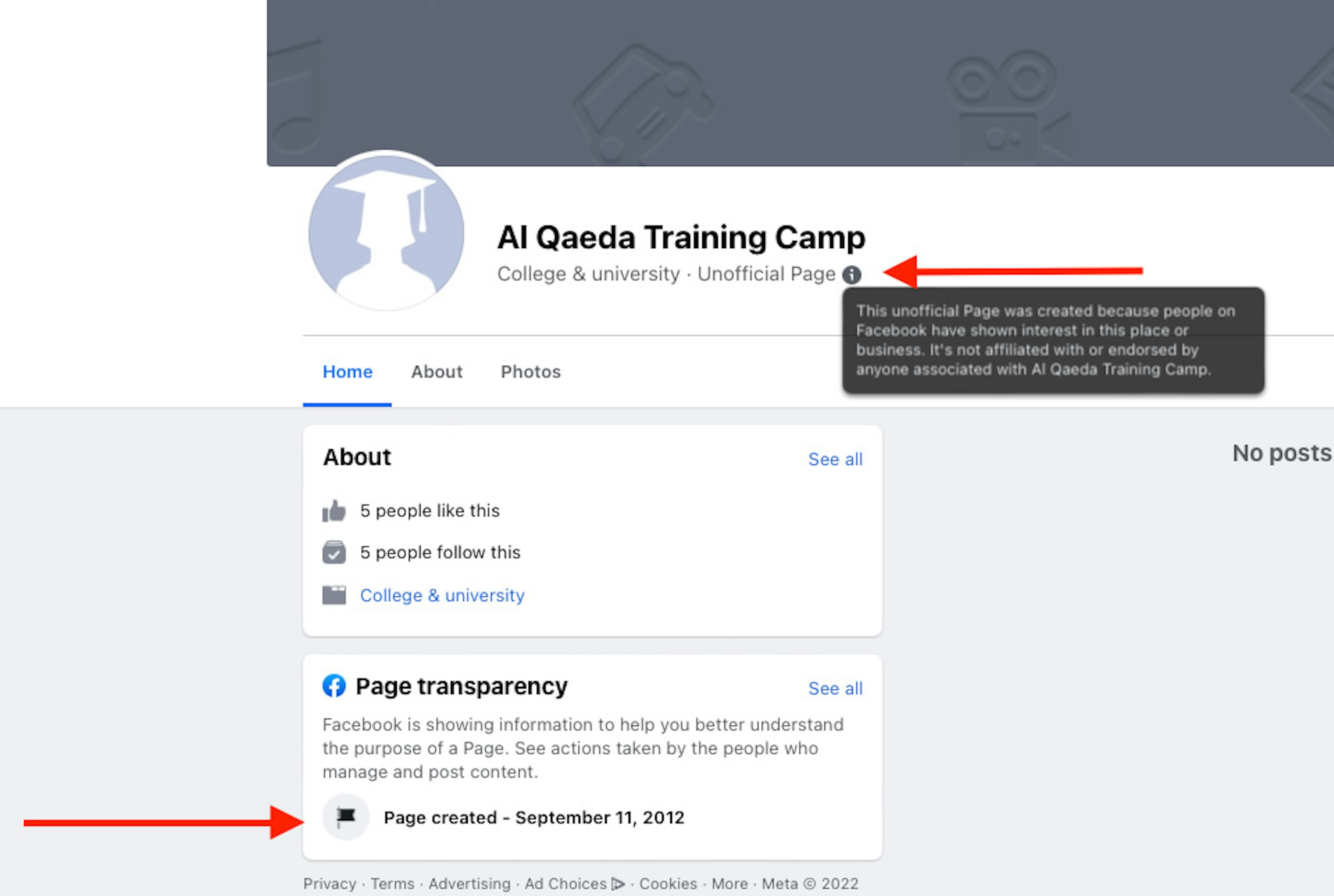

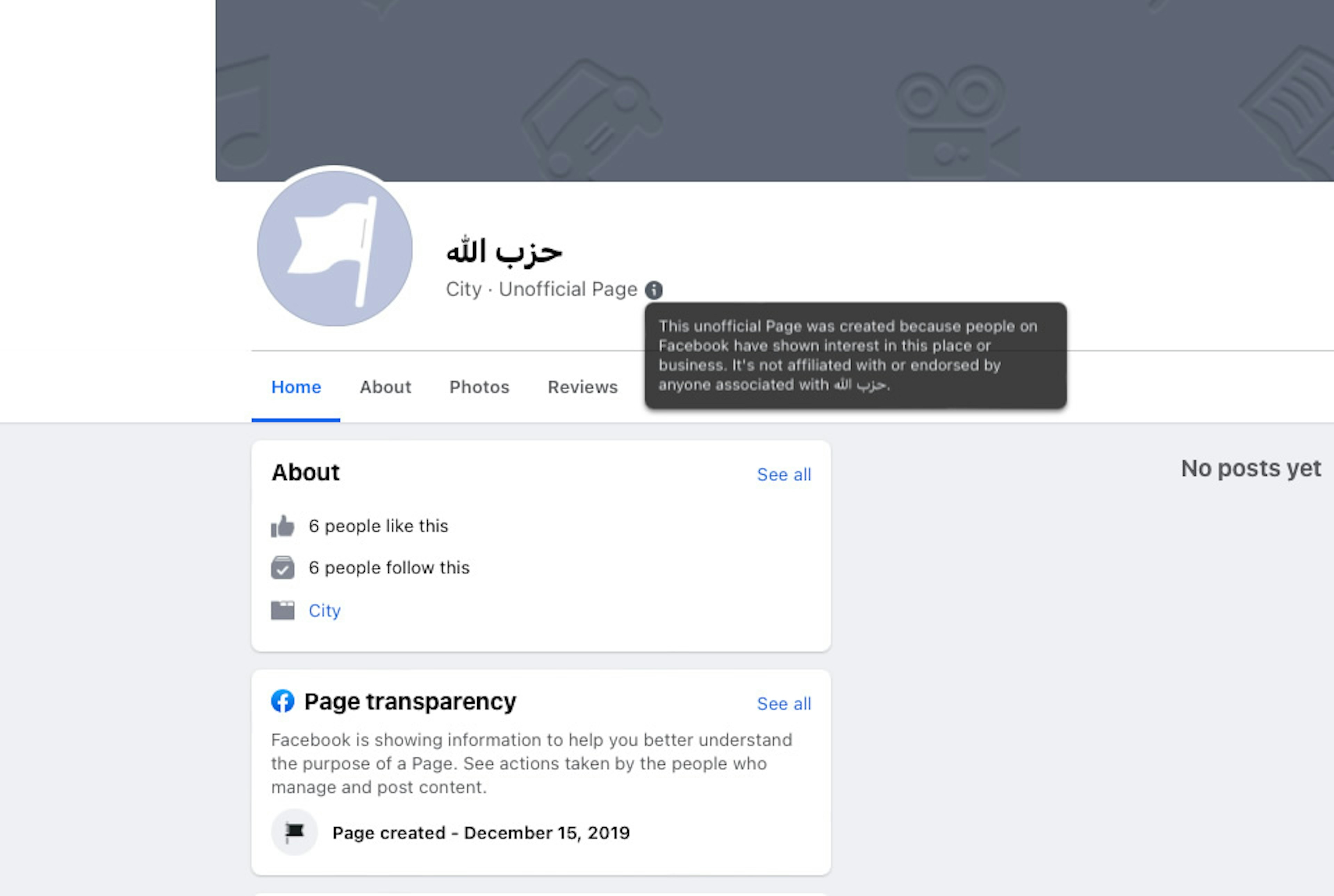

Facebook’s auto-generated pages are identifiable by a small “Unofficial Page” icon below the page name. Hovering over the icon brings up text that states that the page was auto-generated or created because people have “shown interest” in it.

The text identifying the auto-generated pages can vary, describing the pages as “unofficial” or “automatically generated.”

Does Facebook know it’s doing this?

Facebook has been aware for years that it is auto-generating pages for extremist groups. TTP has flagged the issue in multiple reports since 2020, and lawmakers have repeatedly pressed Facebook executives about it.

Facebook Chief Product Officer Chris Cox, the company’s lead on apps and technologies, was the latest to face questions on the issue at a Senate Homeland Security hearing this week.

At a November 2020 hearing, Sen. Dick Durbin (D-Ill.) pressed Facebook CEO Mark Zuckerberg about auto-generated and other white supremacist content on Facebook: “Are you looking the other way, Mr. Zuckerberg, in a potentially dangerous situation?”

And Rep. Max Rose (D-N.Y.) criticized Facebook for creating “Al Qaeda community groups” during a June 2019 hearing with Facebook’s then-head of global policy management, Monika Bickert.

It appears that Facebook was aware of the issue at the time of Rose’s questioning. In the hours before the hearing that featured Bickert’s testimony, the company removed many of the auto-generated pages cited in the whistleblower report.

How has Facebook responded?

Facebook appears to take down auto-generated extremist pages only when it faces critical media coverage or questions from lawmakers. As far as TTP can tell, the company hasn’t modified its practice of auto-generating pages, including for extremist and terrorist groups.

That can produce disturbing results. For example, Facebook auto-generated a page for “Proud Boys USA” on January 6, 2021, the day of the attack on the U.S. Capitol. Facebook created this page even though it has banned the far-right Proud Boys since 2018. The former leader of the Proud Boys and other members of the group have been charged with seditious conspiracy in connection with the Capitol riot.

Facebook has auto-generated pages for other groups whose members have been charged in the Jan. 6 insurrection, including the Oath Keepers and Three Percenters.

TTP has also identified Facebook auto-generated pages for U.S.-designated terrorist groups in both English and Arabic.

One page titled “Al Qaeda Training Camp” was created on September 11, 2012—the anniversary of the 9/11 terror attacks. The U.S. designated al-Qaeda as a foreign terrorist organization in 1999.

Facebook bans terrorists, and said in 2018 that its detection technology specifically focused on al-Qaeda and its affiliates. But when TTP checked on Friday, the page was still active.

Facebook has created pages for other U.S.-designated foreign terrorist organizations including the Islamic State and Hezbollah.

Why this matters

By auto-generating these pages, Facebook is actively creating content for extremist organizations that are involved in real-world violence. The pages increase the groups’ visibility—and legitimacy—on the world’s largest social network.

These pages are not generated by Facebook users, but by Facebook itself. This is an important distinction.

For years, Facebook and other tech platforms have shielded themselves from liability over user-generated content by pointing to Section 230 of the 1996 Communications Decency Act. The Section 230 provision, which has been described as the 26 words that created the internet, states, “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” In layman’s terms, that means a company can’t be found liable for content it didn’t create.

But with auto-generated pages, Facebook is the publisher—and the protections afforded by Section 230 may not apply.

TTP will continue to monitor the company’s auto-generation feature. Take a deeper look into what our reports have found so far:

- May 21, 2020 – White Supremacist Groups are Thriving on Facebook

- March 24, 2021 – Facebook’s Militia Mess

- January 4, 2022 – A Year After Capitol Riot, Facebook Remains an Extremist Breeding Ground

- August 10, 2022 – Facebook Profits from White Supremacist Groups

- September 7, 2022 – Facebook Boogaloo Crackdown Falls Short as Extremists Return