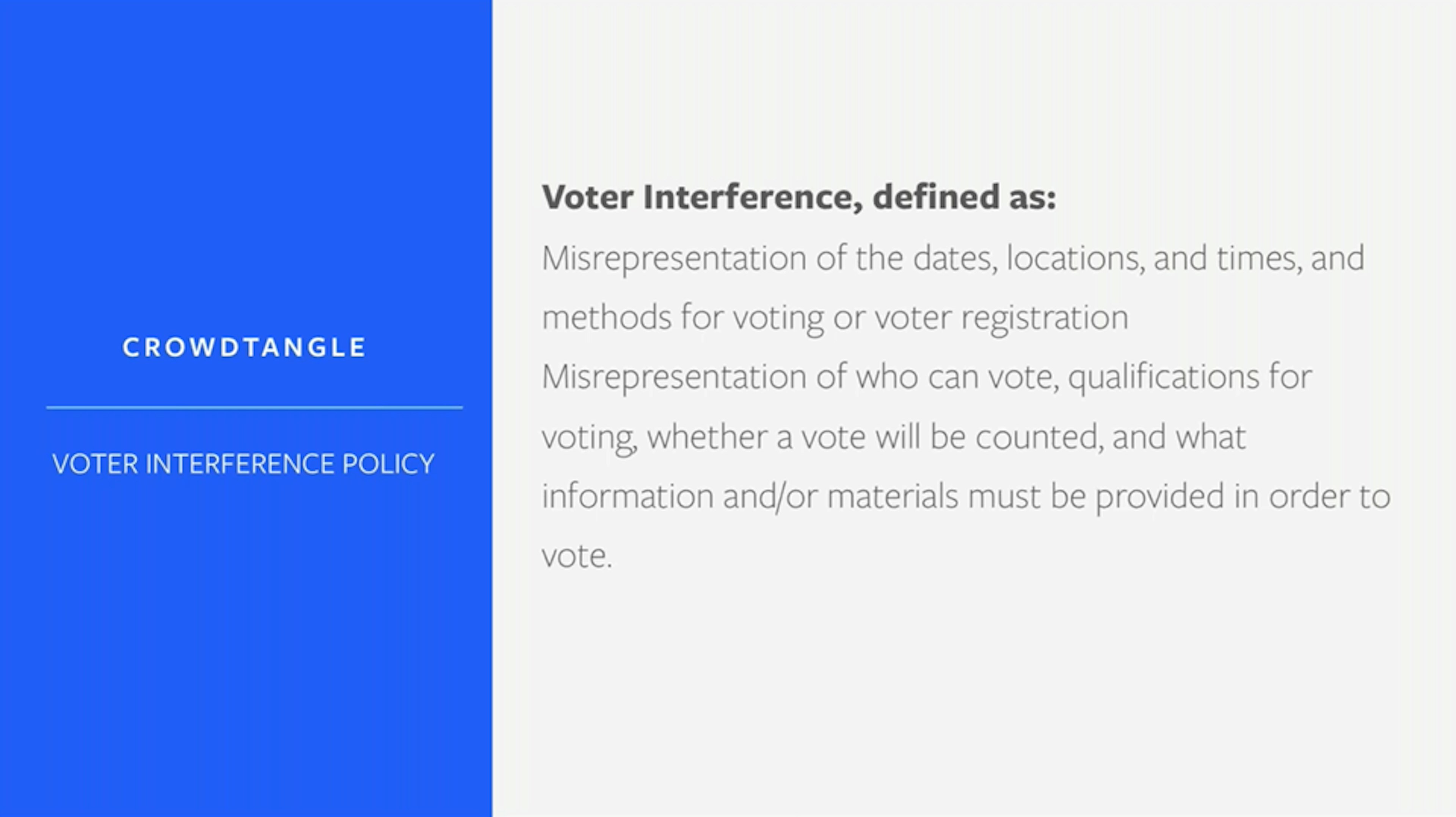

Facebook is asking state officials to monitor for voter interference on the platform, but the tracking tool it’s offering gives only a limited view of Facebook activity and doesn’t include some of the major pathways for disinformation, according to a Tech Transparency Project (TTP) investigation.

Starting last fall, Facebook representatives reached out to state officials, offering to customize dashboards for them on CrowdTangle, a Facebook-owned social media metrics tool, to “provide more transparency” around the 2020 election, according to emails obtained by TTP through open records requests. Facebook encouraged the officials to use the dashboards to identify and report instances of voter interference to the company.

But CrowdTangle has some significant blind spots that limit its ability to track election disinformation. The tool covers public Facebook groups and pages, but doesn’t include posts from most individual users or from private Facebook groups, which are known as hubs for conspiracy theories and hate speech. When one state official pointed out that election disinformation is often spread by individual users and asked if that data could be added to the dashboard, a Facebook rep rejected the idea, saying it couldn’t be included because of privacy concerns.

The emails suggest that Facebook, despite numerous announcements about its efforts to combat election interference in 2020, is attempting to pass some responsibility to state officials for identifying voting disinformation—part of a pattern in which the company puts the onus on others, including journalists and researchers, to flag bad content on the platform.

At the same time, Facebook isn’t providing states with all the data they need to spot disinformation, such as voter suppression efforts, ahead of the November election. A Facebook executive recently said CrowdTangle doesn’t represent “what most people see” on the platform, casting further doubt on the tool’s usefulness.

Threats to election integrity

Facebook reached out to state officials in October 2019 to offer customized “live display” dashboards for CrowdTangle, a tool that’s used to track how content spreads across social platforms. Khalid Pagan, a Facebook government and politics outreach manager, wrote that the company wanted to “provide more transparency” around elections and was “working closely with elections authorities from around the country.”

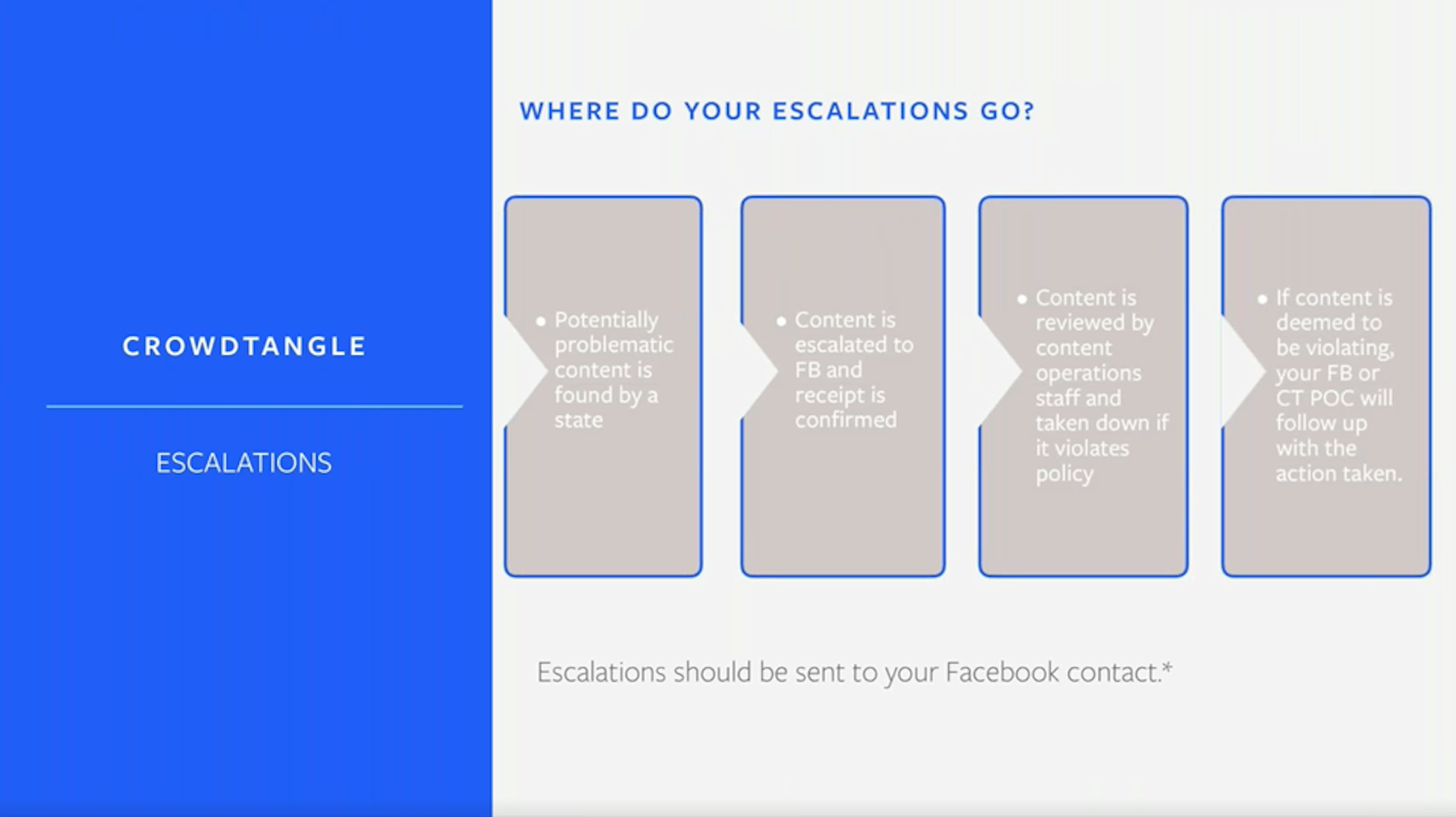

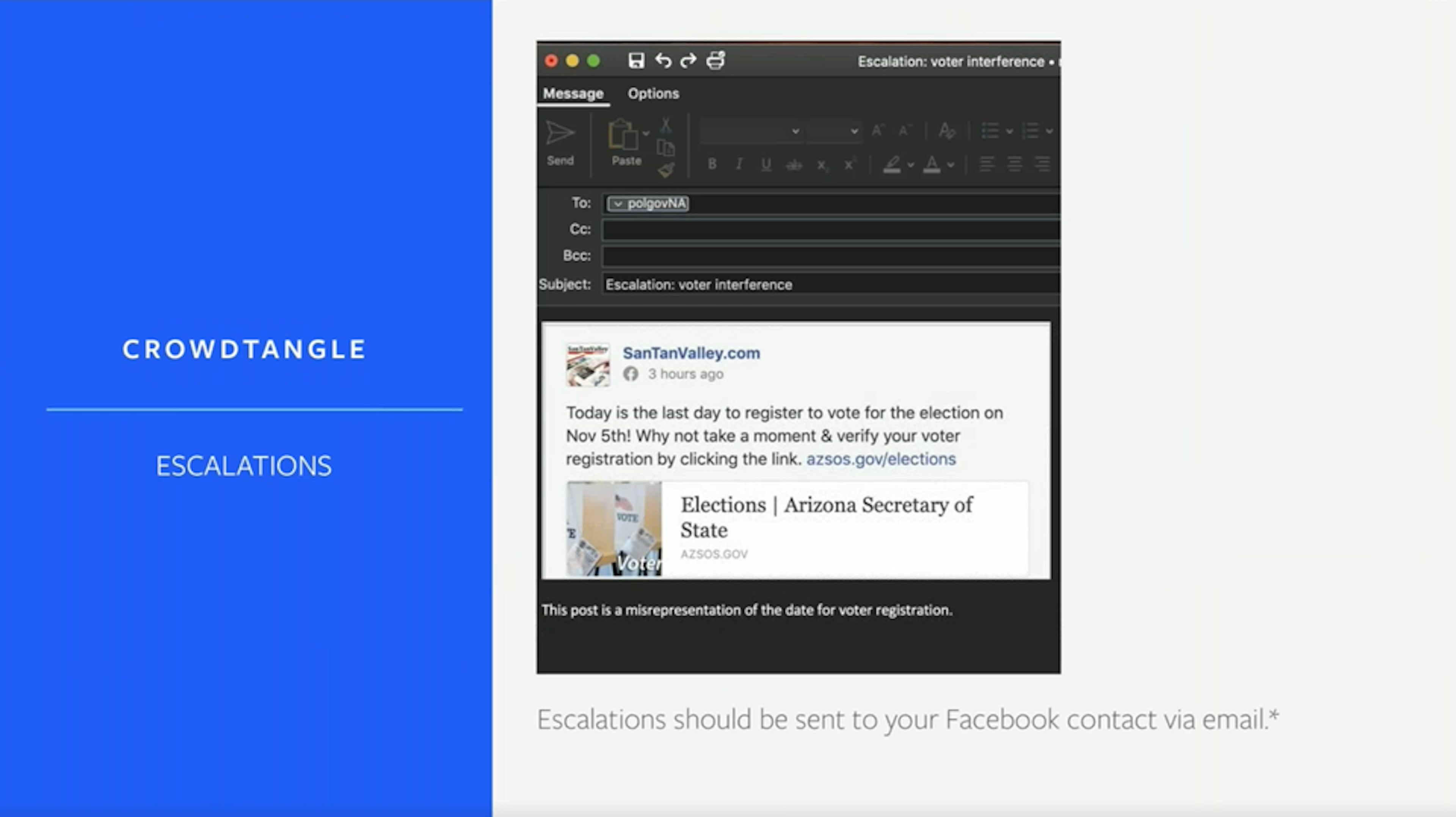

An accompanying training video spelled out how Facebook thought state officials should use the dashboards. “We want to leverage the on-the-ground knowledge that you have on threats to election integrity in your state,” Nichole Sessego, a representative of CrowdTangle, told state officials during the presentation.

“You guys know and have an ear to the ground on what’s happening,” another unidentified Facebook rep said during the presentation. “The minute you guys flag any sort of voter interference content to the team here at Facebook, we are immediately sending that to a review team and we are going to turn that around as quickly as humanly possible.”

But the limits of CrowdTangle’s data became apparent in a Facebook email exchange with one Vermont official. Eric Covey, the chief of staff for Vermont’s secretary of state, noted that much of the election disinformation on Facebook comes from individual users and asked, “Is there a reason why there isn’t a category for public posts from the general population?”

Pagan, the Facebook outreach manager, responded that such data can’t be included because of “privacy concerns” and said it would not be added as a feature.

Facebook representatives have continued to push CrowdTangle with state election officials this year, according to the emails obtained by TTP. In March 2020, Jannelle Watson, another member of Facebook’s politics and government outreach team, wrote to Colorado’s director of elections, Judd Choate, that “each state election authority will have their own CrowdTangle account” and offered additional trainings “to teach you how to leverage the full tool.”

Mixed messages

Facebook has promoted CrowdTangle—which it acquired in November 2016—to state officials in other ways.

In March 2020, Will Castleberry, Facebook’s vice president of U.S. state policy and community engagement, wrote to state attorneys general to tout the company’s efforts to combat Covid-19 disinformation and help people and businesses deal with the pandemic. Castleberry encouraged the AGs to use CrowdTangle to track “some of the most seen content about coronavirus.”

But Facebook’s heavy promotion of CrowdTangle to state officials was undermined just a few months later by comments from a Facebook executive who publicly questioned its utility.

In a July 20 Twitter exchange with New York Times reporter Kevin Roose, who’s been using CrowdTangle data to draw attention to how right-wing outlets dominate Facebook’s top-performing posts, John Hegeman, Facebook’s head of News Feed, argued that the CrowdTangle lists compiled by Roose “don’t represent what most people see on Facebook.”

Hegeman said a “more accurate” way to measure popularity is to look at links with the most reach, which he defined as impressions, the term for the number of times a post or ad is displayed on a screen. He tweeted a series of charts with this data, but said for now it was only “internally available” at Facebook.

That argument appears to undercut what Facebook has been telling state officials—that CrowdTangle is an effective way to track the “most seen content” on the platform.

Major blind spots

CrowdTangle says its data does not include individual Facebook users, except for a “very small subset” of verified profiles that includes politicians, celebrities and other high-profile people. But individual users are among the major drivers of election disinformation on the platform. If the user’s profile is public, their posts can be seen by anyone on the platform, making it easy for the content to spread widely.

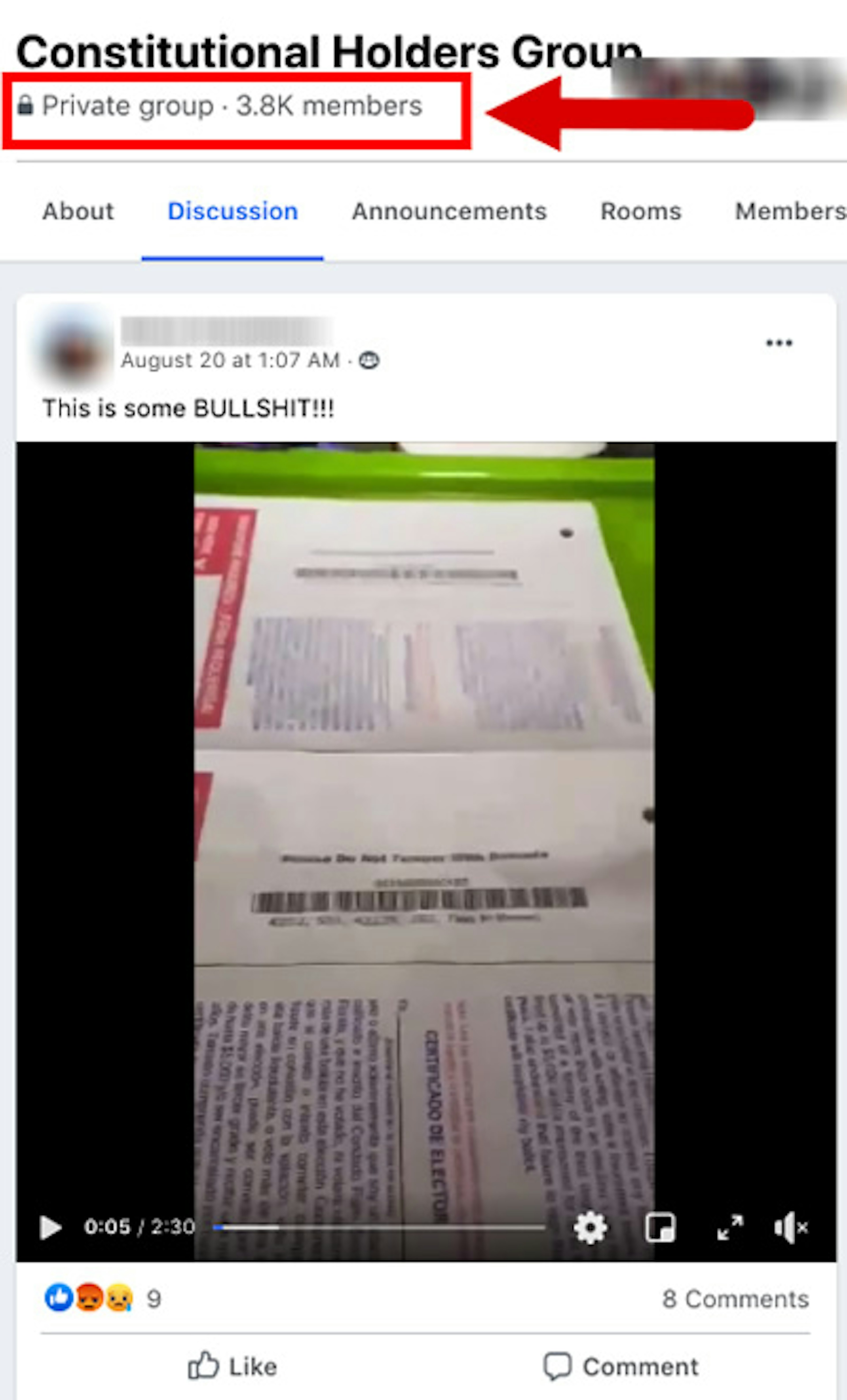

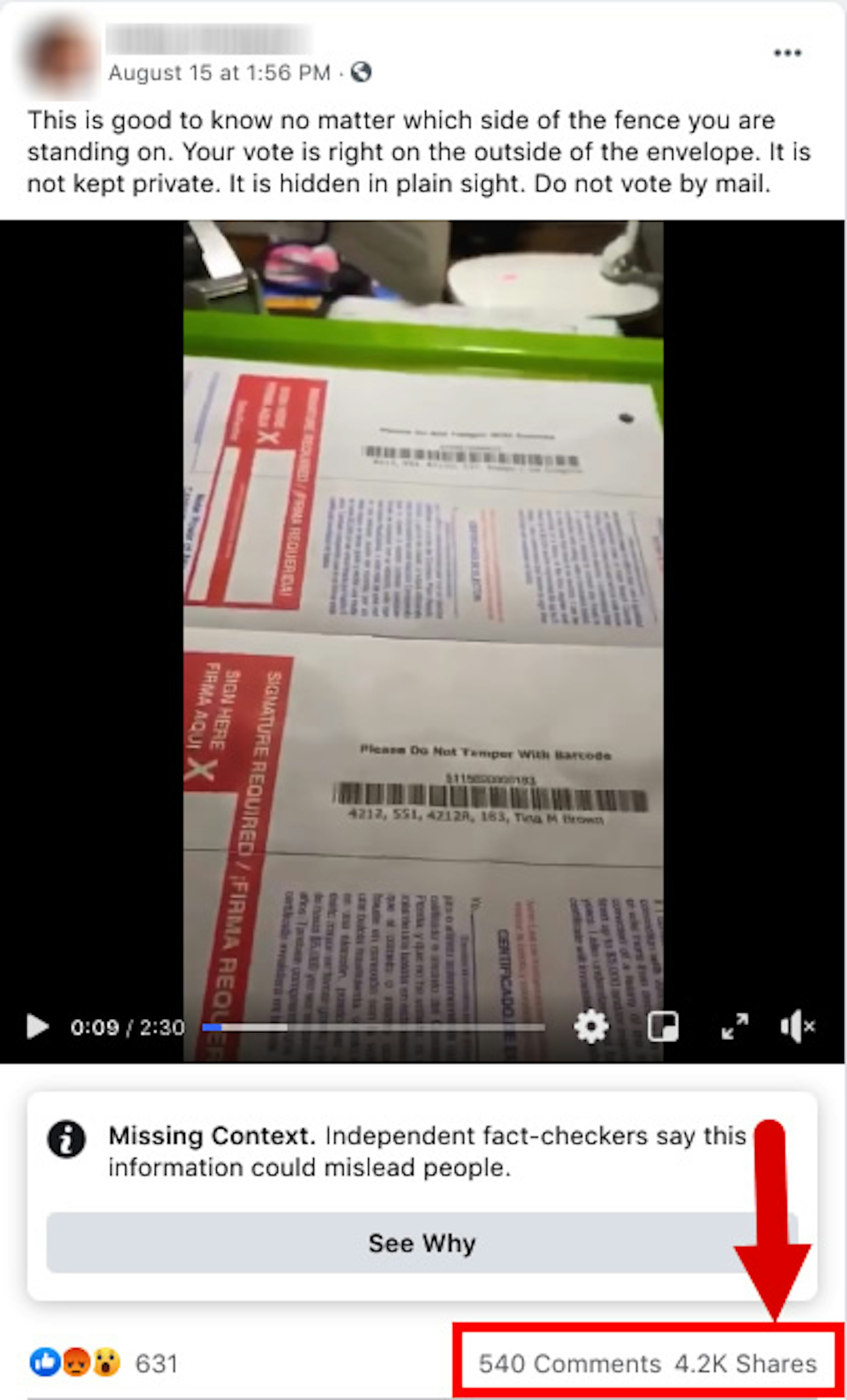

For example, one Facebook user’s post went viral in August with a false video claiming that Florida’s mail-in ballots show party affiliation, allowing postal workers to throw them out on a partisan basis. (The video shows primary, not general election, ballots, making the claim misleading, according to fact checkers.) The post has been shared more than 4,200 times and carries a “missing context” label for users logged into Facebook, but has not been removed. Meanwhile, other Facebook users have shared the same video from YouTube.

Private Facebook groups, which are missing from CrowdTangle as well, are also significant vectors for disinformation. Such groups are known to spread conspiracy theories and misinformation, and they’re not heavily moderated by Facebook.

TTP found the same misleading mail-in ballot video circulating in private Facebook groups, but Facebook didn’t consistently apply fact-check labels to those posts.

Facebook’s recently announced steps to combat election interference, such as barring new political ads the week before Election Day, don’t address the spread of disinformation in private groups or by individual users. A company announcement on Sept. 17 about its efforts to limit the spread of misinformation and harmful content in Facebook groups didn't mention elections.

States in the spotlight

Facebook is putting a heavy emphasis on its work with state officials as it highlights its efforts to combat interference with the 2020 vote.

CEO Mark Zuckerberg said Sept. 3 that Facebook is partnering with state election authorities to “identify and remove false claims about polling conditions,” adding the collaboration would continue “through the election until we have a clear result.”

“We've already consulted with state election officials on whether certain voting claims are accurate,” Zuckerberg said.

But as TTP’s investigation shows, Facebook is both leaning on state officials to flag election disinformation and not giving those same officials the data they need to spot the full range of problematic content.

At the same time, Facebook is sending mixed messages about CrowdTangle—publicly questioning its utility while privately assuring state officials of its value—raising questions about the company’s work with the states on preventing voter interference.