Facebook is failing to remove white supremacist groups and is often profiting from searches for them on its platform, according to a new investigation by the Tech Transparency Project (TTP) that shows how the social network fosters and benefits from domestic extremism.

In response to a civil rights audit that was highly critical of Facebook’s approach to racial justice issues, Facebook said last November that it had taken steps to ban “organized hate groups, including white supremacist organizations, from the platform.”

But TTP found that more than 80 white supremacist groups have a presence on Facebook, including some that Facebook itself has labelled as “dangerous organizations.” What’s more, when our test user searched for the names of white supremacist groups on Facebook, the search results were often monetized with ads—meaning Facebook is profiting off them.

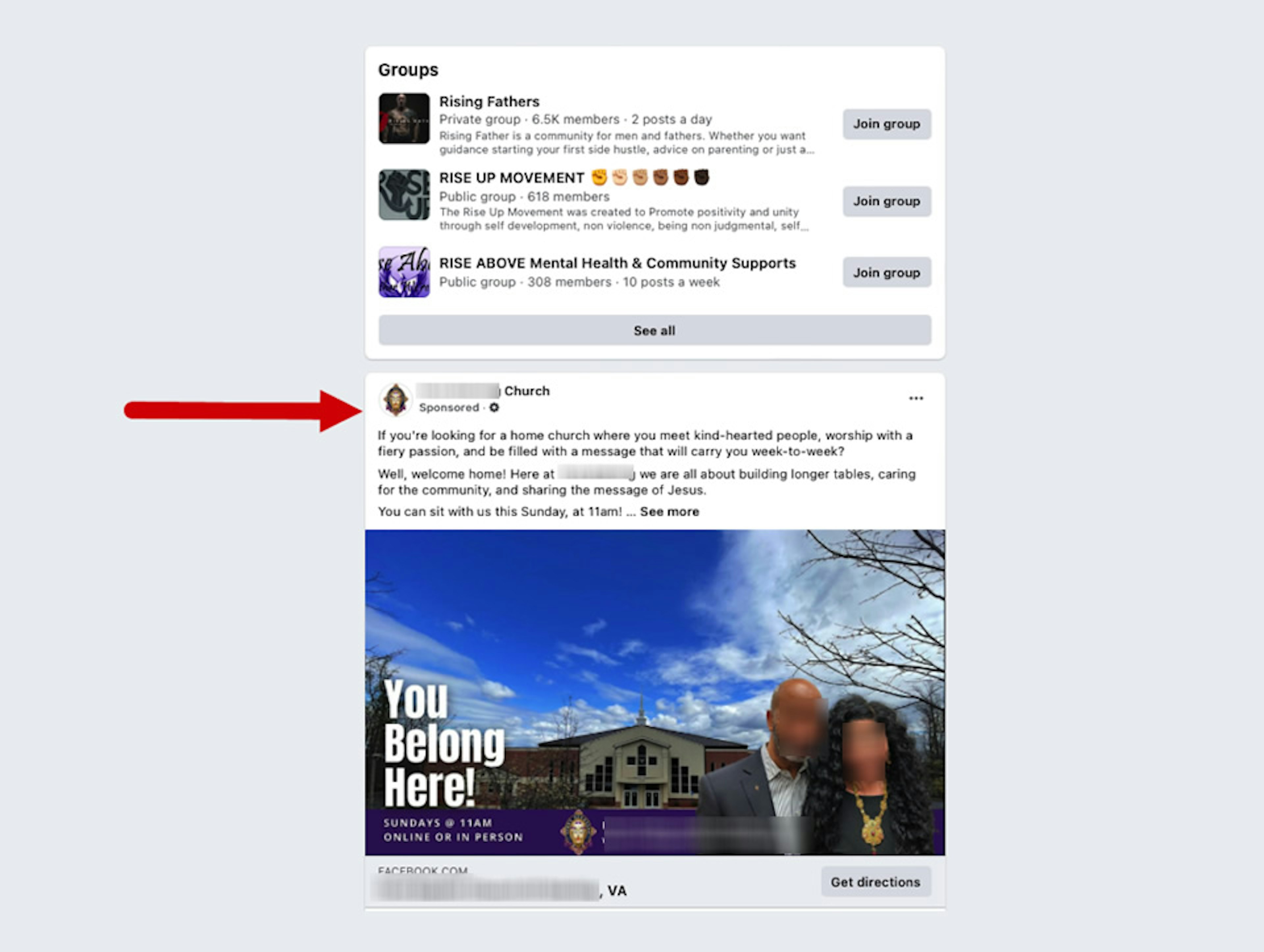

Even more disturbing: TTP discovered that Facebook searches for some groups with “Ku Klux Klan” in their name generated ads for Black churches, highlighting minority institutions to a user searching for white supremacist content.

That’s a chilling result, given reports that the gunman who killed 10 people in the recent, racially motivated mass shooting in Buffalo, New York, did research to pick a target neighborhood with a high ratio of Black residents.

The investigation also found that despite years of warnings that Facebook’s algorithmic tools are pushing users toward extremism, the platform continues to auto-generate Pages for white supremacist organizations and direct users who visit white supremacist Pages to other extremist content. On top of that, Facebook is largely failing in its effort to re-direct users searching for hate terms to websites promoting tolerance.

These findings—which echo a previous TTP report from May 2020—underscore Facebook’s inability, or unwillingness, to remove white supremacists from its platform despite the obvious dangers they pose to U.S. society. It’s another example of the often enormous gap between what Facebook tells the public it’s doing and what’s actually happening on its platform.

For this study, TTP conducted searches on Facebook for the names of 226 white supremacist organizations that have been designated as hate groups by the Southern Poverty Law Center (SPLC), the Anti-Defamation League (ADL), or Facebook itself. The Facebook-designated groups came from an internal company blacklist of “dangerous individuals and organizations” published by The Intercept in October 2021.

The analysis found:

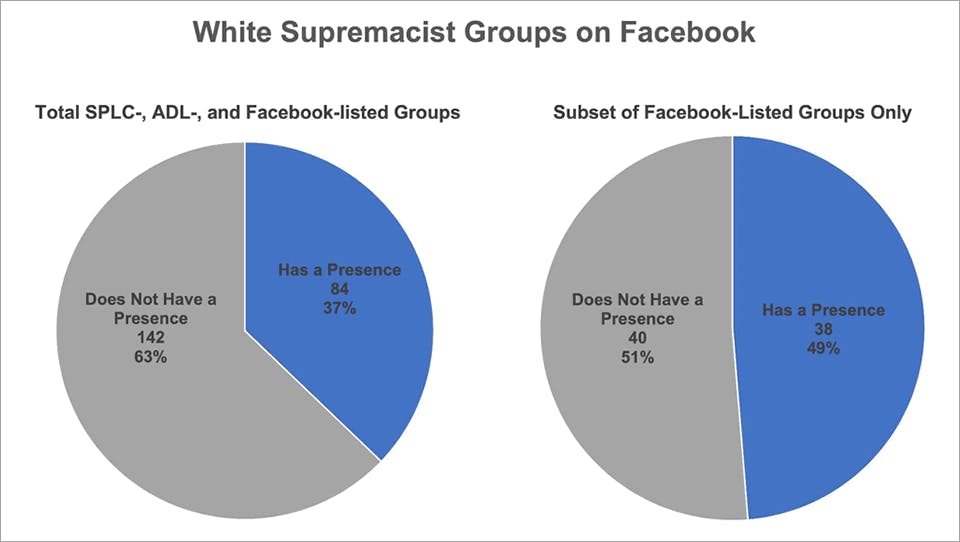

- More than a third (37%) of the 226 white supremacist groups had a presence on Facebook. These organizations are associated with a total of 119 Facebook Pages and 20 Facebook Groups.

- Facebook often monetized searches for these groups, even when their names were clearly associated with white supremacy, like “American Defense Skinheads.” Facebook also monetized searches for groups on its own “dangerous organizations” list.

- Searches for some white supremacist groups, including those with “Ku Klux Klan” in their names, showed advertisements for Black churches, raising concerns that Facebook is highlighting potential targets for extremists.

- The white supremacist Pages identified by TTP included 24 that were created by Facebook itself. Facebook auto-generated them as business Pages when someone listed a white supremacist group as an interest or employer in their profile.

- Facebook’s algorithmic recommendations often directed users visiting white supremacist Pages to other extremist or hateful content.

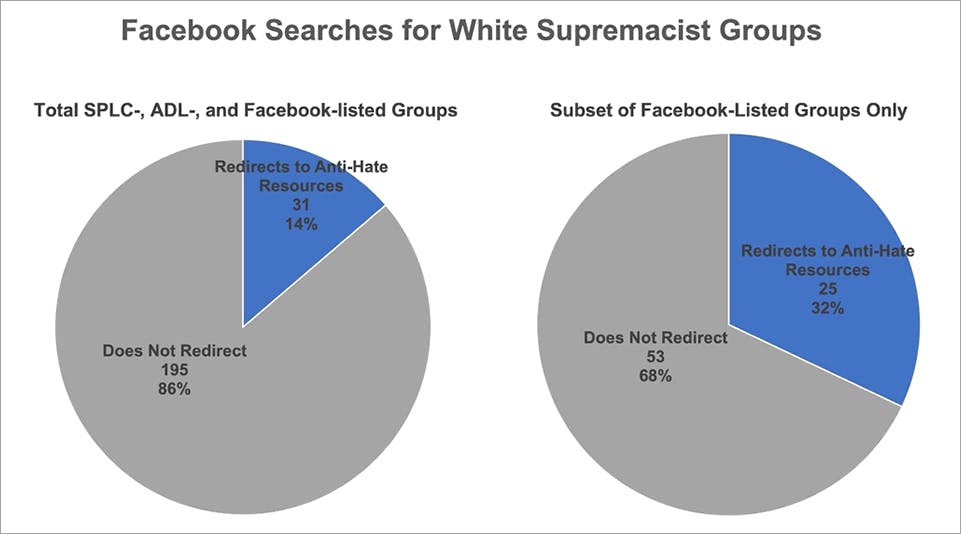

- One of Facebook’s strategies for combatting extremism—redirecting users who search for terms associated with hate groups to organizations that promote tolerance—only worked in 14% of the 226 searches for white supremacist organizations.

TTP created a visualization to illustrate how Facebook’s Related Pages connect white supremacist groups with each other and with other hateful content. [1]

‘Dangerous Organizations’

TTP identified the 226 white supremacist groups used in this investigation via the Anti-Defamation League’s (ADL) Hate Symbols Database; the Southern Poverty Law Center’s (SPLC) 2021 Hate Map, an annual census of hate groups operating in the U.S.; and Facebook’s internal list of “Dangerous Individuals and Organizations,” which was published by The Intercept in October 2021. (See more on the methodology at the bottom of this report.)

The investigation found that 37% (84) of the 226 organizations had a presence on Facebook as of June 2022. Of the 84, 45% (38) had two or more associated Pages or Groups on Facebook, resulting in a total of 119 individual Pages and 20 Groups.

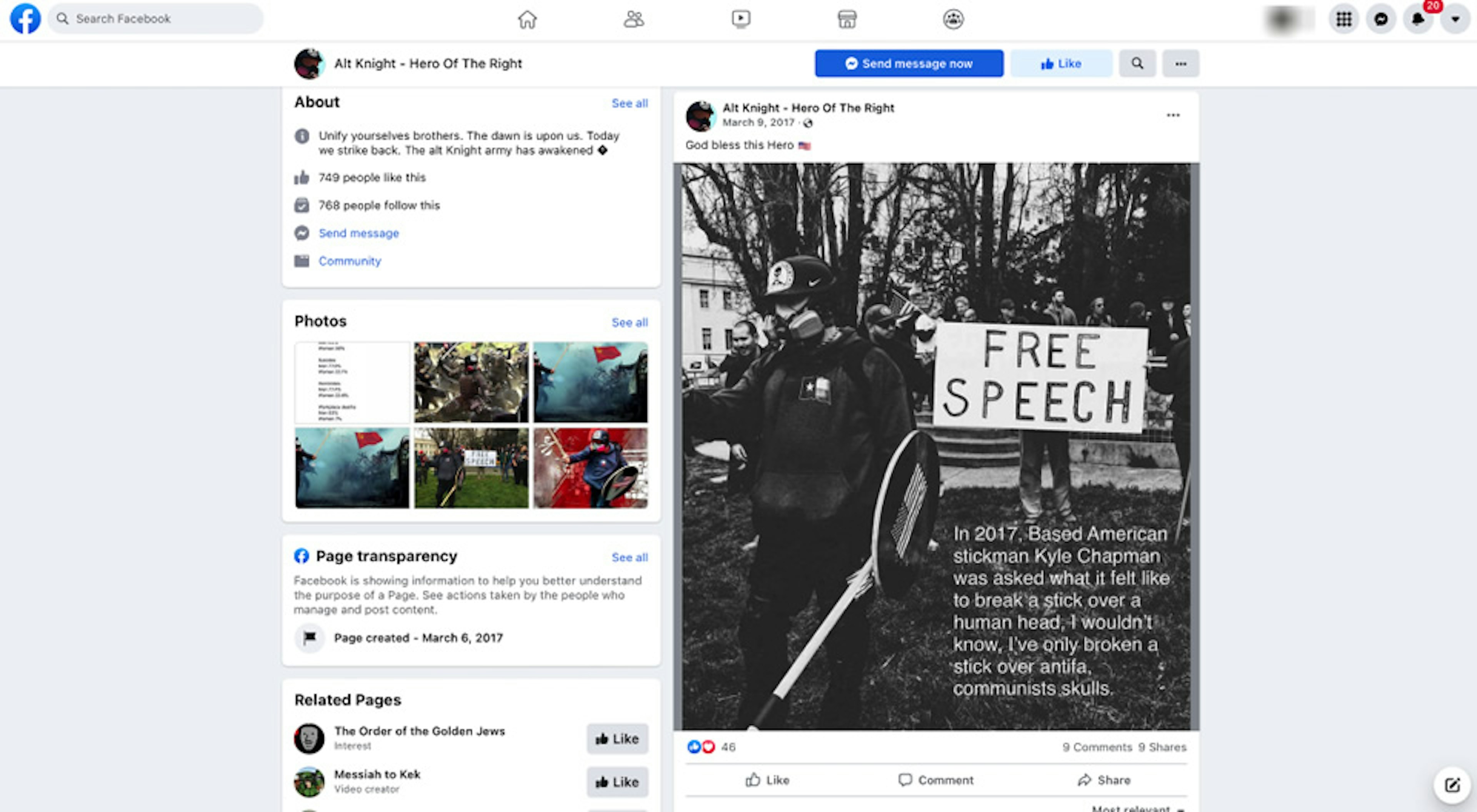

Examples include a Facebook Page associated with the Fraternal Order of Alt-Knights, a paramilitary arm of the far-right Proud Boys. The Page valorizes the Alt-Knights’ founder, white nationalist Kyle Chapman, who’s known for attacking a counter-protestor at a Trump rally in 2017. The Fraternal Order of Alt-Knights is on Facebook’s banned list of dangerous organizations.

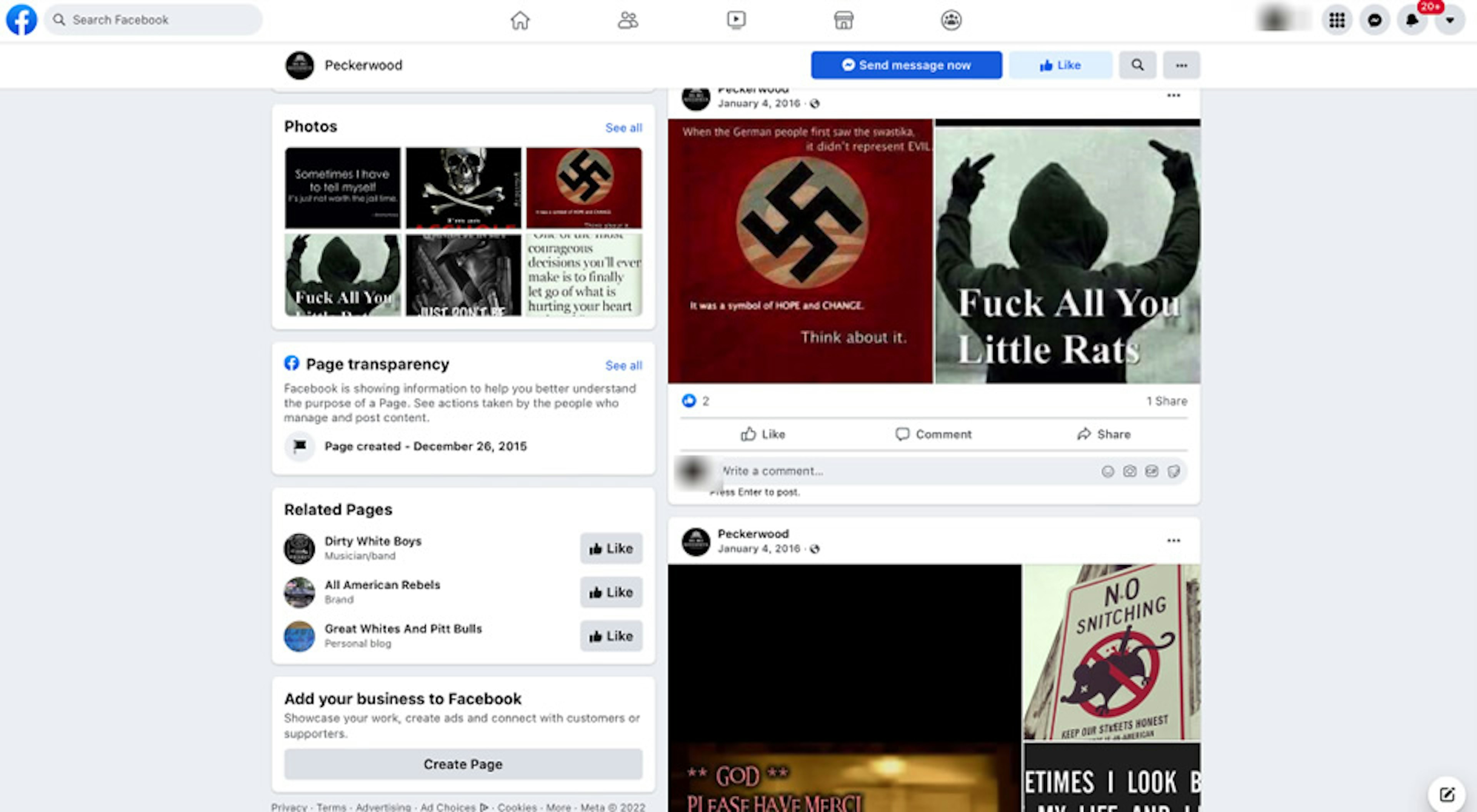

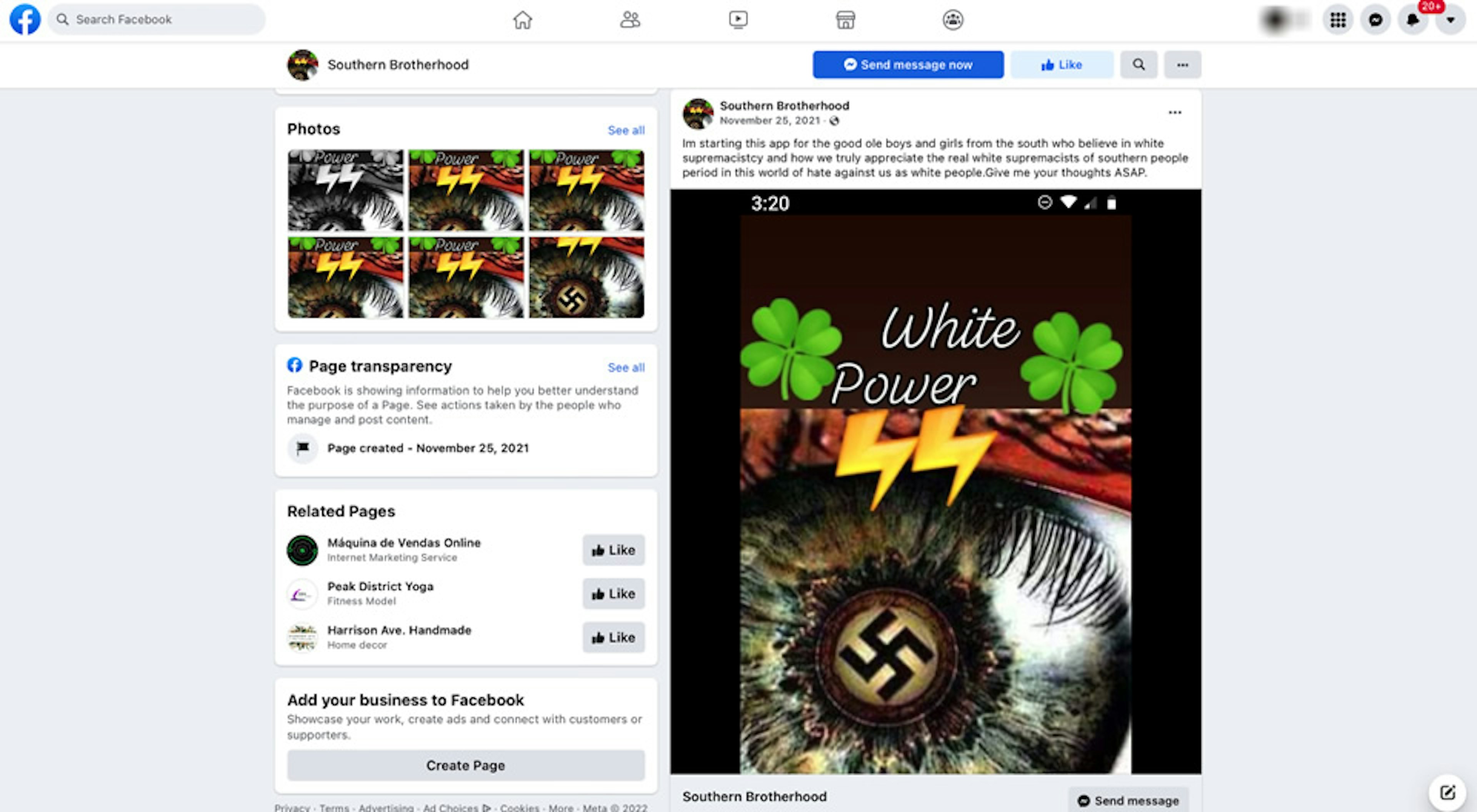

The white supremacist prison gang Peckerwood Midwest also has a presence on Facebook. One Peckerwood Page has been active since December 2015 and features images of a swastika along with anti-Semitic memes. A Facebook Page for the Southern Brotherhood, another white supremacist gang, shares similar content. The Page shows swastika images and a profile photo with the words “white power.”

Facebook has struggled with white supremacy despite banning such content for years.

The company scrambled to remove racist content following the August 2017 “Unite the Right” rally in Charlottesville, Virginia, which was organized using a Facebook event Page. The rally, which brought together white supremacist groups from across the country, turned violent and left one woman dead.

Facebook later banned praise and support for white nationalism and white separatism after the March 2019 massacre of 51 people at a pair of mosques in Christchurch, New Zealand—an event livestreamed by the shooter on Facebook.

Facebook executives have assured Congress that the company is taking action on the issue. At an October 2020 Senate hearing, CEO Mark Zuckerberg said, “If people are searching for—I think for example white supremacist organizations, which we banned those, we treat them as terrorist organizations—not only are we not going to show that content but I think we try to, where we can, highlight information that would be helpful.”

But TTP’s findings show that Facebook continues to allow dozens of white supremacist organizations to use its platform to organize and draw attention to their cause.

TTP first reported on the presence of white supremacist organizations on Facebook in May 2020, using groups identified by the Southern Poverty Law Center and Anti-Defamation League. Responding to the findings of that report, Facebook told HuffPost that it has its own process for determining which organizations are hate groups and may not always agree with the designations made by outside groups like the SPLC and ADL. As a result of that statement, TTP in this follow-up report expressly included organizations that Facebook itself has identified as hate groups on its “dangerous organizations” list.

As noted above, 37% of the full set of 226 white supremacist groups—designated by SPLC, ADL, and Facebook—have a presence on Facebook. When TTP examined just those organizations designated by Facebook itself, Facebook did worse: Nearly half—49% (38)—were present on the platform. Those groups together generated 57 Facebook Pages and 10 Groups.

Monetizing searches for white supremacy

Facebook announced in October 2019 an option for ads to appear in search results on the platform, and the company’s promotional materials assure advertisers that search ads “will both respect the audience targeting of the campaign and be contextually relevant to a limited set of English and Spanish search terms.”

As part of our investigation into white supremacist groups, we searched Facebook for each of the 226 organizations in our dataset and recorded whether the searches were monetized with ads. The results were troubling: Facebook served ads in more than 40% (91) of the searches for white supremacist groups.

The ads appearing in these white supremacist searches ranged from the Coast Guard Foundation to social shopping marketplace Poshmark to Walmart—brands that likely don’t want to be associated with white supremacy.

When TTP isolated the searches to just the groups from Facebook’s list of dangerous organizations, the rate of monetized searches was similar—39%.

Facebook does not disclose the “limited set” of search terms that produce “contextually relevant” ads. The names of some white supremacist groups in TTP’s data set include basic words like “national,” which could trigger Facebook search ads.

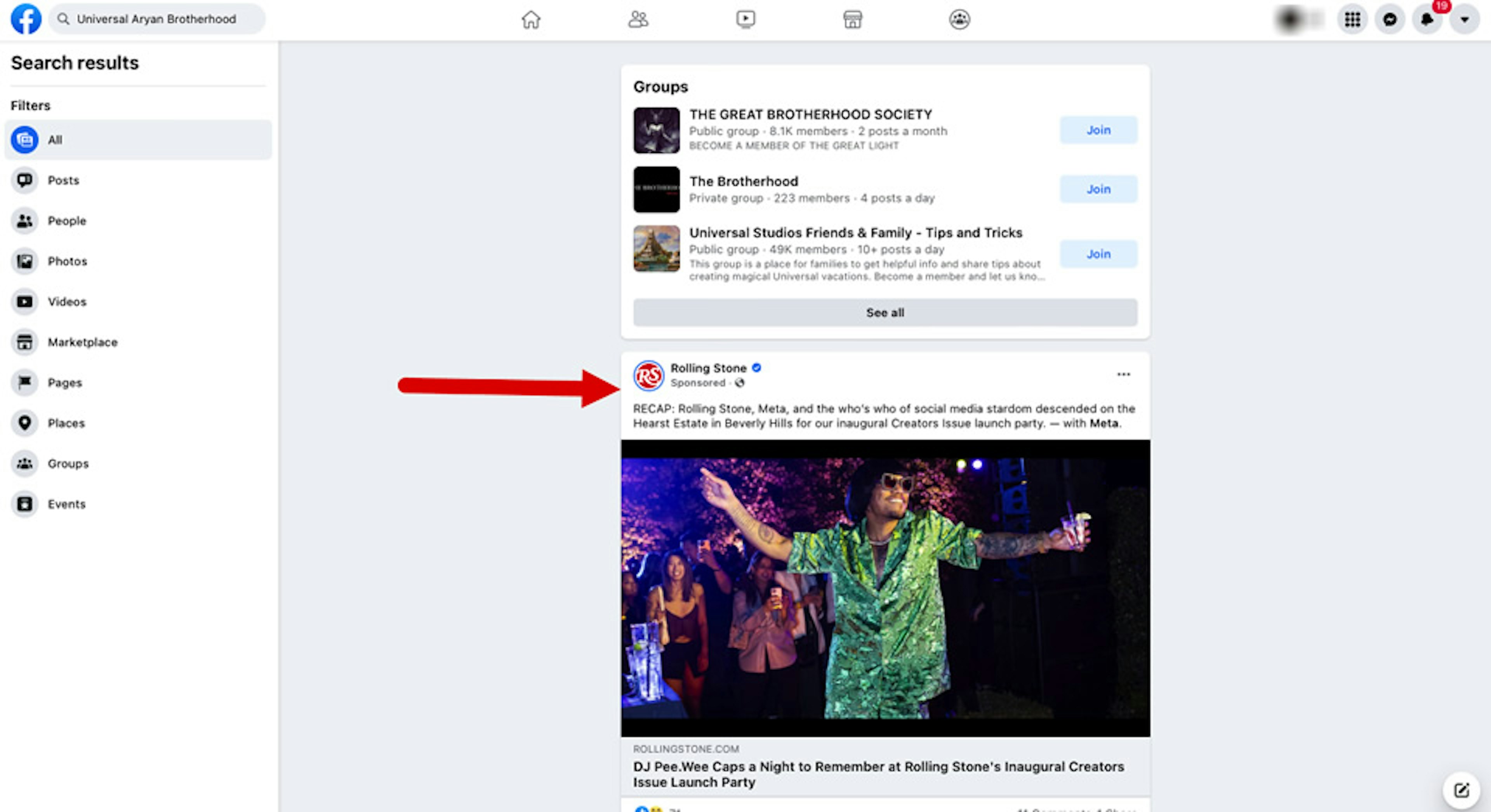

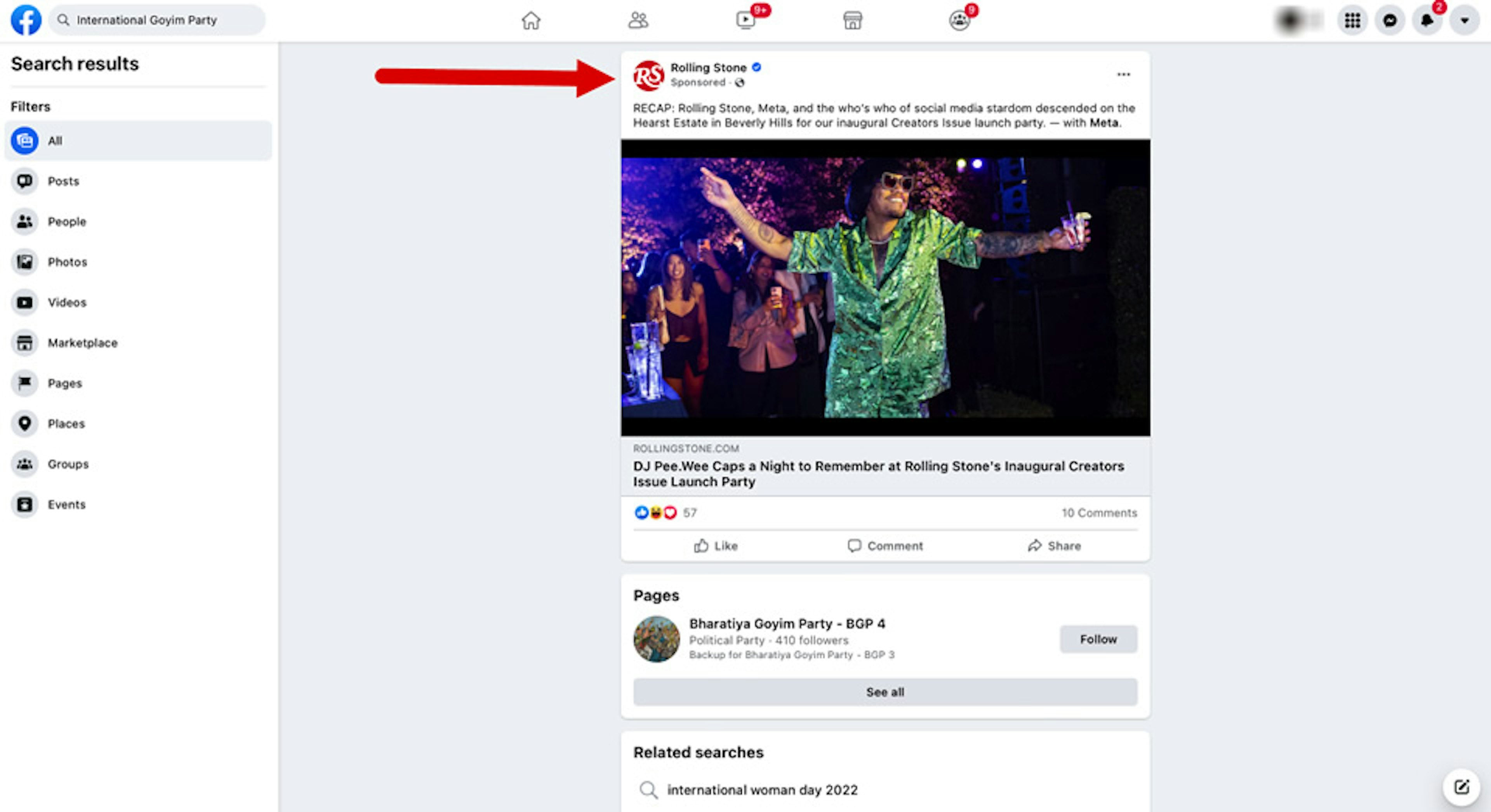

But in some cases, Facebook served ads into searches for groups whose names showed clear connections to white supremacist ideology. For example, Facebook put a Rolling Stone ad in a search for the Universal Aryan Brotherhood, a white supremacist prison gang.

The same ad appeared in a search for the International Goyim Party, a hate group that’s on Facebook’s banned list of dangerous organizations. (The ad, which publicized a Rolling Stone creators issue launch party, actually tagged Facebook parent company Meta.)

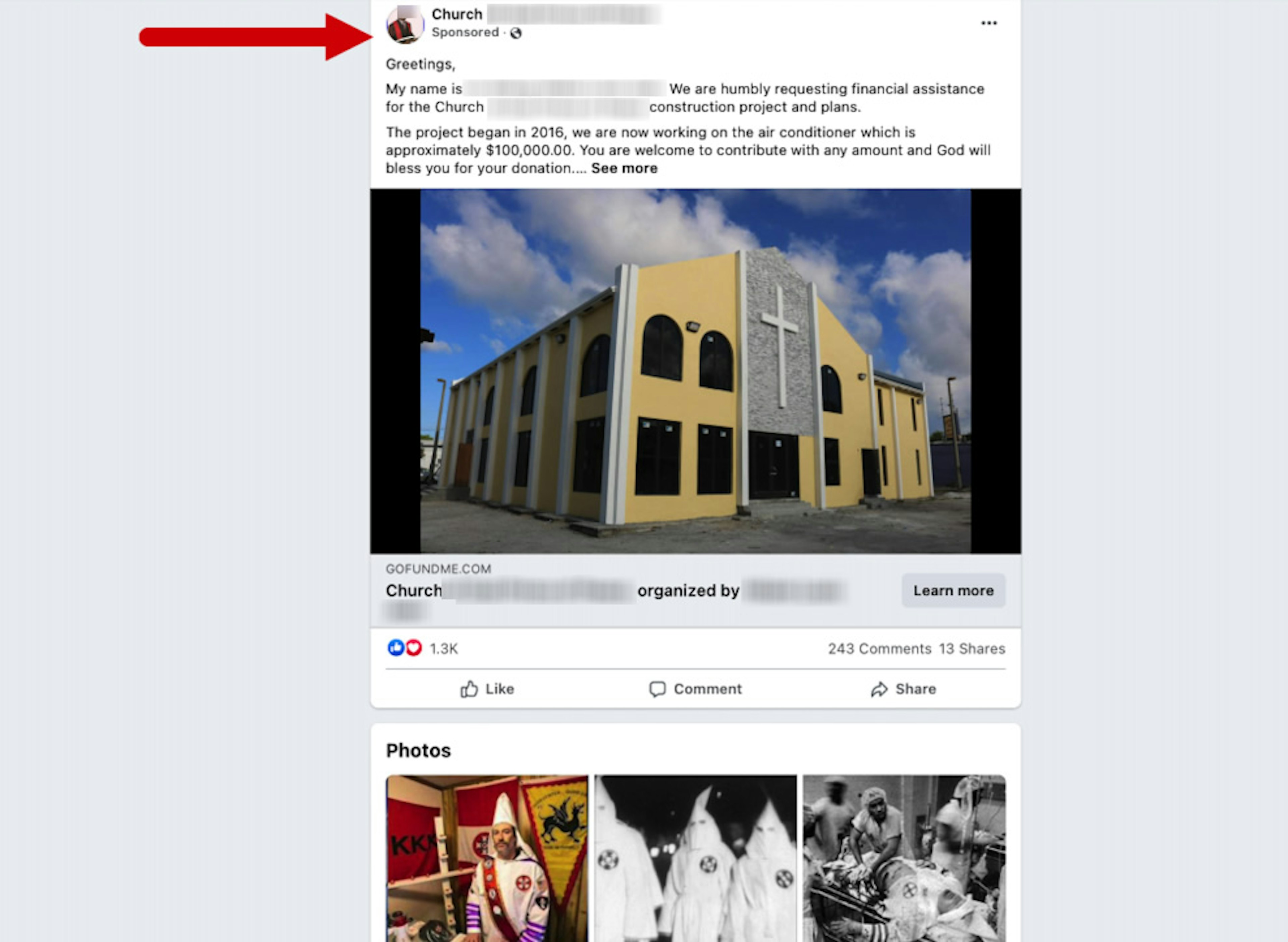

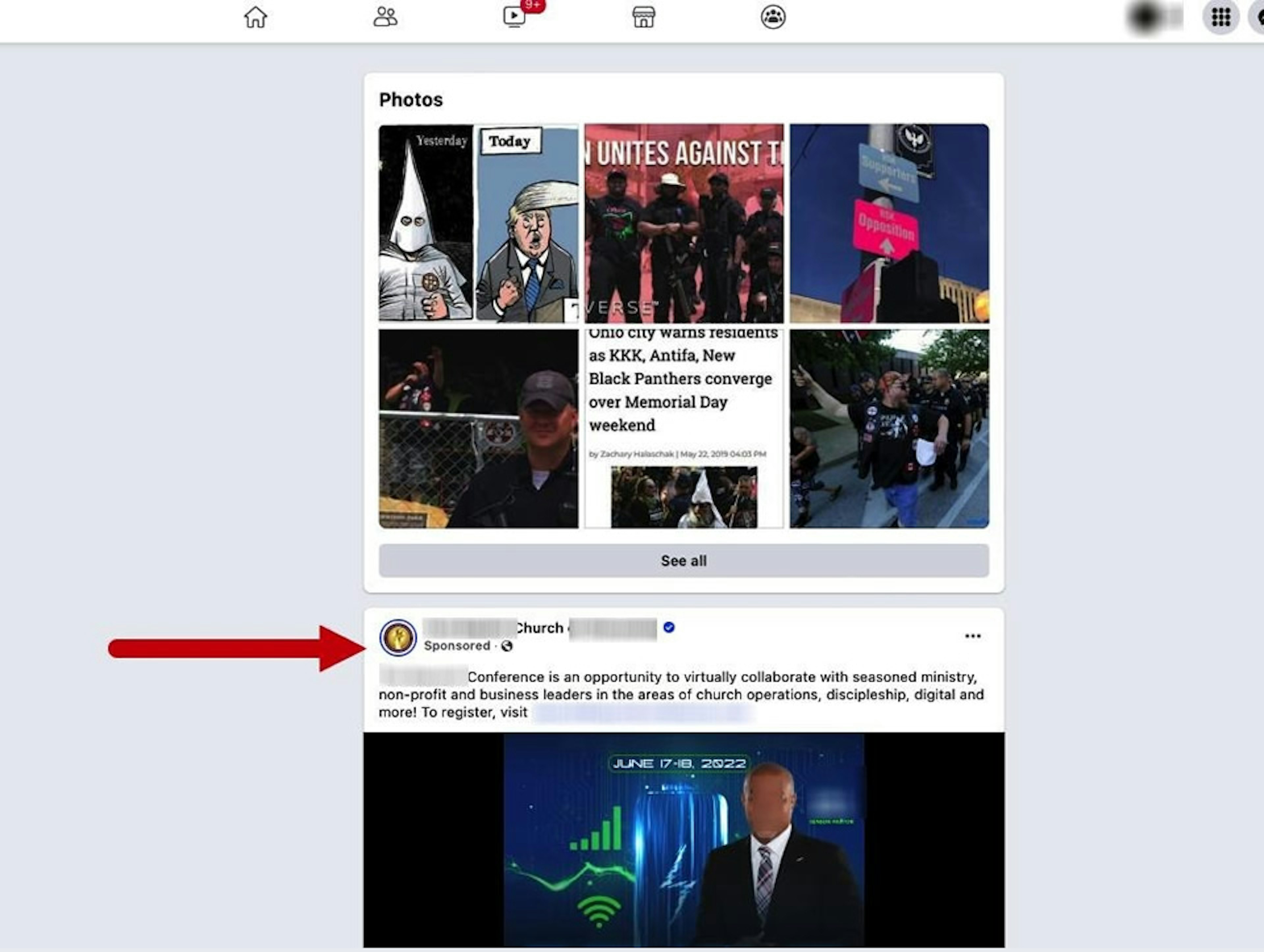

TTP even found instances of Facebook running ads for Black churches, a Black-owned business, and a Juneteenth promotion in search results for white supremacist groups, including some that had the phrase “Ku Klux Klan” in their name.

For example, a Facebook search for “Honorable Sacred Knights of the Ku Klux Klan,” an SPLC-designated white supremacist group, was monetized with an ad for a conference held by a predominantly Black church in southern Maryland. TTP is not naming the churches in the ads to protect the safety of the churches and their congregations.

Meanwhile, a search for “Church of the National Knights of the Ku Klux Klan” was monetized with an ad raising construction funds for a Haitian church in Miami, Florida.

Another search for the “Rise Above Movement,” a Southern California white supremacist organization designated as a hate group by ADL, SPLC, and Facebook itself, surfaced ads for a historically Black church in northern Virginia. Multiple members of the Rise Above Movement received prison sentences for their role in the violence at the “Unite the Right” rally in Charlottesville.

These ad placements raise questions about how Facebook defines “contextually relevant” search ads. They also raise concerns that Facebook is serving up potential targets to individuals who are looking to connect with white supremacist groups on the platform.

The U.S. has seen a string of racially motivated mass shootings in recent years. In 2015, white supremacist Dylann Roof killed nine Black members of a Charleston, South Carolina, church. More recently, a shooter in Buffalo researched Black communities online to identify targets before killing 10 at a supermarket, according to officials.

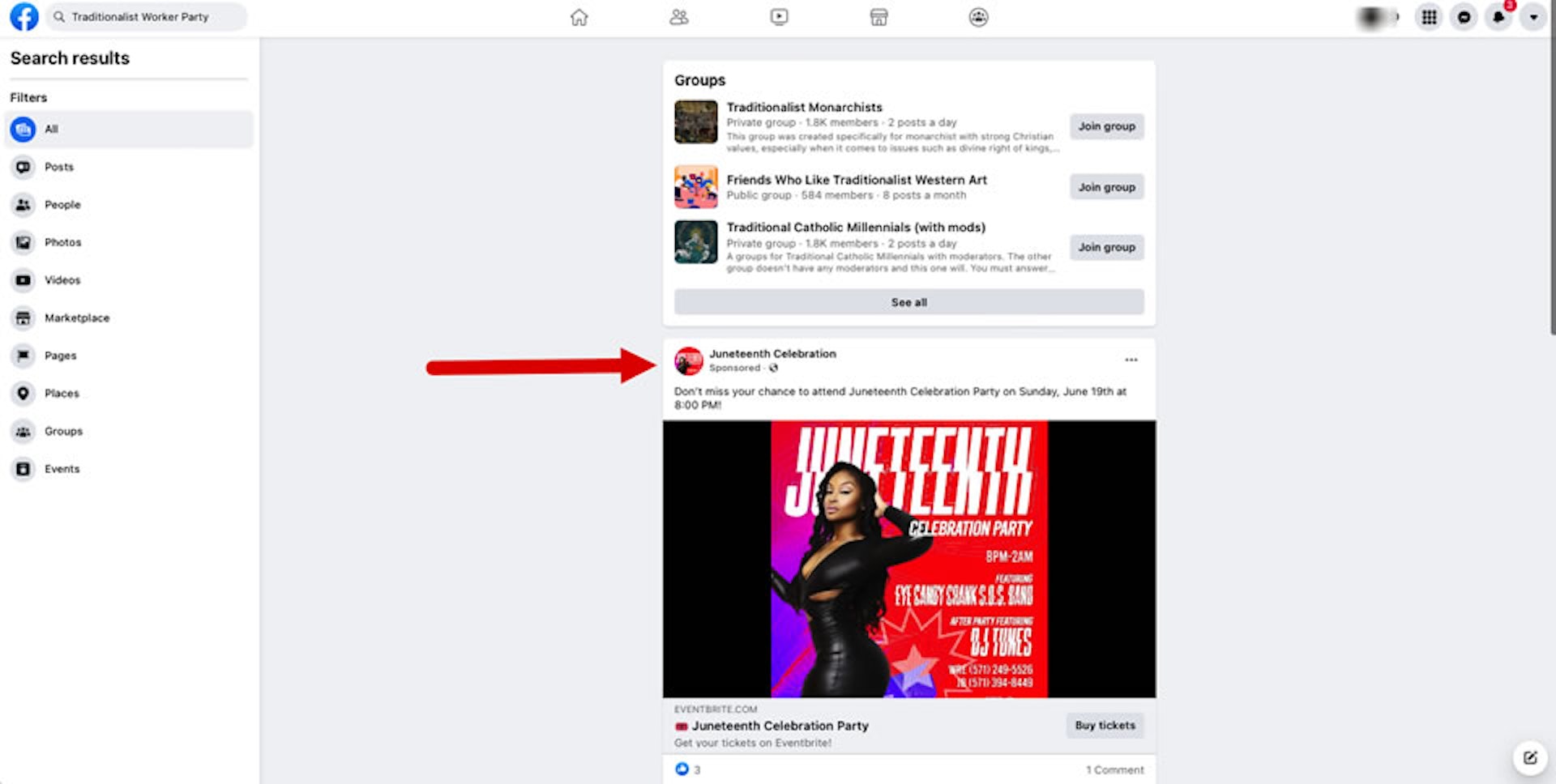

TTP also found that a Facebook search for the “Traditionalist Worker Party” (a neo-Nazi group that advocated for racially pure nations, according to the SPLC) surfaced ads for a Juneteenth celebration marking the end of slavery in the U.S. The Traditionalist Worker Party is on Facebook’s own list of dangerous organizations, and its leader was among the dozens charged with conspiracy to commit racially motivated violence at the Unite the Right rally.

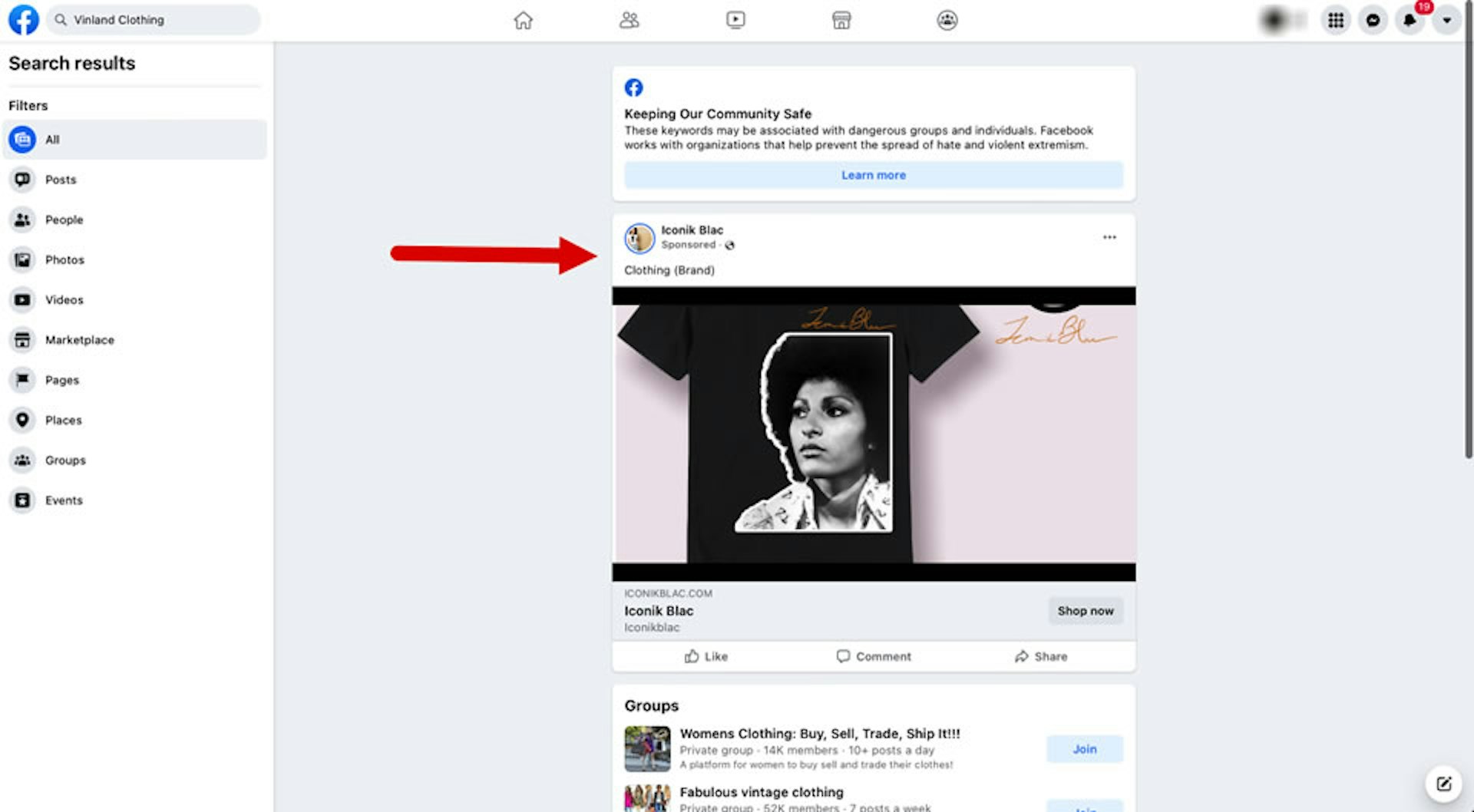

In another case, Facebook monetized a search for “Vinland Clothing,” a California-based racist skinhead group listed by both the SPLC and Facebook. Facebook flashed a message on the first page of search results for this group, warning, “These keywords may be associated with dangerous groups and individuals,” and pointing the user to anti-hate organizations. But Facebook still served up ads on the search—including one for a Black-owned clothing company called Iconik Blac that offers T-shirts of “black icons and cultural figures.”

It's clear from this example that Facebook considered the search term “Vinland Clothing” to be dangerous enough to issue a warning to users, but the company allowed the search to be monetized with ads anyway.

Facebook is not alone in its monetization of searches for hate groups. TTP also found that Google served ads on some searches for the same set of 226 white supremacist organizations, though at a much lower rate of 12% (27). Facebook’s rate of monetization was more than three times as high.

Facebook is still creating Pages for hate groups

Facebook isn’t just a passive host for white supremacist groups. In fact, the platform is often creating content for them.

Of the 119 Facebook Pages for white supremacist groups identified in this investigation, 20% (24) were auto-generated by Facebook itself. More than half of those auto-generated Pages were for groups that appear on Facebook’s own dangerous organizations list.

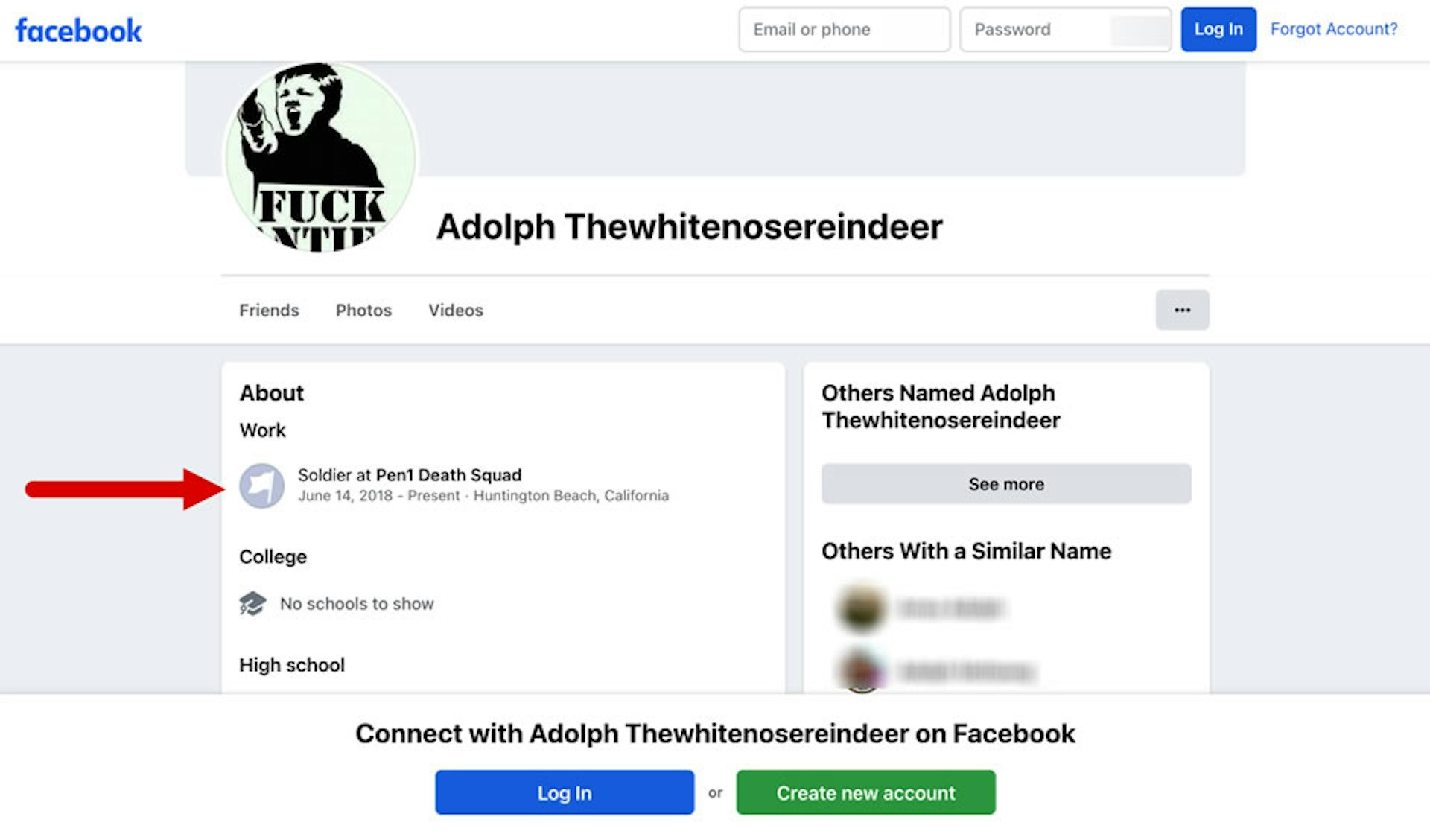

Facebook automatically creates these Pages when a user lists a job, interest, or business in their profile that does not have an existing Page. For example, when a user going by the name “Adolph Thewhitenosereindeer” listed their work position as “soldier” at “Pen1 Death Squad,” Facebook automatically generated a Page for that hate group. Pen1 Death Squad is shorthand for “Public Enemy Number 1,” an ADL-designated white supremacist gang.

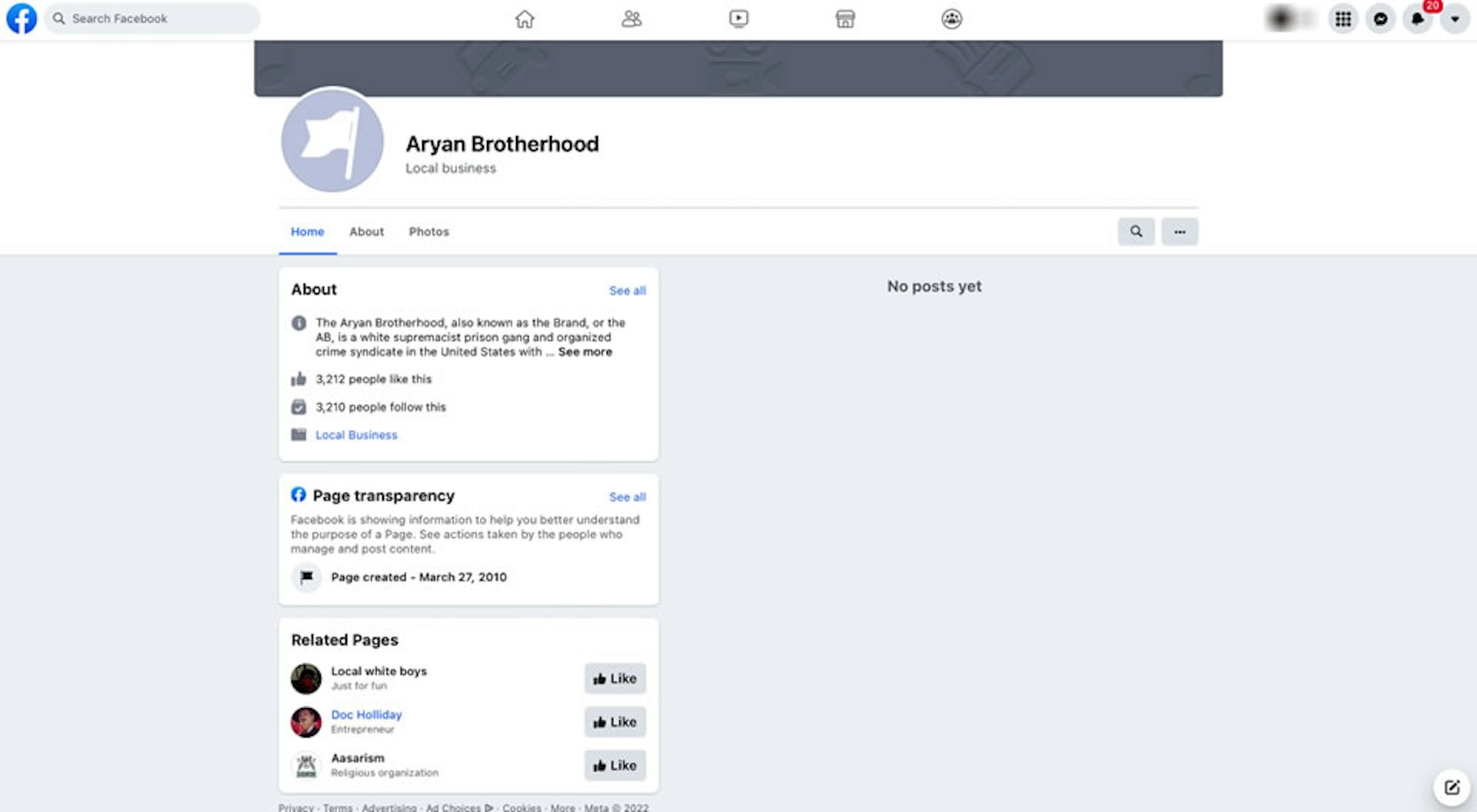

This has been a longstanding problem for Facebook. Some Facebook-generated white supremacist Pages have been around for years, amassing thousands of “likes.” One of the auto-generated Pages with the most “likes” in TTP’s analysis was for the Aryan Brotherhood, America’s oldest major white supremacist prison gang. The auto-generated Aryan Brotherhood Page was created on March 27, 2010. In the more than 12 years the Page has been active, it has racked up over 3,200 “likes.”

TTP and other organizations have repeatedly raised the alarm about Facebook’s auto-generation of Pages for extremist groups. The issue first came to light in April 2019 when an anonymous whistleblower filed a Securities and Exchange Commission (SEC) petition highlighting Facebook’s practice of auto-generating business Pages for terrorist organizations, white supremacist groups and Nazis.

The following year, TTP identified dozens of white supremacist Pages that were auto-generated by Facebook. TTP has also documented how Facebook auto-generates Pages for militia groups, including those affiliated with the anti-government Three Percenters, whose followers have been indicted on charges related to the U.S. Capitol attack on Jan. 6, 2021.

Lawmakers have pressed Facebook executives on the platform’s creation of Pages for extremist groups. At a November 2020 hearing, Sen. Dick Durbin (D-Ill.) asked Zuckerberg about Facebook’s white supremacist content, including auto-generated material: “Are you looking the other way, Mr. Zuckerberg, in a potentially dangerous situation?”

The CEO didn’t directly address the auto-generation issue and instead recycled familiar Facebook talking points:

“No Senator, this is incredibly important and we take hate speech as well as incitement of violence extremely seriously. We banned more than 250 white supremacist organizations and treat them the same as terrorist organizations around the world. And we’ve ramped up our capacity to identify hate speech and incitement of violence before people even see it on the platforms.”

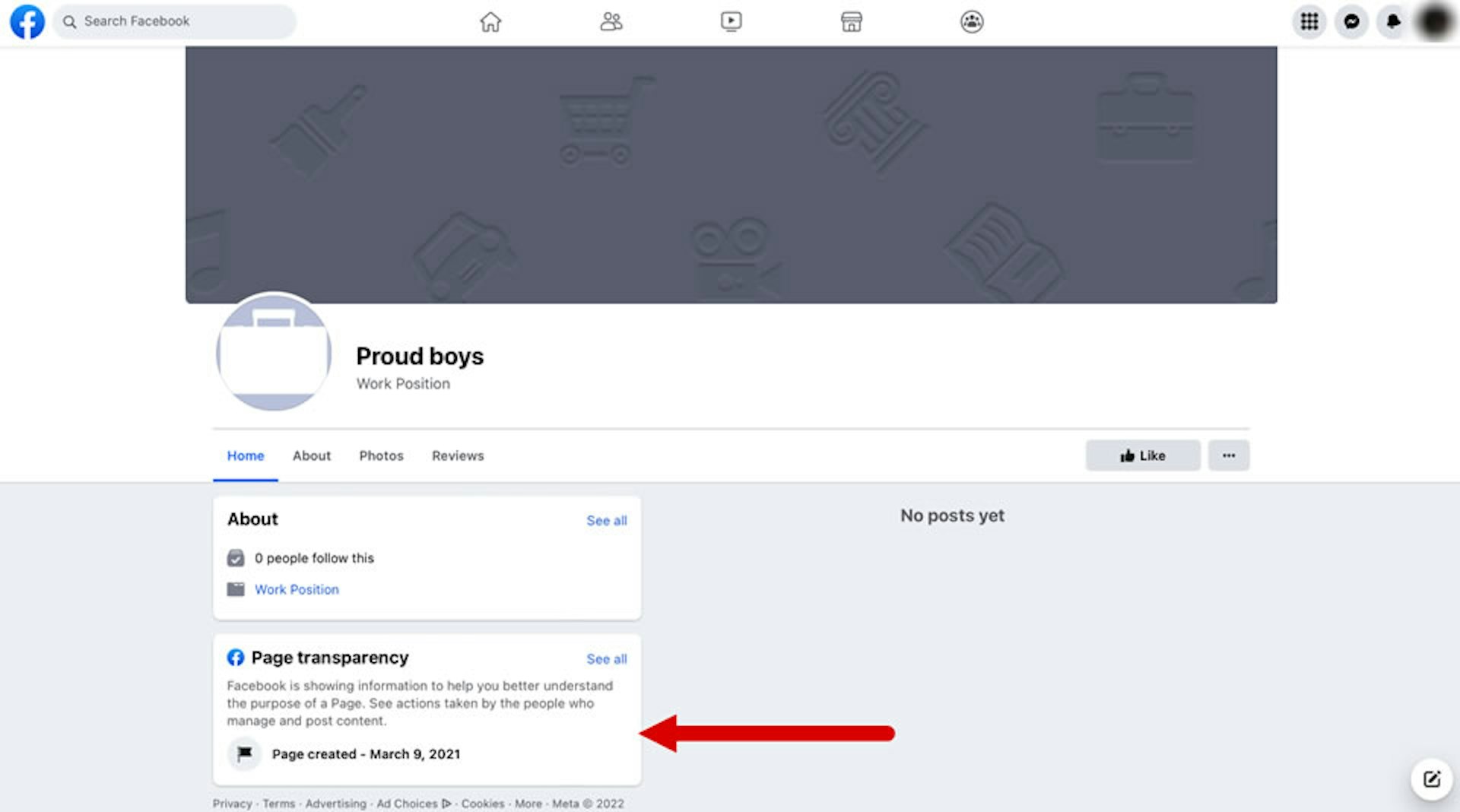

It's not clear if Facebook has done much—if anything—about this auto-generation problem. Case in point: TTP’s new investigation identified a Proud Boys Page that was generated by Facebook on March 9, 2021, weeks after Proud Boys members were indicted in connection with the Jan. 6 attack on the Capitol. Facebook created this Page even though it banned the far-right Proud Boys in October 2018.

As Facebook has come under increasing pressure over auto-generation of Pages, the company has removed various pieces of information about the practice from its website, TTP found.

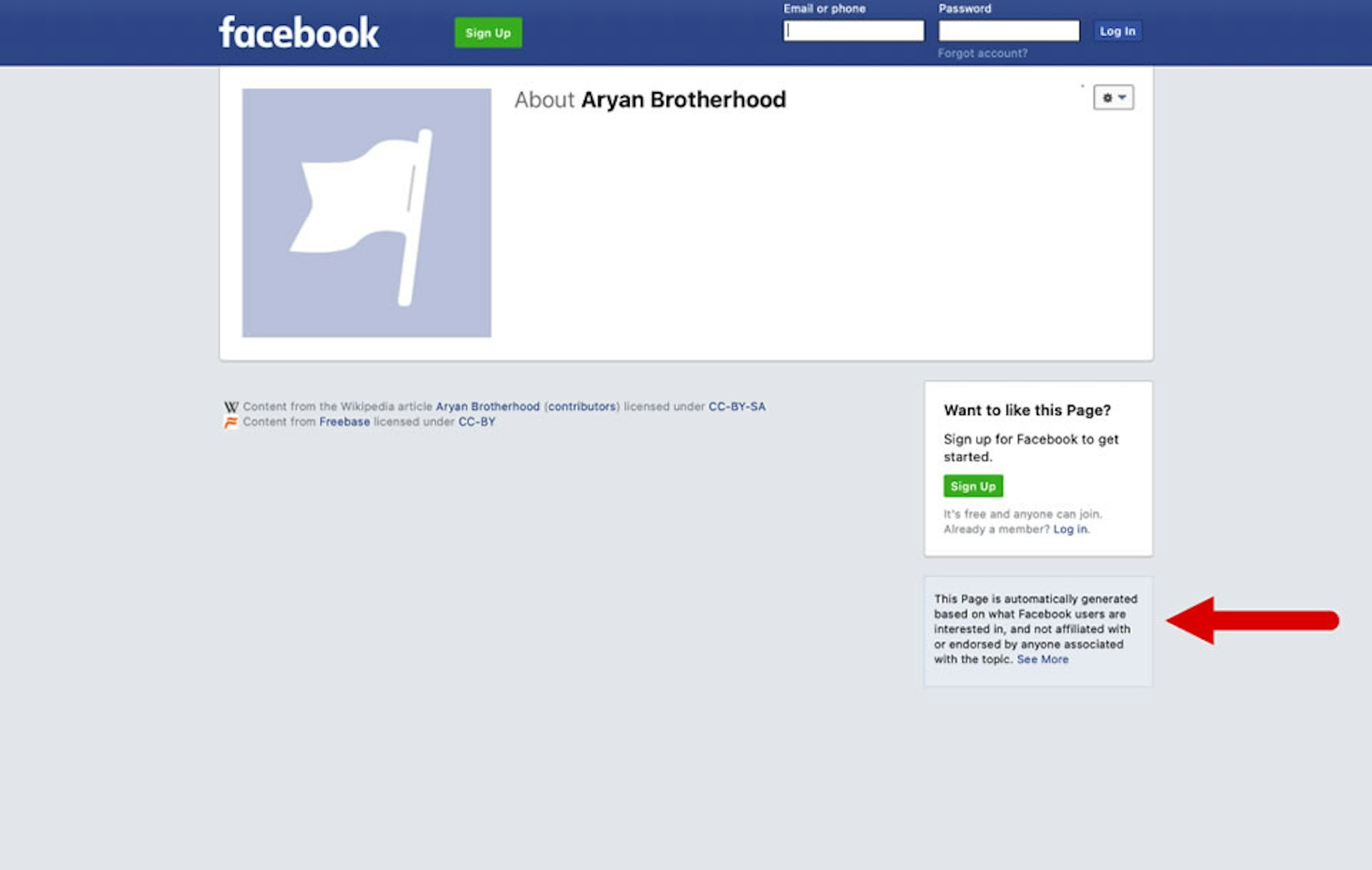

For example, Facebook has stopped labelling auto-generated Pages, making it harder for users to discern whether the platform created them. Facebook previously noted in the bottom right corner of an auto-generated Page’s cover image that it was an “Unofficial Page,” linking to an explanation that it was created because people on Facebook showed interest in that place or business. But the label appears to have disappeared from auto-generated Pages following a major redesign of the platform’s interface that took place in 2020.

TTP discovered, however, that if a user is logged out, Facebook defaults to the platform’s old layout, which still includes a label on auto-generated content, as seen in the screenshot below.

Meanwhile, a Facebook help post on how to claim an auto-generated Page has disappeared.

The help post read, in part:

“A Page may exist for your business even if someone from your business didn’t create it. For example, when someone checks into a place that doesn't have a Page, an unmanaged Page is created to represent the location. A Page may also be generated from a Wikipedia article.”

Facebook removed this post sometime after Jan. 7, 2020, which is the last date the content was captured in the Internet Archive. The platform’s help section no longer contains any mention of unofficial Pages.

It’s not clear why Facebook has made these changes, but auto-generation raises some legal liability questions for the company. Tech platforms including Facebook have relied heavily on a federal law, Section 230 of the Communications Decency Act (CDA), to shield them from lawsuits over third-party content posted by users. But a court or the federal government could determine in the future that Facebook’s auto-generation of hate content is not protected by Section 230, because Facebook is itself creating it.

Facebook appears to be alone among the major tech companies in engaging in auto-generation of content; TTP has found no other example of the practice on other platforms.

Related Pages: Facebook’s extremist echo chamber

Facebook’s algorithmic “Related Pages” feature suggests similar Pages to users to keep them engaged on the platform. But this feature has the potential to radicalize users who visit one extremist Page and get pushed toward other extremist material.

Using a new Facebook account and a clean browser, TTP “liked” the 119 Facebook Pages associated with white supremacist groups—and found that in 58% (69) of the cases, Facebook displayed Related Pages, often pointing to other extremist or hateful content.

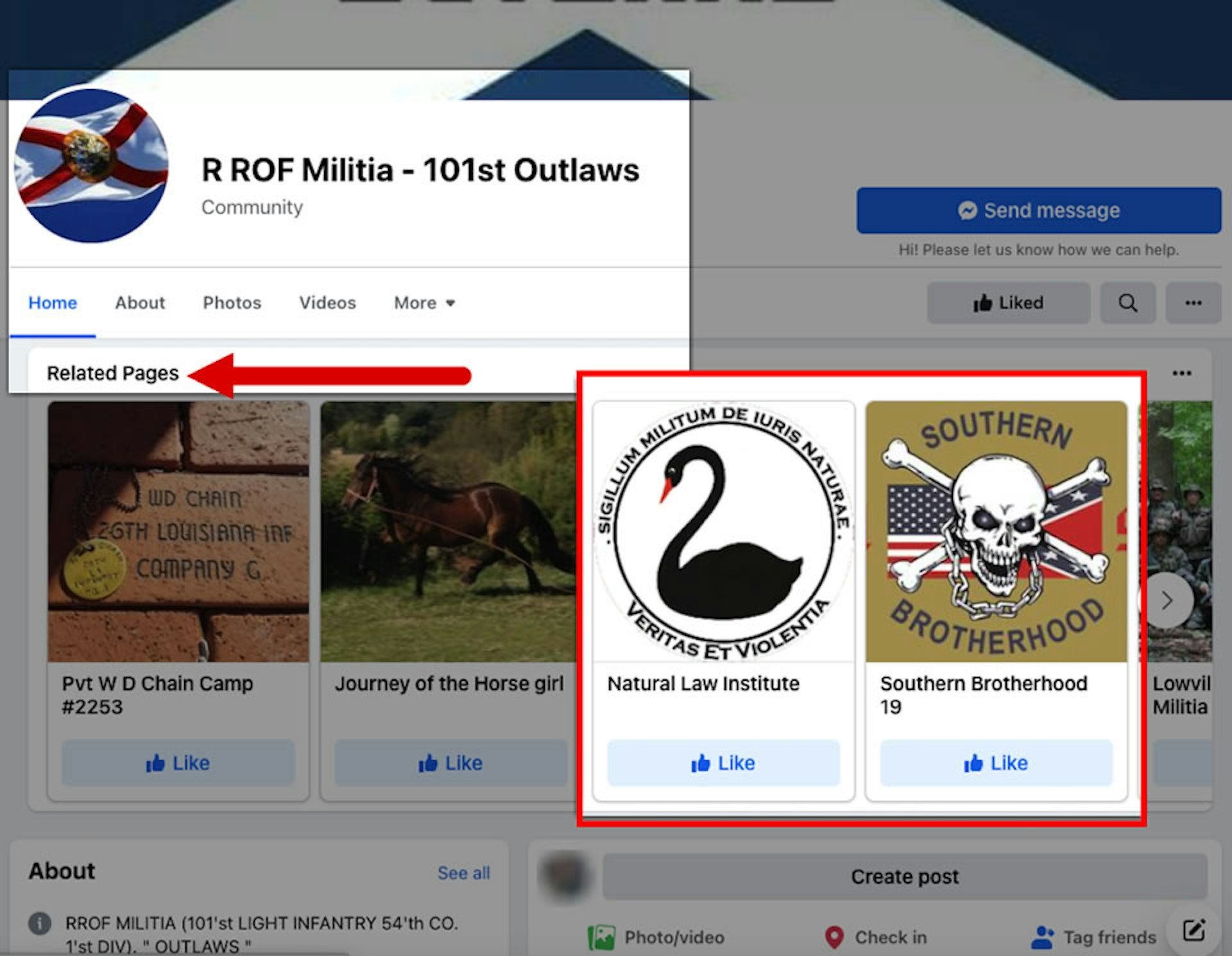

Take the Page for “R ROF Militia – 101st Outlaws.” The ROF in the name stands for “Republic of Florida,” a white supremacist group that appears on Facebook’s banned list. It generated Related Pages for the Southern Brotherhood white supremacist prison gang and the Natural Law Institute, which is linked to the Propertarian Institute, a self-described “anglo-conservative think tank” that is on Facebook’s banned hate groups list.

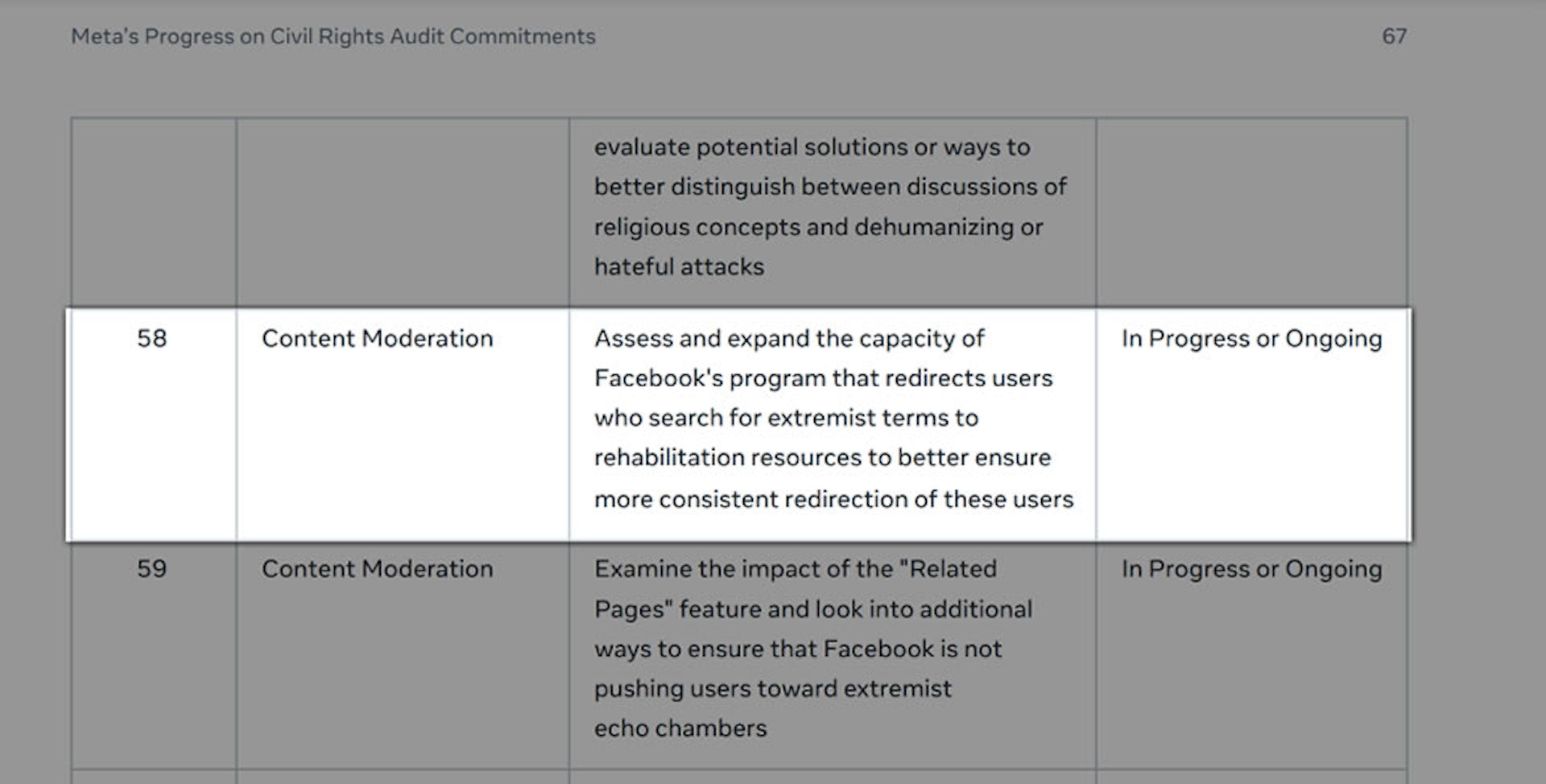

TTP first explored this Related Pages problem in its May 2020 report on Facebook and white supremacy. That same year, Facebook’s civil rights audit cited TTP’s findings and recommended that Facebook take action on the issue:

“[T]he Auditors urge Facebook to further examine the impact of the [Related Pages] feature and look into additional ways to ensure that Facebook is not pushing users toward extremist echo chambers … [and] urge Facebook to take steps to ensure its efforts to remove hate organizations and redirect users away from (rather than toward) extremist organizations efforts are working as effectively as possible, and that Facebook’s tools are not pushing people toward more hate or extremist content.”

But it’s not clear if Facebook has done anything to fix the problem. In November 2021, the company said its work to address the auditors’ recommendation on Related Pages was “In Progress or Ongoing,” more than a year after the audit was published.

Failing to direct away from hate

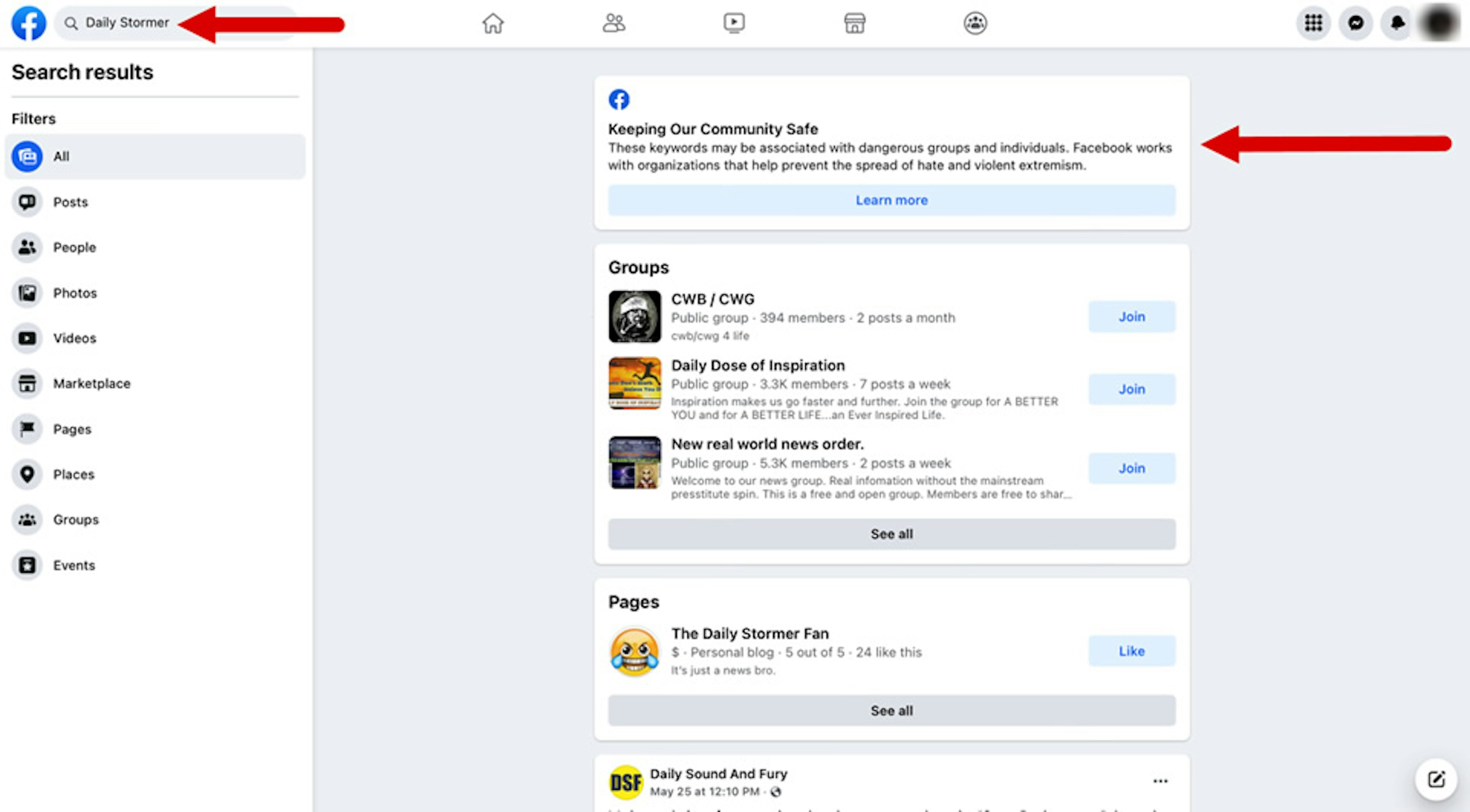

Facebook in March 2019 announced an effort to redirect users searching for white supremacy content to anti-hate resources. The move followed the Christchurch attack, in which a gunman used Facebook to livestream the massacre of 51 people at two mosques in New Zealand.

“Searches for terms associated with white supremacy will surface a link to Life After Hate’s Page, where people can find support in the form of education, interventions, academic research and outreach,” Facebook said at the time.

But TTP’s investigation found that only 14% (31) of Facebook searches for the 226 white supremacist organizations triggered this redirect link.

When the groups were limited to just those 78 categorized by Facebook as dangerous organizations, Facebook’s redirect rate was somewhat better at 32% (25), but the link still failed to surface in roughly two-thirds of the searches.

Even groups with “Ku Klux Klan” in their names escaped the redirect effort. Of the 11 groups with Ku Klux Klan in the name, only one triggered the redirect to Life After Hate—on a search that was also monetized.

TTP’s May 2020 investigation of Facebook’s problems with white supremacy also found a low redirect rate on searches for such content (6%). The findings were cited in the company’s civil rights audit, which recommended that Facebook ensure the redirect program is applied more consistently: “The Auditors encourage Facebook to assess and expand capacity … to better ensure users who search for extremist terms are more consistently redirected to rehabilitation resources.”

Again, it’s not clear if Facebook has done anything on the issue. The company in November 2021 described its work on the redirect program as “In Progress or Ongoing.”

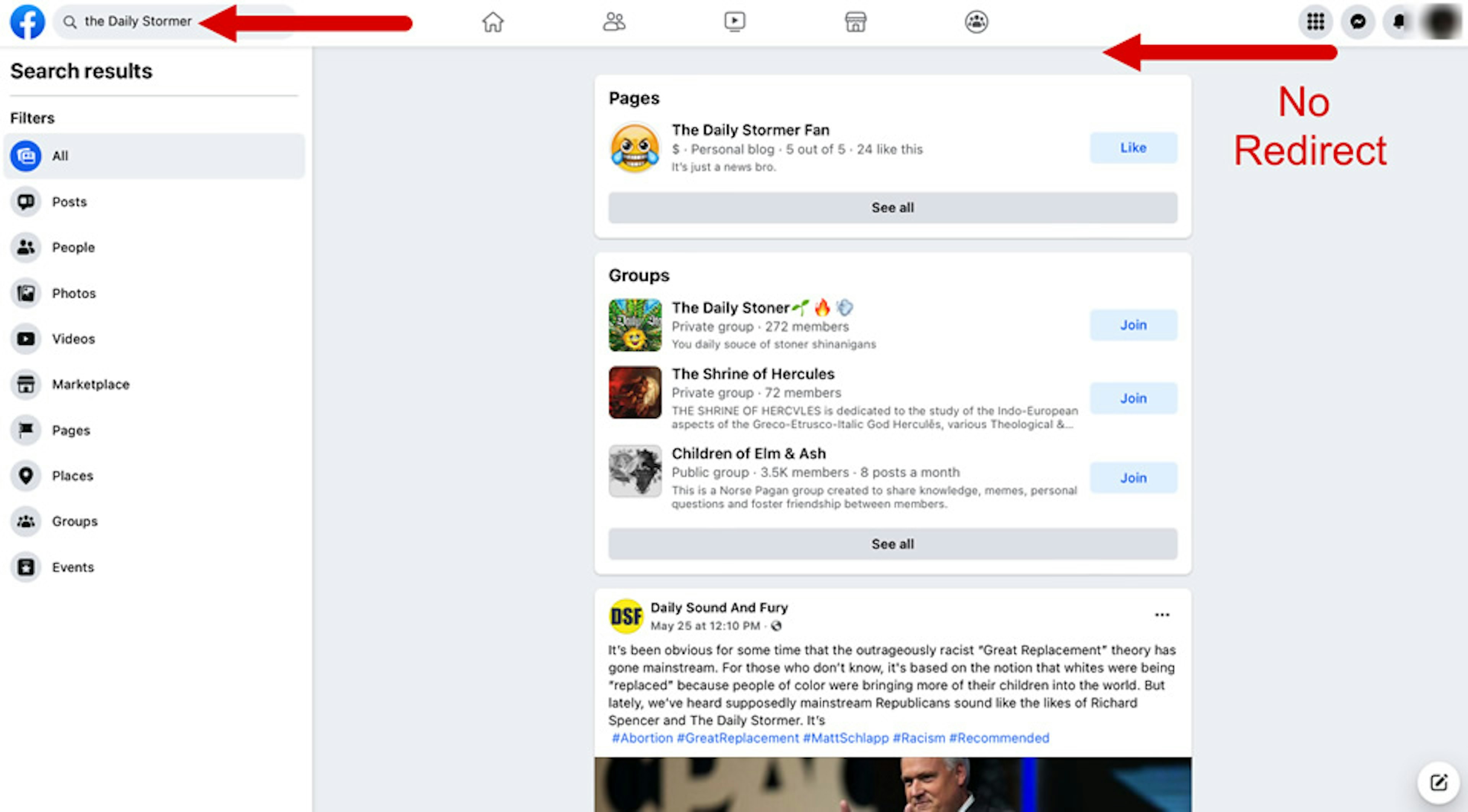

TTP’s research also found that Facebook’s redirects can sometimes be thrown off by tiny variations in the search terms.

For instance, a search for “Daily Stormer,” a neo-Nazi website listed by the ADL, SPLC, and Facebook itself, triggered a redirect to Life After Hate. But when our test user searched for “the Daily Stormer”—with a “the” in front of the name—it did not trigger the redirect link. The fact that redirects can be derailed by a stray article raises additional questions about the feature’s effectiveness.

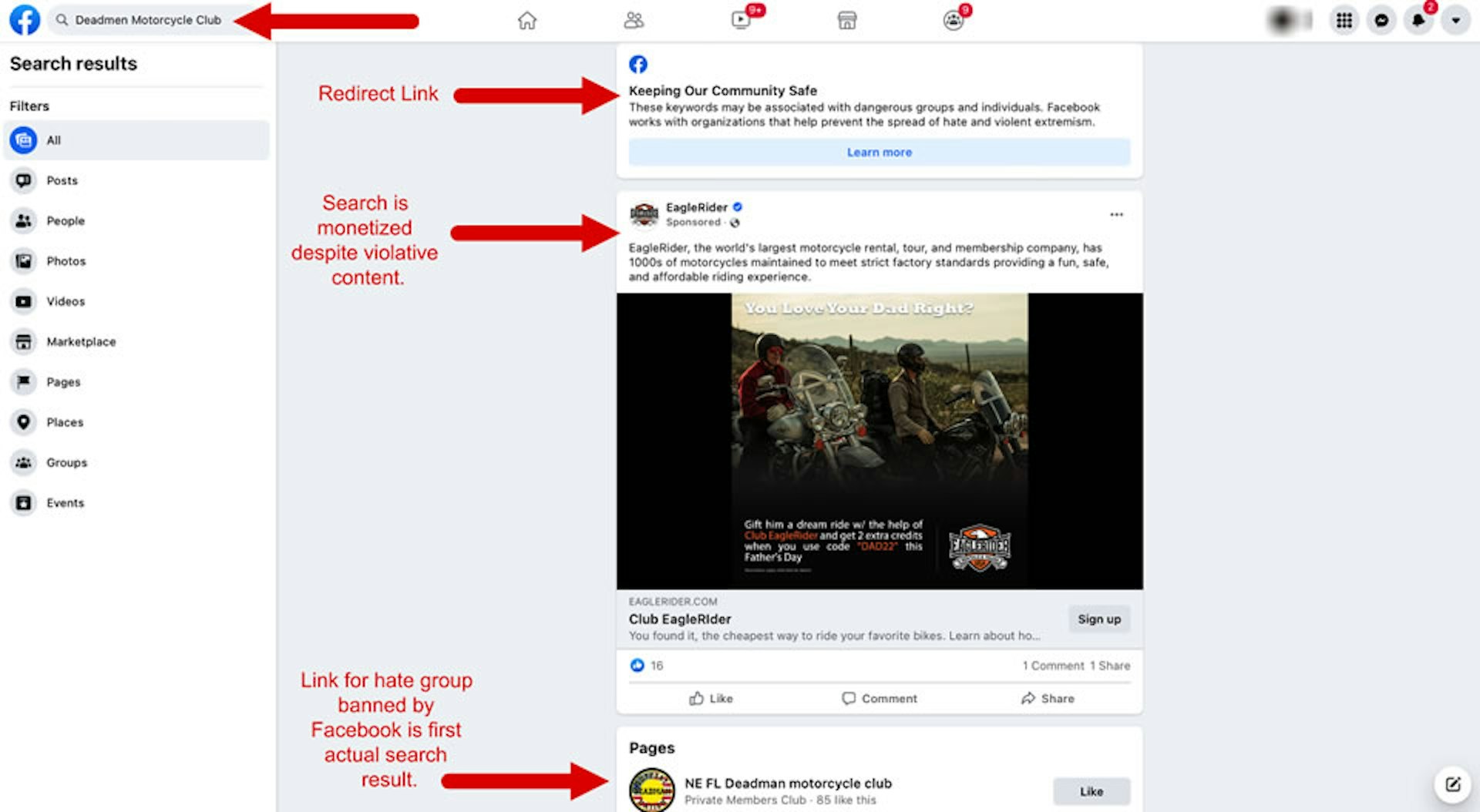

In some cases when Facebook redirected to Life After Hate, it still served up a link to the white supremacist group in question and monetized the search with ads, as seen in the example below. (The “Deadmen Motorcycle Club” is on Facebook’s banned list of “dangerous organizations.”)

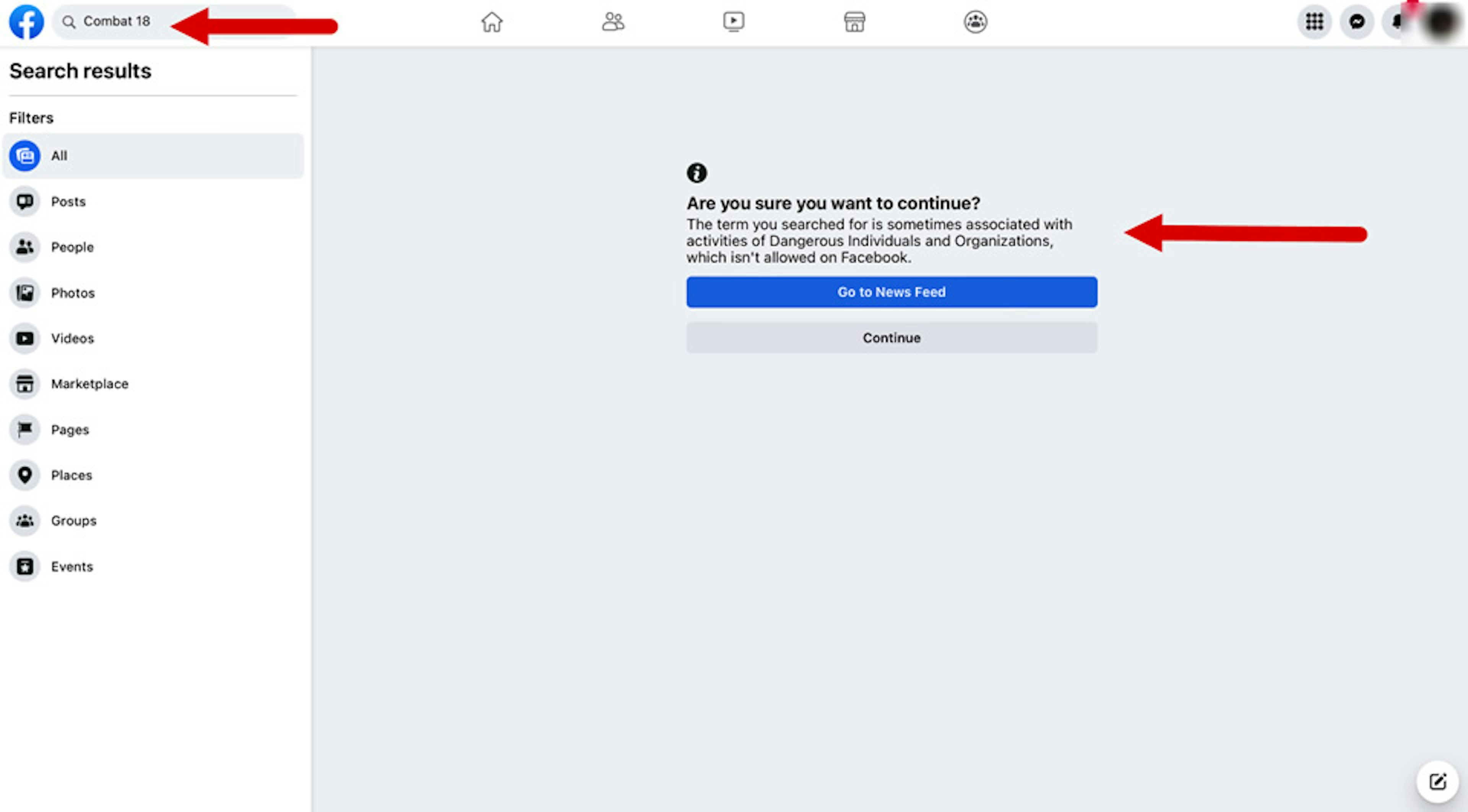

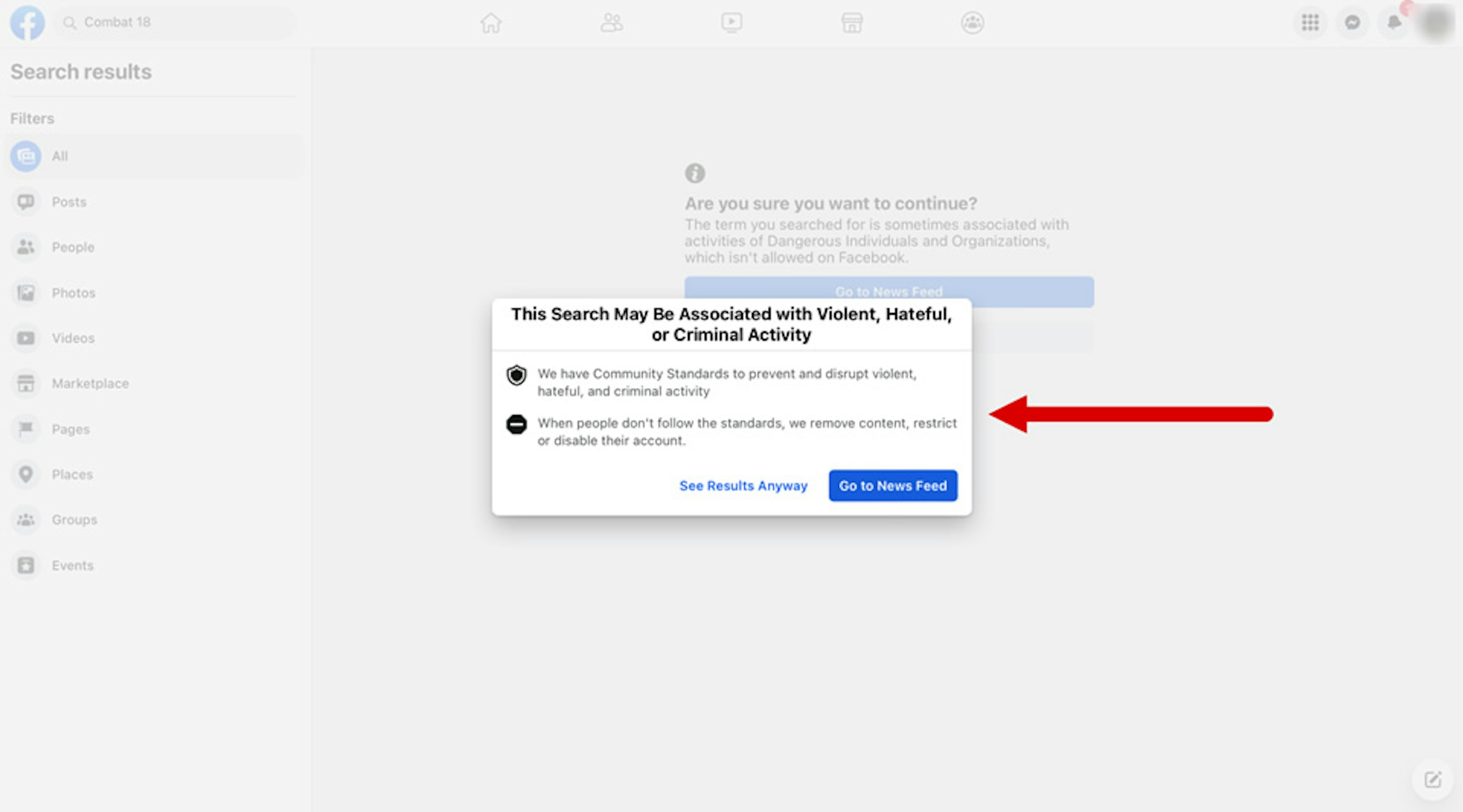

One of our searches for a white supremacist group made clear that Facebook has the capability to make its content warnings stronger—if it chooses to do so.

A search for “Combat 18”—a neo-Nazi hate group that “seeks to create white-only countries through violence,” according to the Counter Extremism Project—triggered multiple layers of warnings that the user had to click through to get the search results. The messages included “Are you sure you want to continue?” and “This Search May Be Associated with Violent, Hateful, or Criminal Activity.”

This added level of friction appears designed to discourage users from accessing white supremacist-related content. It's unclear why Facebook only applied this method to one of the 226 white supremacist groups in TTP’s investigation.

Conclusion

Despite numerous warnings about its role in promoting extremism, Facebook has failed to effectively address the presence of white supremacist organizations on its platform. To make matters worse, the company is often monetizing searches for these hate groups, profiting off them through advertising.

In some cases, Facebook is automatically generating Pages for white supremacist organizations, and it’s amplifying hateful content through its algorithmic Related Pages feature.

Facebook says it bans white supremacist organizations. But these findings make clear that the company is more focused on profit than it is on removing hate groups and hateful ideologies that it has promised to purge from its platform.

More on methodology

TTP used ADL’s glossary of white supremacist terms and movements to identify relevant groups in the Hate Symbols Database. With the SPLC Hate Map, TTP used the 2021 map categories of Ku Klux Klan, neo-Confederate, neo-Nazi, neo-völkisch, racist skinhead, and white nationalist to identify relevant groups. TTP also included white supremacist groups from the hate category of Facebook’s list of banned dangerous individuals and organizations. Thirty-one of the 78 Facebook-listed groups are also designated by the ADL and/or the SPLC. Each group was searched only once, even if it was listed by multiple organizations.
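As a rough illustration of searching each group only once when it appears on more than one list, the Python sketch below merges three short sample lists into a single set of search terms. The sample entries are a handful of groups whose designations are mentioned in this report, not the full lists, and may be incomplete. The matching rule (trimmed, case-insensitive names) and the names `SOURCE_LISTS` and `merge_lists` are assumptions made for this example and do not describe TTP's actual process.

```python
from collections import defaultdict

# Illustrative excerpts only: a few groups and designations mentioned in the
# report. The real lists are far longer.
SOURCE_LISTS = {
    "ADL": ["Rise Above Movement", "Daily Stormer", "Public Enemy Number 1"],
    "SPLC": [
        "Rise Above Movement",
        "Daily Stormer",
        "Vinland Clothing",
        "Honorable Sacred Knights of the Ku Klux Klan",
    ],
    "Facebook": ["Rise Above Movement", "Daily Stormer", "Vinland Clothing"],
}


def merge_lists(source_lists):
    """Map each distinct group name to the set of sources that list it."""
    merged = defaultdict(set)
    for source, names in source_lists.items():
        for name in names:
            # Assumption: the same group is spelled the same way on every
            # list, apart from surrounding whitespace and letter case.
            merged[name.strip().casefold()].add(source)
    return dict(merged)


groups = merge_lists(SOURCE_LISTS)

# One search per distinct group, however many lists it appears on.
search_terms = sorted(groups)

# Facebook-listed groups, and those also designated by the ADL and/or SPLC.
facebook_listed = [name for name, srcs in groups.items() if "Facebook" in srcs]
overlap = [name for name in facebook_listed if groups[name] & {"ADL", "SPLC"}]

print(len(search_terms), "distinct groups to search")
print(len(overlap), "of", len(facebook_listed), "Facebook-listed groups overlap")
```

Keeping the set of sources for each name is what makes it possible to report subsets later, such as the Facebook-listed groups on their own.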

TTP’s report on Facebook and white supremacists in May 2020 used a similar methodology, but did not include the groups from Facebook’s dangerous organizations list, which wasn’t available at the time. Facebook has never officially released that list—despite a recommendation by its own Oversight Board to do so—though The Intercept made it public in October 2021. It’s not clear if Facebook has added or removed groups from its list since then.
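The percentages quoted in this report can be recomputed from the counts given alongside them. The Python sketch below is an illustration for readers who want to check the arithmetic. The labels and the `rate` helper are names chosen for this example, and rounding to the nearest whole percent is an assumption about how the figures were presented.

```python
# (count, total, percentage stated in the report)
REPORTED = {
    "groups with a Facebook presence": (84, 226, 37),
    "present groups with two or more Pages or Groups": (38, 84, 45),
    "Facebook-listed groups with a presence": (38, 78, 49),
    "searches monetized on Facebook": (91, 226, 40),
    "searches monetized on Google": (27, 226, 12),
    "Pages auto-generated by Facebook": (24, 119, 20),
    "Pages showing Related Pages": (69, 119, 58),
    "searches triggering the redirect": (31, 226, 14),
    "Facebook-listed searches triggering the redirect": (25, 78, 32),
}


def rate(count, total):
    """Return count/total as a percentage rounded to the nearest whole number."""
    return round(100 * count / total)


recomputed = {label: rate(c, t) for label, (c, t, _) in REPORTED.items()}

for label, (count, total, stated) in REPORTED.items():
    print(f"{label}: {count}/{total} = {recomputed[label]}% (report: {stated}%)")

# Facebook's monetized searches relative to Google's, over the same 226 groups.
facebook_to_google = 91 / 27
print(f"Facebook monetized {facebook_to_google:.1f} times as many searches as Google")
```

Each recomputed figure matches the rounded percentage stated in the text, and the Facebook-to-Google ratio comes out above three.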

[1] Regarding the visualization above, TTP found that white supremacist groups were associated with 119 Facebook Pages. Of those Pages, 69 recommended other forms of content through the Related Pages feature.

Using a clean instance of the Chrome browser and a new Facebook account, TTP visited the 69 Pages and recorded the recommended Related Pages. As the visualization shows, the Related Pages frequently directed people to other forms of extremist content, in some cases to other organizations designated as hate groups by the Southern Poverty Law Center, the Anti-Defamation League, and even Facebook itself.