Dozens of white supremacist groups are operating freely on Facebook, allowing them to spread their message and recruit new members, according to a Tech Transparency Project (TTP) investigation, which found the activity is continuing despite years of promises by the social network that it bans hate organizations.

TTP recently documented how online extremists, including many with white supremacist views, are using Facebook to plan for a militant uprising dubbed the “boogaloo,” as they stoke fears that coronavirus lockdowns are a sign of rising government repression. But TTP’s latest investigation reveals Facebook’s broader problems with white supremacist groups, which are using the social network’s unmatched reach to build their movement.

The findings, more than two years after Facebook hosted an event page for the deadly “Unite the Right” rally in Charlottesville, Virginia, cast doubt on the company’s claims that it’s effectively monitoring and dealing with hate groups. What’s more, Facebook’s algorithms create an echo chamber that reinforces the views of white supremacists and helps them connect with each other.

With millions of people now quarantining at home and vulnerable to ideologies that seek to exploit people’s fears and resentments about Covid-19, Facebook’s failure to remove white supremacist groups could give these organizations fertile new ground to attract followers.

Facebook’s Community Standards prohibit hate speech based on race, ethnicity, and other factors because it “creates an environment of intimidation and exclusion and in some cases may promote real-world violence.” The company also bans hate organizations. Since the Charlottesville violence, Facebook has announced the removal of specific hate groups and tightened restrictions on white extremist content on the platform.

“We do not allow hate groups on Facebook, overall,” CEO Mark Zuckerberg told Congress in April 2018. “So, if — if there's a group that — their primary purpose or — or a large part of what they do is spreading hate, we will ban them from the platform, overall.”

To test those claims, TTP conducted searches on Facebook for the names of 221 white supremacist organizations that have been designated as hate groups by the Southern Poverty Law Center (SPLC) and the Anti-Defamation League (ADL), two leading anti-hate organizations.

The analysis found:

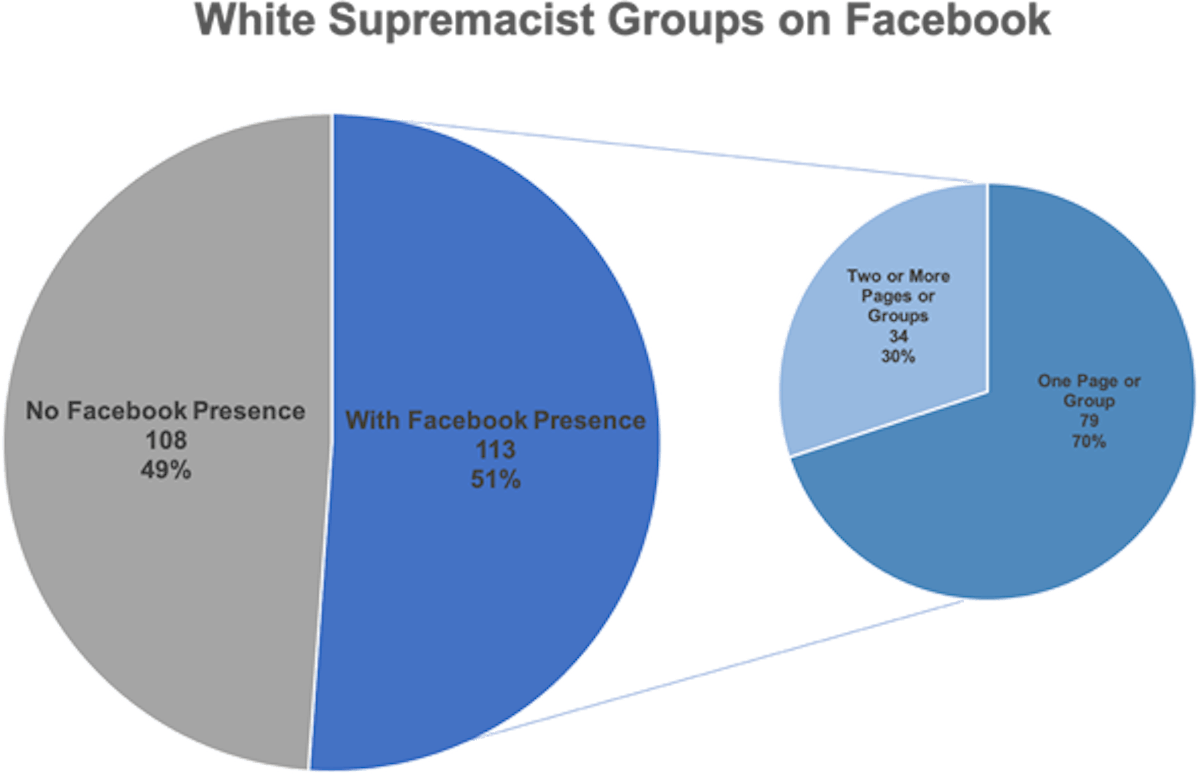

- Of the 221 designated white supremacist organizations, more than half—51%, or 113 groups—had a presence on Facebook.

- Those organizations are associated with a total of 153 Facebook Pages and four Facebook Groups. Roughly one third of those organizations (34) had two or more Pages or Groups on Facebook. Some had Pages that have been active on the platform for a decade.

- Many of the white supremacist Pages identified by TTP were created by Facebook itself. Facebook auto-generated them as business pages when someone listed a white supremacist or neo-Nazi organization as their employer.

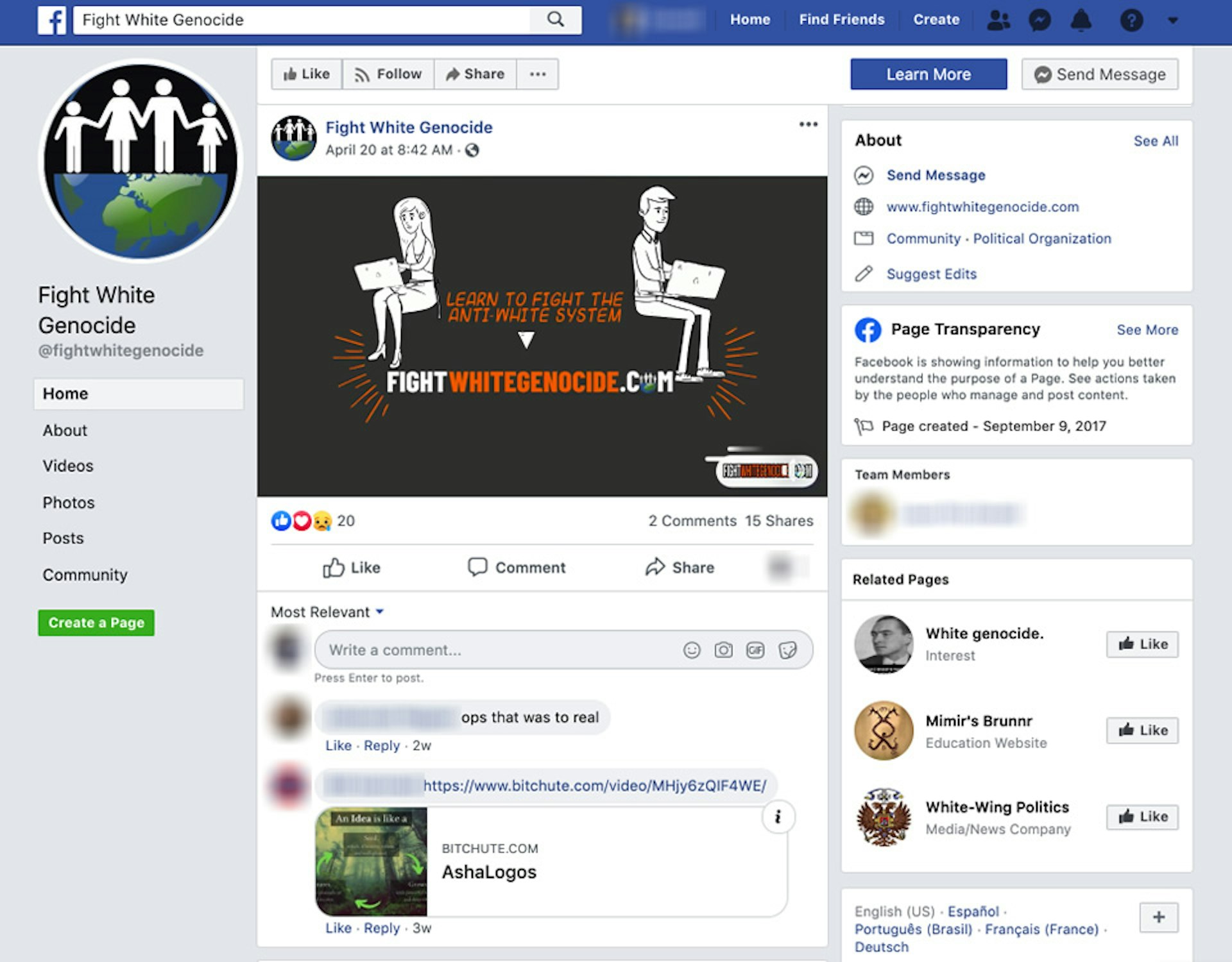

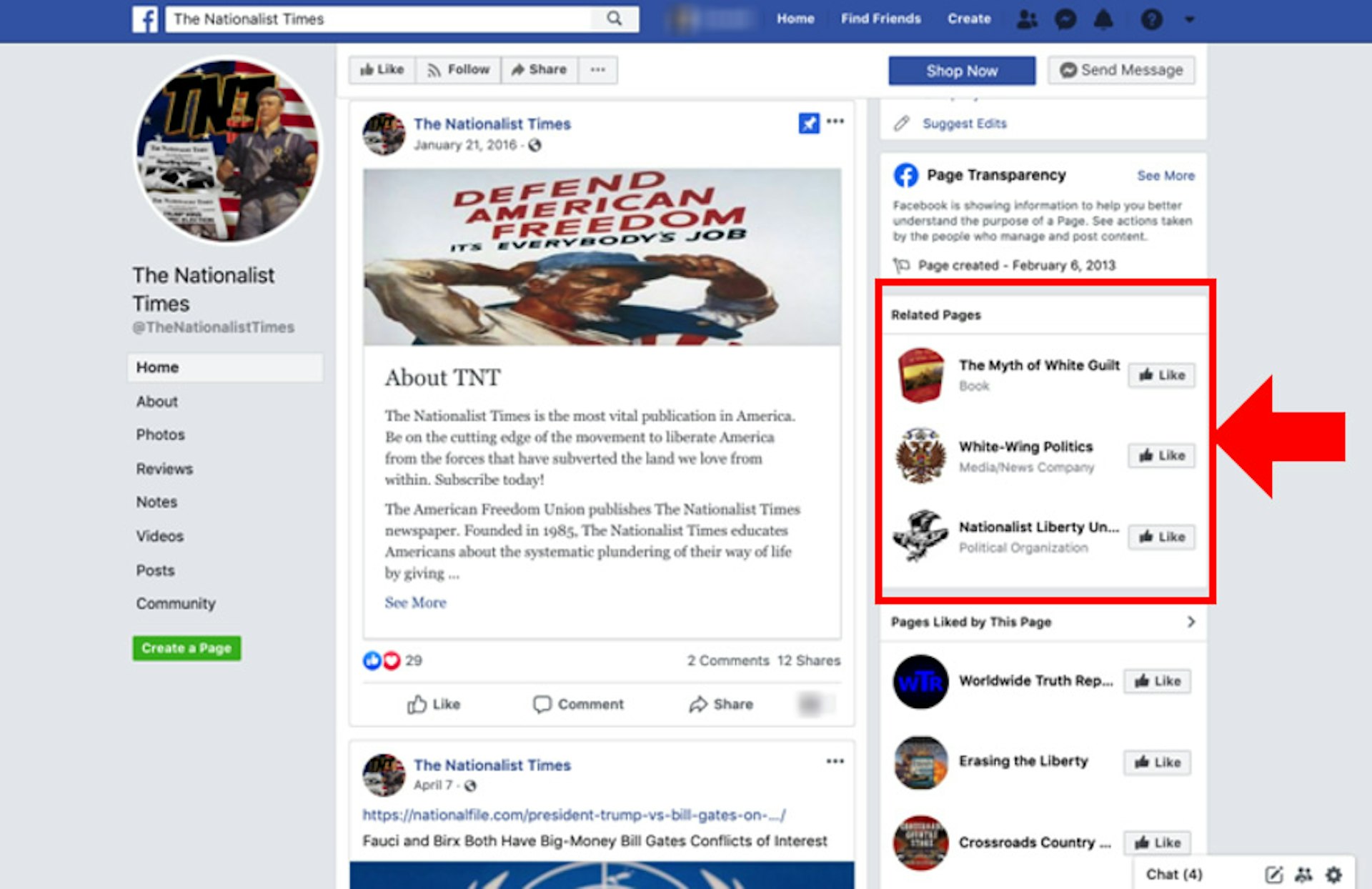

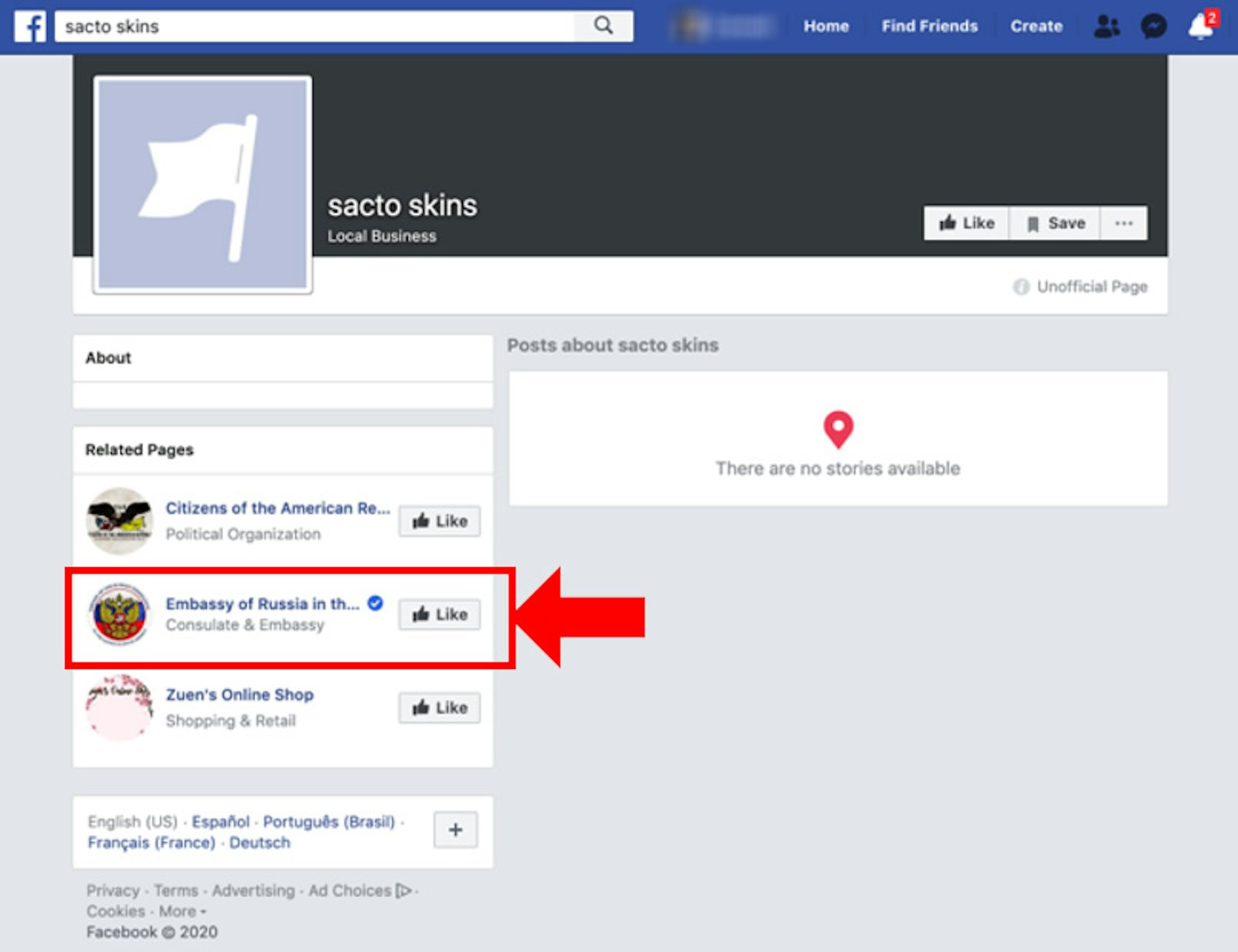

- Facebook’s “Related Pages” feature often directed users visiting white supremacist Pages to other extremist or far-right content, raising concerns that the platform is contributing to radicalization.

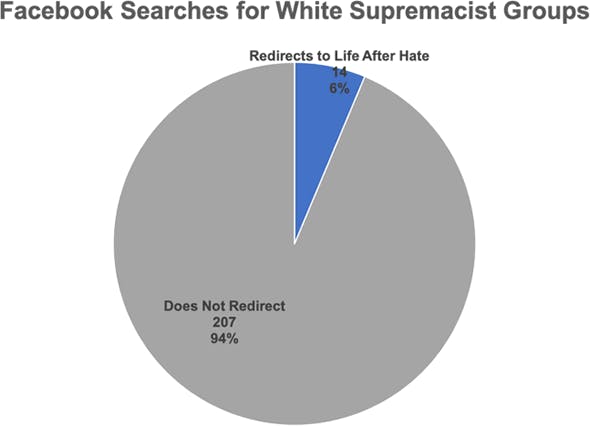

- One of Facebook’s strategies for combatting extremism—redirecting users who search for terms associated with white supremacy or hate groups to the Page for “Life After Hate,” an organization that promotes tolerance—only worked in 6% (14) of the 221 searches for white supremacist organizations.

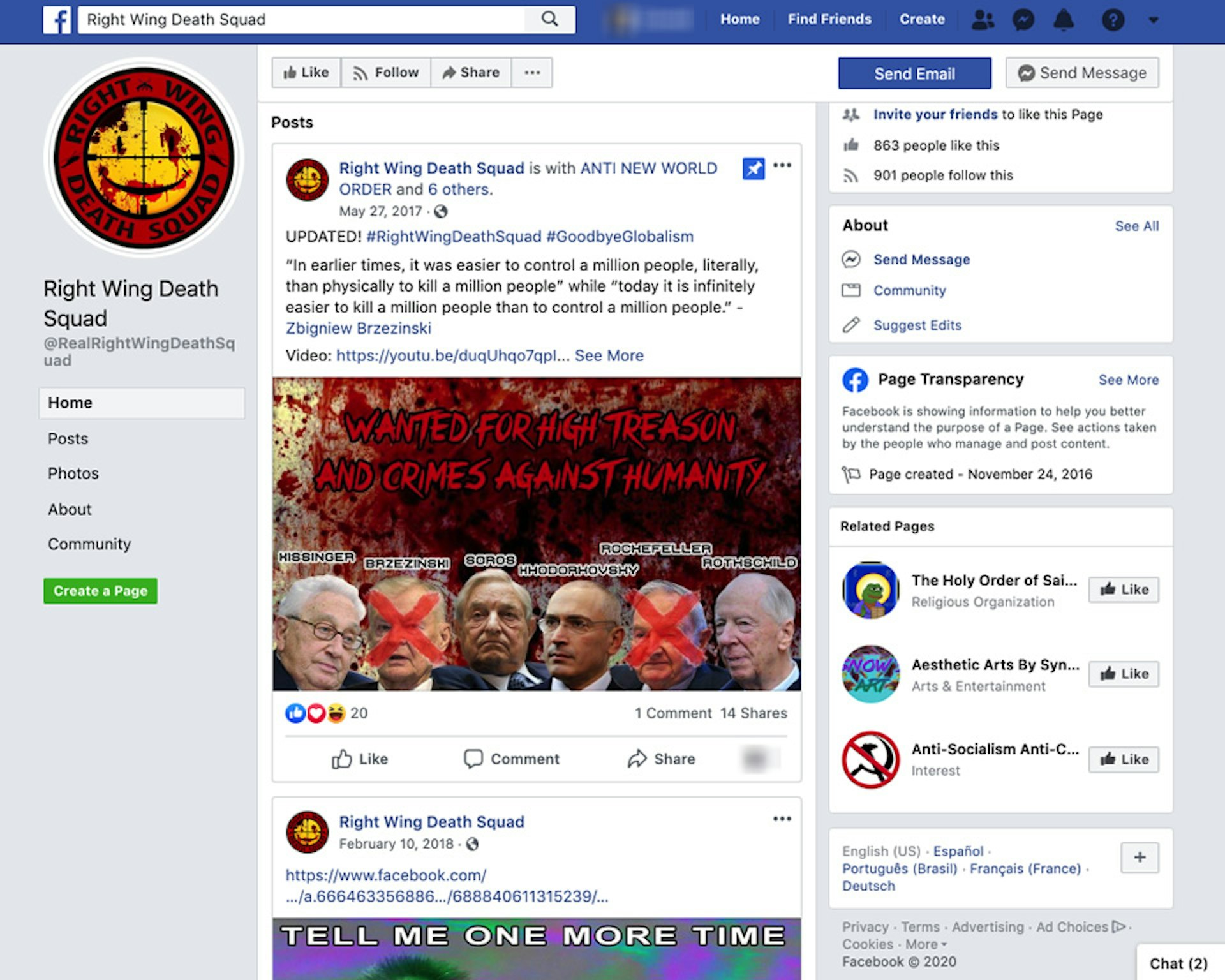

- In addition to the hate groups designated by SPLC and ADL, TTP found white supremacist organizations that Facebook had explicitly banned in the past. One known as “Right Wing Death Squad” had at least three Pages on Facebook, all created prior to Facebook’s ban.

TTP created a visualization to illustrate how Facebook’s Related Pages connect white supremacist groups with each other and with other hateful content.[1]

Facebook is Creating Pages for Hate Groups

TTP examined the Facebook presence of 221 hate groups affiliated with white supremacy. The groups were identified via the Anti-Defamation League’s (ADL) Hate Symbols Database and the Southern Poverty Law Center’s (SPLC) 2019 Hate Map, an annual census of hate groups operating in the U.S.

TTP used ADL’s glossary of white supremacist terms and movements to identify relevant groups in the Hate Symbols Database. With the SPLC Hate Map, TTP used the 2019 map categories of Ku Klux Klan, neo-Confederate, neo-Nazi, neo-völkisch, racist skinhead, and white nationalist to identify relevant groups. Of the 221 groups identified by TTP, 21 were listed in both the ADL and SPLC databases.

TTP found that 51% (113) of the organizations examined had a presence on Facebook in the form of Pages or Groups. Of the 113 hate groups with a presence, 34 had two or more associated Pages on Facebook, resulting in a total of 153 individual Pages and four individual Groups.

Roughly 36% of the content identified (52 Facebook Pages and four Facebook Groups) was created by users. One user-generated Page for a group designated as white nationalist by SPLC had more than 42,000 “likes” on Facebook and has been active since 2010.

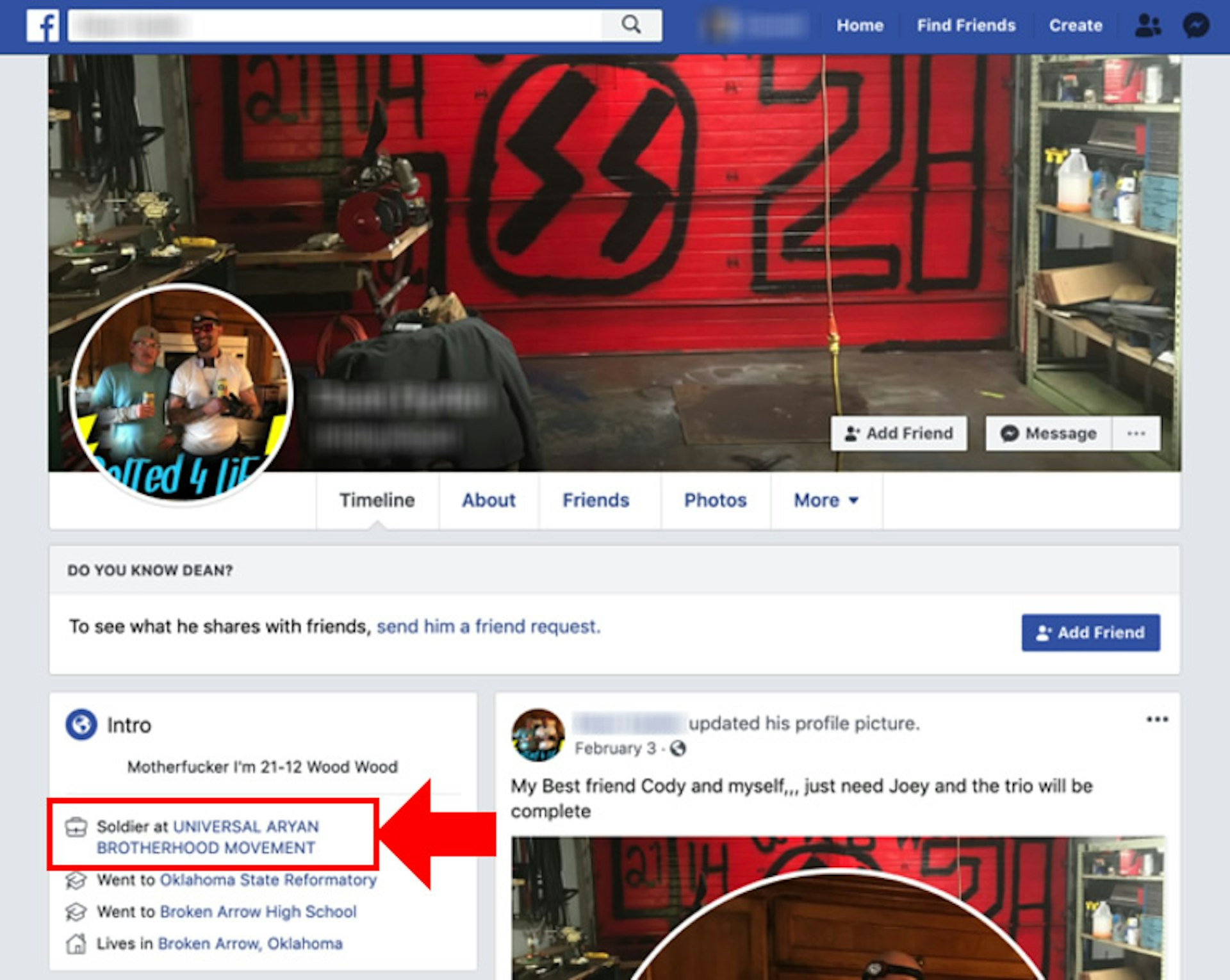

The remaining 64% of the white supremacy content identified by TTP involved Pages that had been auto-generated by Facebook. These Pages are automatically created by Facebook when a user lists a job in their profile that does not have an existing Page. When a user lists their work position as “Universal Aryan Brotherhood Movement,” for instance, Facebook generates a business page for that group.
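The percentages in this section follow from the counts reported above. As a quick check, the sketch below recomputes them in Python. The figure of 101 auto-generated Pages is not stated in the report; it is derived here as 153 minus 52, and the variable names are illustrative only.

```python
# Counts reported in this section.
groups_examined = 221
groups_on_facebook = 113
total_pages = 153
total_groups = 4
user_pages = 52
user_groups = 4

# Derived, not stated in the report: Pages that were not user-created.
auto_generated_pages = total_pages - user_pages

# All Pages and Groups identified, and the user-created subset.
total_items = total_pages + total_groups
user_items = user_pages + user_groups

presence_share = groups_on_facebook / groups_examined
user_share = user_items / total_items
auto_share = auto_generated_pages / total_items

print(f"Groups with a Facebook presence: {presence_share:.0%}")  # 51%
print(f"User-created content: {user_share:.0%}")                 # 36%
print(f"Auto-generated content: {auto_share:.0%}")               # 64%
```

The user-created and auto-generated shares are both measured against the combined 157 Pages and Groups, which is why they sum to 100%.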

Since the publication of this report, Facebook has removed 137 of the 153 white supremacist Pages identified by TTP, including all but two of the auto-generated Pages. All of the Facebook Groups identified by TTP, however, remained active.

The auto-generation problem has existed for some time. In April 2019, an anonymous whistleblower filed a Securities and Exchange Commission (SEC) petition regarding extremism on the platform and Facebook’s practice of auto-generating business pages for terrorist and white supremacist groups. Some of these Facebook-generated Pages gained thousands of “likes,” giving the groups a way to identify potential recruits, according to the whistleblower.

One of the auto-generated hate group Pages with the most “likes” in TTP’s analysis was for the Council of Conservative Citizens, an SPLC-designated white nationalist group. The group made headlines in 2015 after an online manifesto linked to white supremacist Dylann Roof referenced the organization; Roof opened fire at a historically black church in South Carolina, killing nine people. Facebook’s auto-generated Page for the Council of Conservative Citizens included a description of the group’s white supremacist affiliations, complete with a direct link to its website.

Facebook’s role in creating Pages for organizations like these undermines the company’s claims that it bars hate groups.

"Our rules have always been clear that white supremacists are not allowed on our platform under any circumstances."

— Neil Potts, Facebook public policy director

Related Pages: Facebook’s Extremist Echo Chamber

The TTP review highlights flaws in Facebook’s content moderation system, which relies heavily on artificial intelligence (AI) and Facebook users to report problematic content to human moderators for review.

Relying on users to identify objectionable material doesn’t work well when the platform is designed to connect users with shared ideologies, experts have noted, since white supremacists are unlikely to object to racist content they see on Facebook. “A lot of Facebook’s moderation revolves around users flagging content. When you have this kind of vetting process, you don’t run the risk of getting thrown off Facebook,” according to SPLC research analyst Keegan Hankes.

Artificial intelligence, which Facebook has touted for years as the solution to identifying and removing bad content, also has limitations when it comes to hate speech. AI can miss deliberate misspellings; manipulation of words to include numbers, symbols, and emojis; and missing spaces in sentences. Neo-Nazis, for example, have managed to avoid detection through simple measures like replacing “S” with “$.”
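To illustrate why such simple evasions can work, here is a minimal sketch of a naive keyword filter next to a normalizing variant. It is not Facebook’s actual system. The blocklist term, the substitution table, and the function names are all hypothetical, chosen only to show the mechanism.

```python
import re

# Hypothetical placeholder term; real blocklists are far larger.
BLOCKLIST = ["example group"]

# Assumed look-alike substitutions, e.g. "$" standing in for "s".
SUBSTITUTIONS = str.maketrans(
    {"$": "s", "0": "o", "1": "i", "3": "e", "4": "a", "@": "a"}
)


def naive_match(text: str) -> bool:
    """Exact substring match after lowercasing only."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


def normalize(text: str) -> str:
    """Lowercase, undo look-alike substitutions, and drop everything but letters."""
    mapped = text.lower().translate(SUBSTITUTIONS)
    return re.sub(r"[^a-z]", "", mapped)


def normalized_match(text: str) -> bool:
    """Compare normalized text against normalized blocklist terms."""
    cleaned = normalize(text)
    return any(normalize(term) in cleaned for term in BLOCKLIST)


samples = ["Example Group", "ExampleGroup", "Ex4mple Gr0up", "Ex@mple-Group$"]
for sample in samples:
    # Prints the sample, the naive result, and the normalized result.
    print(sample, naive_match(sample), normalized_match(sample))
```

In this sketch the naive filter catches only the first sample, while the normalized filter catches all four. The tradeoff is that stripping spaces and symbols can also match innocent text that happens to contain the same letters in sequence, so normalization alone is not a complete answer.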

At the same time, Facebook’s algorithms can create an echo chamber of white supremacism through the “Related Pages” feature, which suggests similar Pages to keep users engaged on a certain topic. TTP’s investigation found that among the 113 hate groups that had a Facebook presence, 77 had Pages that displayed Related Pages, often pointing people to other extremist or right-wing content. In some cases, the Related Pages directed users to additional SPLC- or ADL-designated hate groups.

For example, TTP found that the user-generated Page for Nazi Low Riders, an ADL-listed hate group, showed Related Pages for other groups associated with white supremacy. The top recommendation was another user-generated Page called “Aryanbrotherhood.” (By omitting the space between the two words, the Page may have been trying to evade Facebook’s AI systems, as discussed above.) The Aryan Brotherhood is “the oldest and most notorious racist prison gang in the United States,” according to ADL.

The Aryanbrotherhood Facebook Page in turn displayed Related Pages for more white supremacist ideologies, some of them making reference to “peckerwoods,” a term associated with racist prison and street gangs.

The Related Pages listed on the user-generated Page of American Freedom Union, an SPLC-designated white nationalist group, included a link to a Page for the book “White Identity: Racial Consciousness in the 21st Century.” The book was authored by Jared Taylor, who runs the website for American Renaissance, another SPLC-designated white nationalist group.

Facebook’s algorithms even pick up on links between organizations that may not be obvious to others. For example, the auto-generated Page for Sacto Skins, a short form of the SPLC-designated racist hate group Sacto Skinheads, included a Related Page recommendation for Embassy of Russia in the United States. A recent investigation by The New York Times found that Russian intelligence services are using Facebook and other social media to try to incite white supremacists ahead of the 2020 election.

This web of white supremacist Pages surfaced by Facebook’s algorithms is not new. The non-profit Counter Extremism Project, in a 2018 report about far-right groups on Facebook, identified multiple white supremacist and far-right Pages by following the Related Pages feature.

Banned Groups Persist

Facebook’s Community Standards have included rules against hate speech for years, but in the past three years the company has expanded its efforts.

One significant change came quietly in 2017, following mounting reports about white supremacist activity on Facebook. The company didn’t publicly announce a policy change, but the Internet Archive shows that in mid-July, it added “organized hate groups” to the “Dangerous Organizations” section of its Community Standards. (The change is visible when comparing archived versions of the page from before and after that date.) The company did not, however, specify how it would define such hate groups.

Unite the Right rally participants preparing to enter Lee Park in Charlottesville, Virginia on August 12, 2017. Photo by Anthony Crider.

Despite the policy update, Facebook didn’t immediately take down an event page for the “Unite the Right” rally, which SPLC had tied to neo-Nazis. According to one media report, Facebook only pulled the listing the day before the rally, in which one woman was killed and more than a dozen others injured when a white supremacist drove into a crowd of counter-protestors in Charlottesville.

Amid the ensuing public outcry, Facebook announced removals of a number of hate groups including White Nationalists United and Right Wing Death Squad.

Facebook scrambled again in early 2019 following the Christchurch attack, in which a gunman used Facebook to stream the massacre of 51 people at a pair of mosques in New Zealand. As the killings made headlines around the world, the company said it would ban “white nationalist” content along with the previously banned category of white supremacism. Facebook Chief Operating Officer Sheryl Sandberg also said a handful of hate groups in Australia and New Zealand would be banned.

Two months after the New Zealand attack, however, BuzzFeed News found that extremist groups Facebook claimed to have banned were still on the platform. Later that year, The Guardian identified multiple white nationalist Pages on Facebook but said the company “declined to take action against any of the pages identified.” Online extremism expert Megan Squire told BuzzFeed, “Facebook likes to make a PR move and say that they’re doing something but they don’t always follow up on that.”

Research suggests there continues to be a gap between Facebook’s public relations responses and the company’s enforcement of its own policies. A recent report by TTP found that videos of the Christchurch attack continued to circulate on the platform a year later, despite Facebook’s vow to remove them.

Since 2017, Facebook has announced removals of at least 14 white supremacist and white nationalist groups in the U.S. and Canada, according to media reports tallied by TTP. (Only one of these groups, Vanguard America, is included in TTP’s review of 221 white supremacist groups named by the SPLC and ADL.) Of the 14 groups, four continue to have an active presence on Facebook: Awakening Red Pill, Wolves of Odin, Right Wing Death Squad, and Physical Removal.

TTP identified three user-generated Pages for Right Wing Death Squad that are currently active on Facebook. All three Pages identified by TTP were created before the Unite the Right rally and were never removed by Facebook.

The Right Wing Death Squad Pages include extremist language as well as references to the “boogaloo,” the term used by extremists to reference a coming civil war. Some of the Right Wing Death Squad Pages brand themselves as anti-globalist, a term often considered a dog whistle for anti-Semitism.

In March 2020, Facebook announced the removal of a network of white supremacists linked to the Northwest Front, an SPLC-designated hate group that has been called “the worst racists” in America. Facebook’s director of counterterrorism Brian Fishman said the action came after the group, which had been banned for years, tried to “reestablish a presence” on the platform. TTP, however, found that the auto-generated Page for Northwest Front was not removed and that searches for the group’s name on Facebook still fail to trigger the company’s redirect to Life After Hate.

Facebook also said that in April 2020 it removed a network of accounts linked to VDARE, an SPLC-designated white nationalist group, and individuals associated with a similar website called The Unz Review. Facebook said the group had engaged in “suspected coordinated inauthentic behavior ahead of the 2020 election,” and described VDARE’s anti-immigrant focus without mentioning its link to white nationalism. According to Facebook, the network spent a total of $114,000 on advertising through the platform.

As with the action against the Northwest Front, Facebook failed to remove the auto-generated VDARE Page. Clicking on the Page’s link to the VDARE website generates a notice that states, “The link you tried to visit goes against our Community Standards.” Still, it is unclear why Facebook allows the auto-generated Page to stay up when it acknowledges the group violates its Community Standards.

Failing to Direct Away from Hate

As part of Facebook’s expanded efforts to combat white supremacy on the platform following the Christchurch attack, the company said in March 2019 that it would redirect users who search for terms related to hate.

“Searches for terms associated with white supremacy will surface a link to Life After Hate’s Page, where people can find support in the form of education, interventions, academic research and outreach,” the company announced.

TTP found that not only did Facebook’s anti-hate link fail to surface in the majority of hate group searches, but in some cases, the platform directed users to other white supremacist Pages.

TTP conducted a search for each of the 221 hate groups associated with white supremacy and white nationalism listed by SPLC and ADL. Only 6% of the searches (14 groups) surfaced the link to Life After Hate.

One factor may be that not all of the hate groups listed by SPLC and ADL make their ideologies obvious in their names. But even organizations that have “Nazi” or “Ku Klux Klan” in their names escaped the redirect effort. Of 25 groups with “Ku Klux Klan” in their official name, only one triggered the link to anti-hate resources.

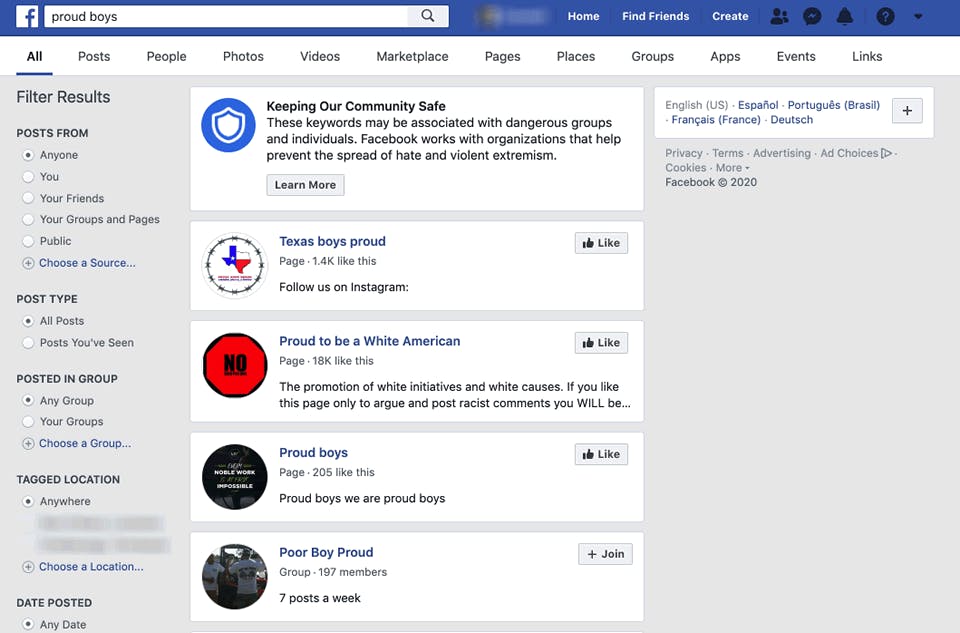

The redirect tool even failed to work on groups that Facebook has explicitly banned. TTP used Facebook’s search function to search the names of the 14 white supremacist groups in North America that Facebook said it had banned. The Proud Boys were the only one of the groups to trigger the platform’s Life After Hate link.

Facebook began removing accounts and pages linked to the far-right Proud Boys in October 2018 after members of the group clashed with anti-fascist protestors. Searches for the group today generate Facebook’s Life After Hate link, and TTP did not find any official Proud Boys Pages on the platform.

But the Facebook search for Proud Boys did bring up a Page for “Proud to be a White American,” which describes itself as being for “The promotion of white initiatives and white causes.” (Notably, the “Proud to be a White American” Page is listed above a Page called “Proud Boys” that does not appear to be affiliated with the far-right group.)

Note: Updated to reflect that Facebook took down some of the Pages identified by TTP following publication of this report. (Latest updated figures as of June 7, 2020.)

[1] Regarding the visualization above, TTP identified 113 white supremacist groups with a presence on Facebook. Of those groups, 77 had Pages that recommended other forms of content through the Related Pages feature.

Using a clean instance of the Chrome browser and without logging into an account so as not to bias Facebook’s recommendation algorithm with browsing history or user preferences, TTP visited the Facebook Pages linked to these 77 groups and recorded the Related Pages. As the visualization shows, the Related Pages frequently directed people to other forms of extremist content, in some cases to other organizations designated as hate groups by the Southern Poverty Law Center and Anti-Defamation League.
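The recording step described in this note can be thought of as building a directed graph, with each Page as a node and each Related Pages recommendation as an edge. The sketch below is an assumption about how such data might be organized, not TTP’s actual tooling. The three edges are examples mentioned in this report, and the function names are hypothetical.

```python
from collections import defaultdict

# (visited Page, recommended Related Page) pairs taken from examples in this report.
observations = [
    ("Nazi Low Riders", "Aryanbrotherhood"),
    ("American Freedom Union",
     "White Identity: Racial Consciousness in the 21st Century"),
    ("Sacto Skins", "Embassy of Russia in the United States"),
]


def build_graph(pairs):
    """Map each visited Page to the set of Pages it recommended."""
    graph = defaultdict(set)
    for source, related in pairs:
        graph[source].add(related)
    return graph


def reachable(graph, start):
    """Return every Page reachable from `start` by following recommendations."""
    seen, stack = set(), [start]
    while stack:
        page = stack.pop()
        for neighbor in graph.get(page, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return seen


graph = build_graph(observations)
print(sorted(reachable(graph, "Nazi Low Riders")))  # ['Aryanbrotherhood']
```

With a fuller set of recorded recommendations, the same traversal would show how far a visitor could travel from one Page by following Related Pages alone.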